大数据OmniMV ClickHouse 性能测试指南

发表于 2025/09/22

0

作者 | 朱勇

1 环境要求

软件环境要求:

|

软件 |

版本 |

获取方法 |

|

毕昇JDK |

jdk8u342 |

获取链接 |

|

ZooKeeper |

3.4.6 |

获取链接 |

|

ClickHouse |

22.3.6.5 |

|

节点规划(3个节点, 3个分片, 无副本):

|

主机名 |

IP地址 |

分片 |

|

agent1 |

192.168.xx.1 |

shard1 |

|

agent2 |

192.168.xx.2 |

shard2 |

|

agent3 |

192.168.xx.3 |

shard3 |

本文档基于agent1~3的IP地址编写,使用时注意IP地址的更换。

2 配置ClickHouse部署环境

1、依次登录节点。使用以下命令时需要将节点主机名分别修改为agent1、agent2、agent3。

hostnamectl set-hostname 主机名 --static

2、添加集群所有节点的映射关系。

1) 打开“/etc/hosts”文件。

vim /etc/hosts

2) 在hosts文件中添加以下内容。

IPaddress1 agent1

IPaddress2 agent2

IPaddress3 agent3

3) 按“Esc”键,输入:wq!,按“Enter”保存并退出编辑。

3、登录所有节点关闭防火墙。

systemctl stop firewalld.service

systemctl disable firewalld.service

4、登录所有节点配置SSH免密登录。

1) 生成密钥,遇到提示按“Enter”键。

ssh-keygen -t rsa

2) 在每台机器配置SSH免密登录(包括配置自身的免密)。

ssh-copy-id -i ~/.ssh/id_rsa.pub root@节点IP

5、安装JDK。

1) 获取毕昇JDK。

wget https://mirror.iscas.ac.cn/kunpeng/archive/compiler/bisheng_jdk/bisheng-jre-8u342-linux-aarch64.tar.gz

tar -zxf bisheng-jre-8u342-linux-aarch64.tar.gz -C /usr/local

cd /usr/local

ln -s bisheng-jdk1.8.0_342 jdk8us42

2) 配置环境变量。

打开“/etc/profile”文件。

vim /etc/profile

添加以下内容。

export JAVA_HOME=/usr/local/jdk8u342 export PATH=$JAVA_HOME/bin:$PATH

使环境变量生效。

source /etc/profile

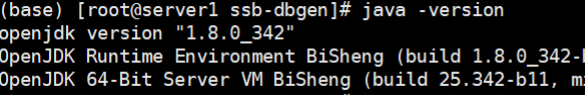

3) 验证。

java -version

3 部署ZooKeeper

1、下载并解压ZooKeeper。

登录agent1节点,进入“/usr/local”目录,下载zookeeper-3.8.0.tar.gz,并解压。

cd /usr/local

wget http://archive.apache.org/dist/zookeeper/zookeeper-3.8.0/apachezookeeper-3.8.0-bin.tar.gz

tar -zxvf apache-zookeeper-3.8.0-bin.tar.gz

2、建立软链接,便于后期版本更换。

ln -s apache-zookeeper-3.8.0-bin zookeeper

3、添加ZooKeeper到环境变量。

1) 登录agent1节点,打开配置文件。

vim /etc/profile

2) 添加ZooKeeper到环境变量。

export ZOOKEEPER_HOME=/usr/local/zookeeper export PATH=$ZOOKEEPER_HOME/bin:$PATH

3) 使环境变量生效。

source /etc/profile

4) 在agent2,agent3节点重复执行以上步骤,配置ZooKeeper的环境变量。

4、修改Zookeeper配置文件。

1) 登录agent1节点,进入ZooKeeper所在目录。

cd /usr/local/zookeeper/conf

2) 拷贝配置文件。

cp zoo_sample.cfg zoo.cfg

3) 修改配置文件。

vim zoo.cfg

dataDir=/usr/local/zookeeper/tmp

4) 在最后添加如下代码,其中server.1-3是部署ZooKeeper的节点。

server.1=agent1:2888:3888 server.2=agent2:2888:3888 server.3=agent3:2888:3888

5) 创建“tmp”目录作数据目录。

mkdir /usr/local/zookeeper/tmp

6) 在“tmp”目录中创建一个空文件,并向该文件写入ID。

touch /usr/local/zookeeper/tmp/myid

echo 1 > /usr/local/zookeeper/tmp/myid

5、同步配置到其他节点。

1) 登录agent1节点,将配置好的ZooKeeper拷贝到其它节点。

scp -r /usr/local/zookeeper root@agent2:/usr/local

scp -r /usr/local/zookeeper root@agent3:/usr/local

2) 登录agent2、agent3,创建软链接并修改myid内容。

agent2:

cd /usr/local

echo 2 > /usr/local/zookeeper/tmp/myid

agent3:

cd /usr/local

echo 3 > /usr/local/zookeeper/tmp/myid

6、运行验证。

1) 分别在agent2,agent3上启动ZooKeeper。

cd /usr/local/zookeeper/bin

./zkServer.sh start

2) 查看ZooKeeper状态。

./zkServer.sh status

4 部署ClickHouse集群

1、获取ClickHouse相关安装包,在agent1~3节点都执行。

mkdir -p /usr/local/clickhouse

cd /usr/local/clickhouse

wget https://packages.clickhouse.com/tgz/lts/clickhouse-server-22.3.6.5arm64.tgz --no-check-certificate

wget https://packages.clickhouse.com/tgz/lts/clickhouse-client-22.3.6.5arm64.tgz --no-check-certificate

wget https://packages.clickhouse.com/tgz/lts/clickhouse-commonstatic-22.3.6.5-arm64.tgz --no-check-certificate

2、解压后执行安装。

tar -zxvf clickhouse-common-static-22.3.6.5-arm64.tg

sudo ./clickhouse-common-static-22.3.6.5/install/doinst.sh

tar -zxvf clickhouse-server-22.3.6.5-arm64.tgz

sudo ./clickhouse-server-22.3.6.5/install/doinst.sh

tar -zxvf clickhouse-client-22.3.6.5-arm64.tgz

sudo ./clickhouse-client-22.3.6.5/install/doinst.sh

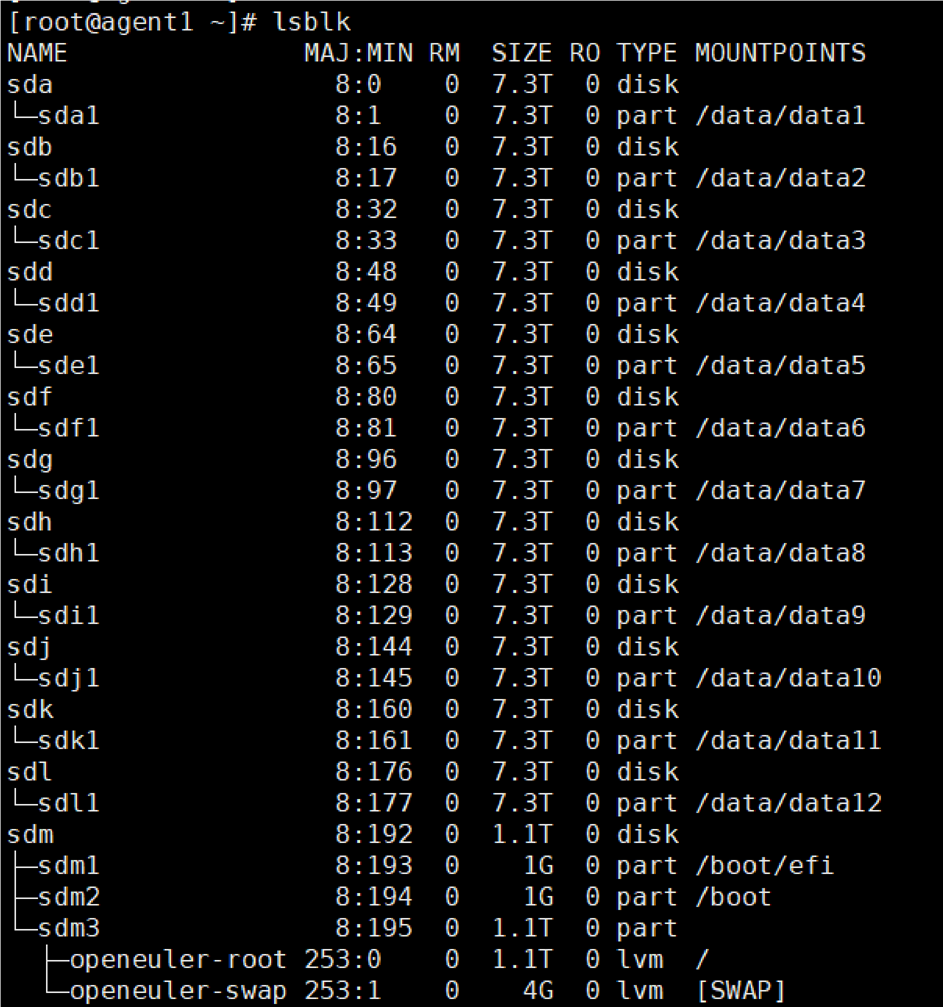

3、查看盘挂载情况。

lsblk

回显信息显示如下,agent1~3都已经把盘挂载了“/data/data1-12”等12个数据目录,后续将利用这12个数据目录存储ClickHouse的数据。

4、修改“/etc/clickhouse-server/config.xml”配置文件(文件是只读文件,需要使用:wq!保存)。

需要配置以下信息:

storage_configuration

<storage_configuration>

<disks>

<bak_disk1> <!-- disk name -->

<path>/data/data1/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk1>

<bak_disk2>

<path>/data/data2/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk2>

<bak_disk3> <!-- disk name -->

<path>/data/data3/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk3>

<bak_disk4>

<path>/data/data4/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk4>

<bak_disk5> <!-- disk name -->

<path>/data/data5/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk5>

<bak_disk6>

<path>/data/data6/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk6>

<bak_disk7> <!-- disk name -->

<path>/data/data7/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk7>

<bak_disk8>

<path>/data/data8/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk8>

<bak_disk9> <!-- disk name -->

<path>/data/data9/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk9>

<bak_disk10> <!-- disk name -->

<path>/data/data10/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk10>

<bak_disk11> <!-- disk name -->

<path>/data/data11/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk11>

<bak_disk12>

<path>/data/data12/clickhouse/data/</path>

<keep_free_space_bytes>10485760</keep_free_space_bytes>

</bak_disk12>

</disks>

<policies>

<default>

<volumes>

<single>

<disk>bak_disk1</disk>

<disk>bak_disk2</disk>

<disk>bak_disk3</disk>

<disk>bak_disk4</disk>

<disk>bak_disk5</disk>

<disk>bak_disk6</disk>

<disk>bak_disk7</disk>

<disk>bak_disk8</disk>

<disk>bak_disk9</disk>

<disk>bak_disk10</disk>

<disk>bak_disk11</disk>

<disk>bak_disk12</disk>

</single>

</volumes>

</default>

</policies>

</storage_configuration>

remote server

<remote_servers>

<mycluster>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.xx.1</host>

<port>19000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.xx.2</host>

<port>19000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.xx.3</host>

<port>19000</port>

</replica>

</shard>

</mycluster>

</remote_servers>

ZooKeeper

<zookeeper>

<node index="1">

<host>192.168.xx.1</host>

<port>2181</port>

</node>

<node index="2">

<host>192.168.xx.2</host>

<port>2181</port>

</node>

<node index="3">

<host>192.168.xx.3</host>

<port>2181</port>

</node>

</zookeeper>

macros,macros信息需要根据每个节点的情况进行适配,和节点规划对应起来。

<macros>

<cluster>mycluster</cluster>

<shard>01</shard>

<replica>01</replica>

</macros>

在agent2和agent3<macros></macros>标签与上面不同,分别填写:

<macros>

<cluster>mycluster</cluster>

<shard>02</shard>

<replica>01</replica>

</macros>

<macros>

<cluster>mycluster</cluster>

<shard>03</shard>

<replica>01</replica> </macros>

5、创建存储元数据和表数据的目录。

mkdir -p /data/data{1,2,3,4,5,6,7,8,9,10,11,12}/clickhouse/data

mkdir /var/lib/clickhouse

“/data/data{1,2,3,4,5,6,7,8,9,10,11,12}/clickhouse/data”用于存储表数据,建表时指定了storage_policy。

“/var/lib/clickhouse”用于存储元数据,建表时不指定storage_policy时存储表数据。

如果提示缺少server.crt、server.key、dhparam.pem等文件,请使用config.xml中<openssl>标签内的两条生成命令生成相应文件。

openssl req -subj "/CN=localhost" -new -newkey rsa:2048 -days 365 -nodes -x509 -keyout /etc/clickhouse-server/server.key -out /etc/clickhouse-server/server.crt openssl dhparam -out /etc/clickhouse-server/dhparam.pem 4096

如果8123端口冲突,则将<listen_host>只保留一个:<listen_host>127.0.0.1</listen_host>。

如果启动过程中发现9000端口有冲突,可以将9000端口的信息全部改成19000端口,换一个不冲突的端口(vim进入后,使用替换命令 :%s/9000/19000/g)。

6、集群验证。

1)在每个节点上启动ClickHouse Server节点。

cllickhouse start

2)进入ClickHouse Client节点。

clickhouse client -h 192.168.58.168 --port 19000 -n -m

如启动不成功或进入不了数据库,可通过以下命令启动。

/usr/bin/clickhouse-server --configfile /etc/clickhouse-server/config.xml --pid-file /var/run/clickhouse-server/clickhouse-server.pid

3)启动后关闭远程连接窗口。重新连接服务器再尝试进入数据库。

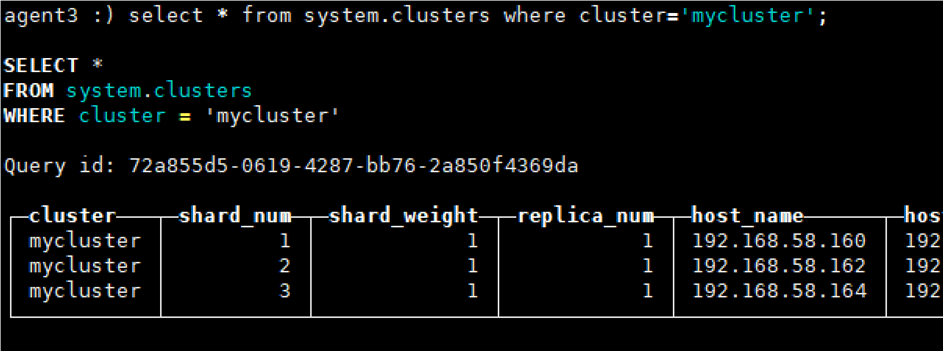

4)查看cluster信息。

select * from system.clusters where cluster='mycluster';

5)建on cluster表。

CREATE TABLE customer on cluster mycluster

(

C_CUSTKEY UInt32,

C_NAME String,

C_ADDRESS String,

C_CITY LowCardinality(String),

C_NATION LowCardinality(String),

C_REGION LowCardinality(String),

C_PHONE String,

C_MKTSEGMENT LowCardinality(String)

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/ default.customer','{replica}') ORDER BY (C_CUSTKEY);

6)建分布式表。

CREATE TABLE default.customer_all on cluster mycluster as default.cusomer

ENGINE = Distributed(mycluster, default, customer, rand());

7)查询分布式表,会利用所有的计算资源。

8)查询本地表,数据只会在同一个shard同步,然后计算资源也是只用到了本机的计算资源。

5 SSB数据集测试

5.1 准备测试数据

使用开源数据集star schema benchmark测试。

1、下载编译dbgen工具,用于生成数据。建议进入挂载在数据盘上的目录执行,在系统盘生成数据比较占用存储空间。

cd / data/data1

yum -y install gcc gcc-c++ make cmake git

git clone https://github.com/vadimtk/ssb-dbgen.git

cd ssb-dbgen

make

2、生成数据。

./dbgen -s 500 -T c

./dbgen -s 500 -T l

./dbgen -s 500 -T p

./dbgen -s 500 -T s

./dbgen -s 500 -T d

3、在ClickHouse中创建数据表。

1)通过ClickHouse Client进入,创建一个database,例如:

clickhouse client -h 192.168.xx.1 --port 19000 -m -n server1 :) create database ssb_data_source;

use ssb_data_source;

2)执行以下建表语句:

CREATE TABLE customer

(

C_CUSTKEY UInt32,

C_NAME String,

C_ADDRESS String,

C_CITY LowCardinality(String),

C_NATION LowCardinality(String), C_REGION LowCardinality(String),

C_PHONE String,

C_MKTSEGMENT LowCardinality(String)

)

ENGINE = MergeTree ORDER BY (C_CUSTKEY) SETTINGS storage_policy = 'default';

CREATE TABLE part

(

P_PARTKEY UInt32,

P_NAME String,

P_MFGR LowCardinality(String),

P_CATEGORY LowCardinality(String),

P_BRAND LowCardinality(String), P_COLOR LowCardinality(String),

P_TYPE LowCardinality(String),

P_SIZE UInt8,

P_CONTAINER LowCardinality(String)

)

ENGINE = MergeTree ORDER BY P_PARTKEY SETTINGS storage_policy = 'default';

CREATE TABLE supplier

(

S_SUPPKEY UInt32,

S_NAME String,

S_ADDRESS String,

S_CITY LowCardinality(String),

S_NATION LowCardinality(String),

S_REGION LowCardinality(String),

S_PHONE String

)

ENGINE = MergeTree ORDER BY S_SUPPKEY

SETTINGS storage_policy = 'default';

4、在“ssb-dbgen”目录下导入数据。

clickhouse client -h 192.168.xx.1 --port 19000 -m -n -d ssb_data_source --query "INSERT INTO customer FORMAT CSV" < customer.tbl

clickhouse client -h 192.168.xx.1 --port 19000 -m -n -d ssb_data_source --query "INSERT INTO part FORMAT CSV" < part.tbl

clickhouse client -h 192.168.xx.1 --port 19000 -m -n -d ssb_data_source --query "INSERT INTO supplier FORMAT CSV" < supplier.tbl

clickhouse client -h 192.168.xx.1 --port 19000 -m -n -d ssb_data_source --query "INSERT INTO lineorder FORMAT CSV" < lineorder.tbl

5、将star schema转成成flat schema,宽表lineorder_flat表有30亿行数据。

在数据库中执行以下命令。

CREATE TABLE lineorder_flat

ENGINE = MergeTree ORDER BY (LO_ORDERDATE, LO_ORDERKEY)

SETTINGS storage_policy = 'default'

AS SELECT

l.LO_ORDERKEY AS LO_ORDERKEY,

l.LO_LINENUMBER AS LO_LINENUMBER,

l.LO_CUSTKEY AS LO_CUSTKEY,

l.LO_PARTKEY AS LO_PARTKEY,

l.LO_SUPPKEY AS LO_SUPPKEY,

l.LO_ORDERDATE AS LO_ORDERDATE,

l.LO_ORDERPRIORITY AS LO_ORDERPRIORITY,

l.LO_SHIPPRIORITY AS LO_SHIPPRIORITY,

l.LO_QUANTITY AS LO_QUANTITY,

l.LO_EXTENDEDPRICE AS LO_EXTENDEDPRICE,

l.LO_ORDTOTALPRICE AS LO_ORDTOTALPRICE,

l.LO_DISCOUNT AS LO_DISCOUNT,

l.LO_REVENUE AS LO_REVENUE,

l.LO_SUPPLYCOST AS LO_SUPPLYCOST,

l.LO_TAX AS LO_TAX,

l.LO_COMMITDATE AS LO_COMMITDATE,

l.LO_SHIPMODE AS LO_SHIPMODE,

c.C_NAME AS C_NAME,

c.C_ADDRESS AS C_ADDRESS,

c.C_CITY AS C_CITY,

c.C_NATION AS C_NATION,

c.C_REGION AS C_REGION,

c.C_PHONE AS C_PHONE,

c.C_MKTSEGMENT AS C_MKTSEGMENT,

s.S_NAME AS S_NAME,

s.S_ADDRESS AS S_ADDRESS,

s.S_CITY AS S_CITY,

s.S_NATION AS S_NATION,

s.S_REGION AS S_REGION,

s.S_PHONE AS S_PHONE,

p.P_NAME AS P_NAME,

p.P_MFGR AS P_MFGR,

p.P_CATEGORY AS P_CATEGORY,

p.P_BRAND AS P_BRAND,

p.P_COLOR AS P_COLOR,

p.P_TYPE AS P_TYPE,

p.P_SIZE AS P_SIZE,

p.P_CONTAINER AS P_CONTAINER

FROM lineorder AS l

INNER JOIN customer AS c ON c.C_CUSTKEY = l.LO_CUSTKEY INNER JOIN supplier AS s ON s.S_SUPPKEY = l.LO_SUPPKEY

INNER JOIN part AS p ON p.P_PARTKEY = l.LO_PARTKEY;

数据库ssb_data_source的lineorder_flat产生了30亿条数据作为数据源,在后续的测试步骤中,需要数据从此表导入即可。

5.2 分布式性能提升测试

5.2.1 构建分布式库表

1、进入ClickHouse交互式界面。

clickhouse client -h 192.168.xx.1 --port 19000 -m -n

如有密码则使用:

clickhouse client -h 192.168.xx.1 -u username --password password -m -n

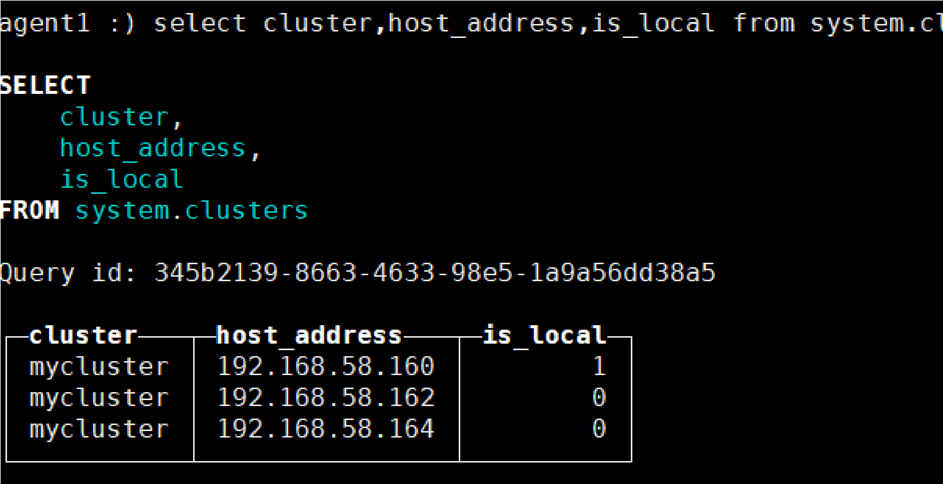

2、查看集群名称。

select cluster,host_address,is_local from system.clusters;

cluster列为集群名称,之后步骤需要多次使用。is_local列为1代表当前所连接节点。

3、在所有节点创建数据库“distributed_test”。mycluster为集群名称,需要替换。

create database distributed_test on cluster mycluster;

4、在所有节点创建本地表“lineorder_flat”。

CREATE TABLE distributed_test.lineorder_flat on cluster mycluster (

`LO_ORDERKEY` UInt32,

`LO_LINENUMBER` UInt8,

`LO_CUSTKEY` UInt32,

`LO_PARTKEY` UInt32,

`LO_SUPPKEY` UInt32,

`LO_ORDERDATE` Date,

`LO_ORDERPRIORITY` LowCardinality(String),

`LO_SHIPPRIORITY` UInt8,

`LO_QUANTITY` UInt8,

`LO_EXTENDEDPRICE` UInt32, `LO_ORDTOTALPRICE` UInt32,

`LO_DISCOUNT` UInt8, `LO_REVENUE` UInt32,

`LO_SUPPLYCOST` UInt32,

`LO_TAX` UInt8,

`LO_COMMITDATE` Date,

`LO_SHIPMODE` LowCardinality(String),

`C_NAME` String,

`C_ADDRESS` String,

`C_CITY` LowCardinality(String),

`C_NATION` LowCardinality(String),

`C_REGION` LowCardinality(String),

`C_PHONE` String,

`C_MKTSEGMENT` LowCardinality(String),

`S_NAME` String,

`S_ADDRESS` String,

`S_CITY` LowCardinality(String),

`S_NATION` LowCardinality(String),

`S_REGION` LowCardinality(String),

`S_PHONE` String,

`P_NAME` String,

`P_MFGR` LowCardinality(String),

`P_CATEGORY` LowCardinality(String),

`P_BRAND` LowCardinality(String),

`P_COLOR` LowCardinality(String),

`P_TYPE` LowCardinality(String),

`P_SIZE` UInt8,

`P_CONTAINER` LowCardinality(String)

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/ finebiUserOper_cluster_23shards_2replicas/{shard}/ distributed.lineorder_flat__sagahgagag', '{replica}')

ORDER BY (LO_ORDERDATE, LO_ORDERKEY)

SETTINGS index_granularity = 8192, use_minimalistic_part_header_in_zookeeper =1, storage_policy = 'default';

5、在所有节点创建分布式表。

CREATE TABLE IF NOT EXISTS distributed_test.lineorder_flat_all ON CLUSTER mycluster AS distributed_test.lineorder_flat

ENGINE = Distributed(mycluster, distributed_test, lineorder_flat, rand());

6、导入数据:从步骤2创建的数据库里向lineorder_flat_all表并导入足量数据。

insert into distributed_test.lineorder_flat_all select * from ssb_data_source.lineorder_flat limit 3000000000;

本次测试导入30亿条数据。

5.2.2 执行OmniMV运行流程

1、清空system.query_log中distributed_test数据库的查询记录。

alter table system.query_log delete where databases=['distributed_test'];

2、执行ssb的sql。

官网上提供了13条SSB数据库的查询SQL。三条没有group by子句,10条有group by子句。需要将其改写为查询分布式表lineorder_flat_all,改写后SQL如下:

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat_all WHERE toYear(LO_ORDERDATE) = 1993 AND LO_DISCOUNT BETWEEN 1 AND 3 AND LO_QUANTITY < 25 limit 100;

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat_all WHERE toYYYYMM(LO_ORDERDATE) = 199401 AND LO_DISCOUNT BETWEEN 4 AND 6 AND LO_QUANTITY BETWEEN 26 AND 35;

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat_all WHERE toISOWeek(LO_ORDERDATE) = 6 AND toYear(LO_ORDERDATE) = 1994 AND LO_DISCOUNT BETWEEN 5 AND 7 AND LO_QUANTITY BETWEEN 26 AND 35;

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat_all WHERE P_CATEGORY = 'MFGR#12' AND S_REGION = 'AMERICA' GROUP BY year, P_BRAND ORDER BY year, P_BRAND;

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat_all WHERE P_BRAND >= 'MFGR#2221' AND P_BRAND <= 'MFGR#2228' AND S_REGION = 'ASIA' GROUP BY year, P_BRAND ORDER BY year, P_BRAND;

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat_all WHERE P_BRAND = 'MFGR#2239' AND S_REGION = 'EUROPE' GROUP BY year, P_BRAND ORDER BY year, P_BRAND;

SELECT C_NATION, S_NATION, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat_all WHERE C_REGION = 'ASIA' AND S_REGION = 'ASIA' AND year >= 1992 AND year <= 1997 GROUP BY C_NATION, S_NATION,year ORDER BY year ASC,revenue DESC;

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat_all WHERE C_NATION = 'UNITED STATES' AND S_NATION = 'UNITED STATES' AND year >= 1992 AND year <= 1997 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC,revenue DESC;

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat_all WHERE (C_CITY = 'UNITED KI1' OR C_CITY = 'UNITED KI5') AND (S_CITY = 'UNITED KI1' OR S_CITY = 'UNITED KI5') AND year >= 1992 AND year <= 1997 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC, revenue DESC;

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat_all WHERE (C_CITY = 'UNITED KI1' OR C_CITY = 'UNITED KI5') AND (S_CITY = 'UNITED KI1' OR S_CITY = 'UNITED KI5') AND toYYYYMM(LO_ORDERDATE) = 199712 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC,revenue DESC;

SELECT toYear(LO_ORDERDATE) AS year, C_NATION, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat_all WHERE C_REGION = 'AMERICA' AND S_REGION = 'AMERICA' AND (P_MFGR = 'MFGR#1' OR P_MFGR = 'MFGR#2') GROUP BY year, C_NATION ORDER BY year ASC, C_NATION ASC;

SELECT toYear(LO_ORDERDATE) AS year, S_NATION, P_CATEGORY, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat_all WHERE C_REGION = 'AMERICA' AND S_REGION = 'AMERICA' AND (year = 1997 OR year = 1998) AND (P_MFGR = 'MFGR#1' OR P_MFGR = 'MFGR#2') GROUP BY year, S_NATION,P_CATEGORY ORDER BY year ASC, S_NATION ASC, P_CATEGORY ASC;

SELECT toYear(LO_ORDERDATE) AS year, S_CITY, P_BRAND, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat_all WHERE S_NATION = 'UNITED STATES' AND (year = 1997 OR year = 1998) AND P_CATEGORY = 'MFGR#14' GROUP BY year, S_CITY, P_BRAND ORDER BY year ASC, S_CITY ASC, P_BRAND ASC;

3、配置OmniMV配置文件,参考配置如下。

[raw_file]

# 数据库名称

database = distributed_test work_path = ./clickhouse

[clickhouse]

ck_host = 192.168.58.168 ck_port = 19000

# 集群名称(只查询本地表可不配置) cluster_name = mycluster num_round = 5

# 是否启用clickhouse projection功能[True or False] enable_projection = False

# 创建和删除projection的超时时间,单位(s)

projection_wait_time = 3600

# 数据压缩比: 视图产生的数据行数/sql查询需要处理的行数read_rows,数据压缩比越小越好 projection_compress_ratio = 0.8

# SQL运行的阈值,小于这个时间的sql不考虑推荐视图,单位(ms) sql_running_time_threshold = 10

# 每张表推荐出来的projection数量的上限

projection_limit_each_table = 10

# 推荐Projection时配置表工作模式,为True,则【clickhouse_tables】只能配置分布式表,为False,则

【clickhouse_tables】只能配置本地表 is_distributed_table = True

# 列举一些重点的表来进行推荐,表之间用逗号分隔。在多表场景下,需要填写all, e.g. clickhouse_tables = all clickhouse_tables = lineorder_flat_all

# 指定从哪个系统表中查询query相关信息,一般本地表会从system.query_log中查询信息,分布式表可能从别的表中获取信息

query_log_table = system.query_log

# (初次提取query)从query_log中对每张表提取query信息,提取到的query需要是指定数据库,指定数据表,运行时间超过配置文件中阈值的query数量

# (增量提取query),增量提取的query数量也是该参数指定

query_limit_each_table = 15

# 在多表场景下,配置提取的query总数量

query_limit_mutil_table = 15

# 是否为包含条件的聚合函数sql推荐projection (例如select sum(multiif..)) [True or False]

# 如果为True:则去除条件表达式后推荐,为False:这条sql不推荐projection projection_with_conditional_functions = False

# 是否需要对新建的projection物化历史的数据,默认不物化历史数据 [True or False] allows_materialize_projection = True

# 指定要删除的Projection名称,使用 "table_name.projection_name"进行指定,table_name必须是本地表表名。多个projection之间使用(英文)逗号分割。

# 如果所有节点都存在此"table_name.projection_name",且都需要删除,需要将【is_distributed_table】配置为True

# 如果不需要删除Projection,请将其置为None,以防止误操作 drop_projections = None

[train]

# 通过对出现过的聚合列进行排列组合,以此来扩大训练数据的数量,这里可以限制 大的扩充的训练sql的数量 max_data_count = 20 epochs = 100 estimators = 50 max_depth = 5 learning_rate = 0.05

[recommend]

# recommend strategy

# options: ['sql', 'compress_ratio']

# 选择compress_ratio表示按照projection压缩比来排序,选择sql,表示按照projection可以匹配sql的数量来排序 candidate_strategy = compress_ratio

4、执行数据库初始化命令。

python main.pyc clickhouse init_database

5、执行提取SQL命令。

python main.pyc clickhouse extract_sqls

虽然配置了每张表提取15条SQL,但清空执行记录后只执行了13条SQL,因此只提取到了13条SQL。

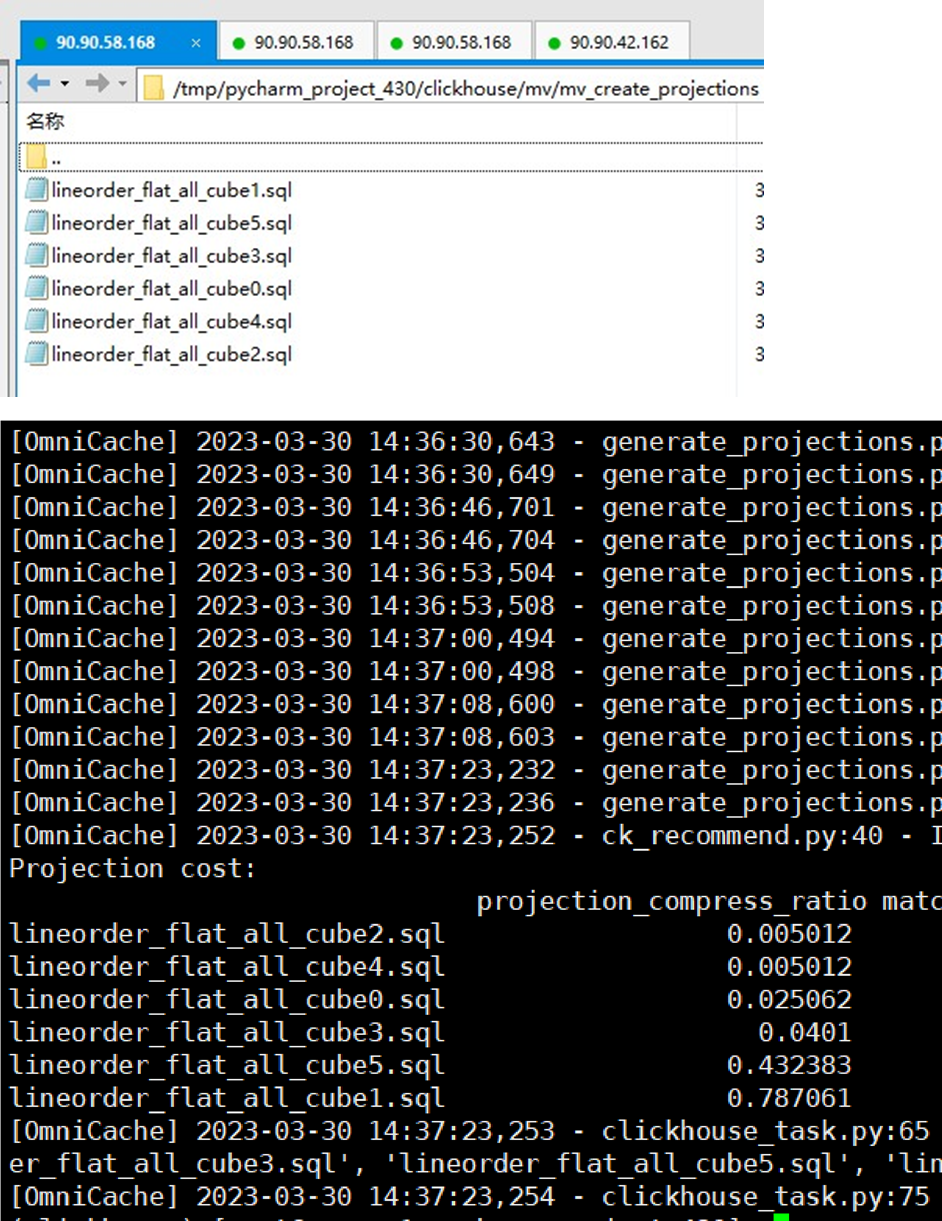

6、执行解析projection命令。

python main.pyc clickhouse generate_views

产生6条projections。

7、创建projections。

python main.pyc clickhouse create_projections

在各个节点的本地表lineorder_flat上均创建了视图。

8、关闭projection,测试13条SQL运行时间。

1)将以下内容写入measure_performance.sh脚本中。在使用时,需要将脚本中的IP地址替换成实际的。

#!/bin/bash

# 获取要执行的SQL语句目录

sql_dir=$1 set_allow=$2

# 判断目录是否存在

if [ ! -d $sql_dir ]; then

echo "The specified directory does not exist." exit 1

fi

# 定义clickhouse命令行工具路径

clickhouse_client="/usr/bin/clickhouse-client -h 192.168.xx.1 --port 19000 -m -n -d distributed_test -allow_experimental_projection_optimization $set_allow"

# 获取目录下所有的.sql文件

sql_files=$(ls $sql_dir/*.sql)

# 循环执行每个SQL文件

for sql_file in $sql_files do

# 清理所有节点服务器缓存

ssh agent1 "echo 3 > /proc/sys/vm/drop_caches" ssh agent2 "echo 3 > /proc/sys/vm/drop_caches" ssh agent3 "echo 3 > /proc/sys/vm/drop_caches" echo "Executing SQL file: $sql_file"

start=$(date +%s.%N) # 记录开始时间 # 使用clickhouse命令执行SQL文件, 执行5次 for i in {1..5} do

echo "执行:$sql_file"

$clickhouse_client < $sql_file >> /dev/null done

end=$(date +%s.%N) # 记录结束时间

avg_time=`awk -v x=$end -v y=$start 'BEGIN{printf "%.4f\n",(x-y)/5}'` echo "$sql_file五次平均执行时间为:$avg_time 秒" done

echo "All SQL files executed."

2)执行脚本。

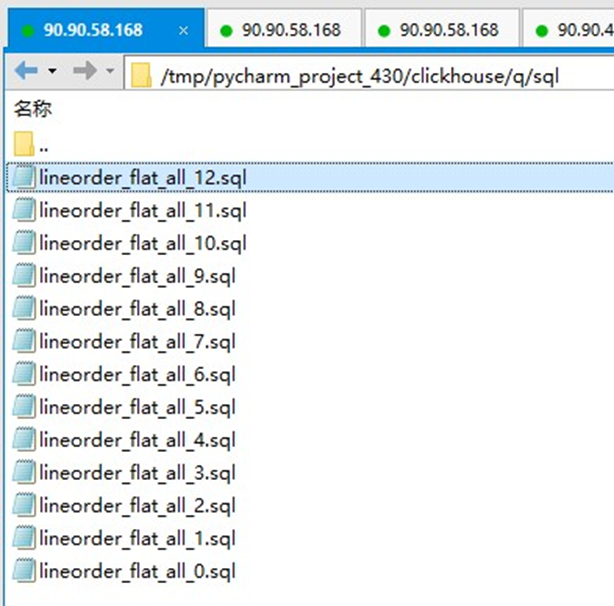

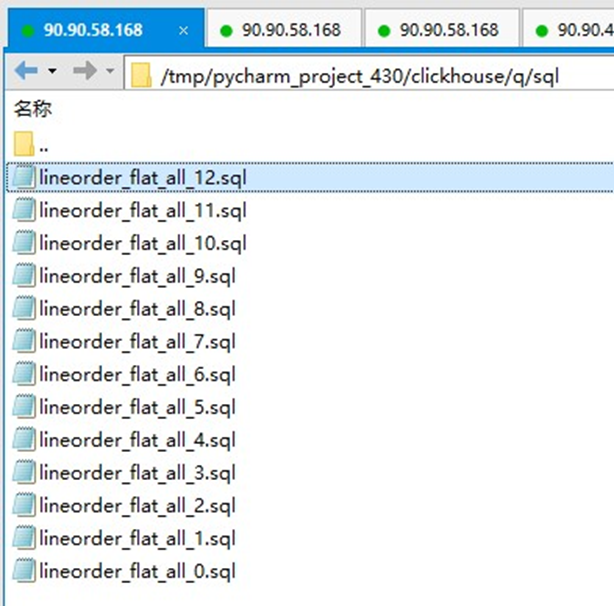

sh measure_performance.sh /tmp/pycharm_project_430/clickhouse 0

“/tmp/pycharm_project_430/clickhouse”参数为SQL文件夹路径,第二个参数表示是否启用projection,“0”表示禁用,“1”表示启用。SQL文件夹路径在配置文件中的work_path下的“/q/sql”中。

执行脚本输出为:

lineorder_flat_all_0.sql五次平均执行时间为:0.4203 秒

lineorder_flat_all_10.sql五次平均执行时间为:20.9677 秒

lineorder_flat_all_11.sql五次平均执行时间为:7.4551 秒

lineorder_flat_all_12.sql五次平均执行时间为:7.0748 秒

lineorder_flat_all_1.sql五次平均执行时间为:13.5604 秒

lineorder_flat_all_2.sql五次平均执行时间为:17.4962 秒

lineorder_flat_all_3.sql五次平均执行时间为:16.0823 秒

lineorder_flat_all_4.sql五次平均执行时间为:0.2616 秒

lineorder_flat_all_5.sql五次平均执行时间为:0.3689 秒

lineorder_flat_all_6.sql五次平均执行时间为:2.5517 秒

lineorder_flat_all_7.sql五次平均执行时间为:13.1876 秒

lineorder_flat_all_8.sql五次平均执行时间为:13.3727 秒

lineorder_flat_all_9.sql五次平均执行时间为:15.5257 秒

3)开启projection,测试13条SQL运行时间。

sh measure_performance.sh /tmp/pycharm_project_430/clickhouse 1

脚本输出为:

lineorder_flat_all_0.sql五次平均执行时间为:0.1874 秒

lineorder_flat_all_10.sql五次平均执行时间为:0.2092 秒

lineorder_flat_all_11.sql五次平均执行时间为:8.1524 秒

lineorder_flat_all_12.sql五次平均执行时间为:1.0646 秒

lineorder_flat_all_1.sql五次平均执行时间为:2.3531 秒

lineorder_flat_all_2.sql五次平均执行时间为:6.4758 秒

lineorder_flat_all_3.sql五次平均执行时间为:2.4112 秒

lineorder_flat_all_4.sql五次平均执行时间为:0.2446 秒

lineorder_flat_all_5.sql五次平均执行时间为:0.3683 秒

lineorder_flat_all_6.sql五次平均执行时间为:2.5895 秒

lineorder_flat_all_7.sql五次平均执行时间为:0.1433 秒

lineorder_flat_all_8.sql五次平均执行时间为:0.1555 秒

lineorder_flat_all_9.sql五次平均执行时间为:0.7408 秒

5.2.3 数据分析

|

SQL |

无projection执行时间(秒) |

启用projection执行时间(秒) |

启用Projection,匹配视图号 |

性能提升 |

|

lineorder_flat_al l_0.sql |

0.4203 |

0.1874 |

lineorder_flat_a ll_cube5 |

124.27 96% |

|

lineorder_flat_al l_10.sql |

20.9677 |

0.2092 |

lineorder_flat_a ll_cube2 |

9922.8 011% |

|

lineorder_flat_al l_11.sql |

7.4551 |

8.1524 | - |

-8.5533 % |

|

lineorder_flat_al l_12.sql |

7.0748 |

1.0646 |

lineorder_flat_a ll_cube0 |

564.55 01% |

|

lineorder_flat_al l_1.sql |

13.5604 |

2.3531 |

lineorder_flat_a ll_cube5 |

476.27 81% |

|

lineorder_flat_al l_2.sql |

17.4962 |

6.4758 |

lineorder_flat_a ll_cube5 |

170.17 82% |

|

lineorder_flat_al l_3.sql |

16.0823 |

2.4112 |

lineorder_flat_a ll_cube4 |

566.98 32% |

|

lineorder_flat_al l_4.sql |

0.2616 |

0.2446 | - |

6.9501 % |

|

lineorder_flat_al l_5.sql |

0.3689 |

0.3683 | - |

0.1629 % |

|

lineorder_flat_al l_6.sql |

2.5517 |

2.5895 | - |

-1.4597 % |

|

lineorder_flat_al l_7.sql |

13.1876 |

0.1433 |

lineorder_flat_a ll_cube3 |

9102.7 913% |

|

lineorder_flat_al l_8.sql |

13.3727 |

0.1555 |

lineorder_flat_a ll_cube3 |

8499.8 071% |

|

lineorder_flat_al l_9.sql |

15.5257 |

0.7408 |

lineorder_flat_a ll_cube3 |

1995.8 018% |

|

总计 |

128.325 |

25.0957 | - |

411.34 26% |

-

lineorder_flat_all_4.sql、lineorder_flat_all_5.sql、lineorder_flat_all_6.sql三条SQL没有group by子句,未推荐projection。

-

lineorder_flat_all_11.sql未查询超过一半的part,ClickHouse不为其匹配projection。

OmnicCache推荐的视图顺序:排在前面优先级高

["lineorder_flat_all_cube2.sql", "lineorder_flat_all_cube4.sql",

"lineorder_flat_all_cube0.sql", "lineorder_flat_all_cube3.sql",

"lineorder_flat_all_cube5.sql", "lineorder_flat_all_cube1.sql"]

视图额外存储空间:

|

视图号 |

server1存储空间(MiB) |

agent1存储空间(MiB) |

agent2存储空间(MiB) |

总计 |

占比 |

|

lineorder_flat_ all_cube0 |

151.09 |

135.17 |

148.42 |

434.68 |

0.1 3% |

|

lineorder_flat_ all_cube1 |

3921.92 |

3819.52 |

3911.68 |

11653. 12 |

3.4 2% |

|

lineorder_flat_ all_cube2 |

32.76 |

28.47 |

31.95 |

93.18 |

0.0 3% |

|

lineorder_flat_ all_cube3 |

229.84 |

206.72 |

226.25 |

662.81 |

0.1 9% |

|

lineorder_flat_ all_cube4 |

33.04 |

28.75 |

32.22 |

94.01 |

0.0 3% |

|

lineorder_flat_ all_cube5 |

1761.28 |

1638.4 |

1740.8 |

5140.4 8 |

1.5 1% |

5.3 单机性能提升测试

1、将以下SQL语句(查询本地表lineorder_flat)分别写入.sql文件中,并放置于“/tmp/sqls”目录下。

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat WHERE toYear(LO_ORDERDATE) = 1993 AND LO_DISCOUNT BETWEEN 1 AND 3 AND LO_QUANTITY < 25 limit

100;--q1

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat WHERE toYYYYMM(LO_ORDERDATE) = 199401 AND LO_DISCOUNT BETWEEN 4 AND 6 AND LO_QUANTITY BETWEEN 26 AND 35;--q2

SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue FROM lineorder_flat WHERE toISOWeek(LO_ORDERDATE) = 6 AND toYear(LO_ORDERDATE) = 1994 AND LO_DISCOUNT BETWEEN 5 AND 7 AND LO_QUANTITY BETWEEN 26 AND 35;--q3

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat WHERE P_CATEGORY = 'MFGR#12' AND S_REGION = 'AMERICA' GROUP BY year, P_BRAND ORDER BY year,

P_BRAND;--q4

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat WHERE P_BRAND >= 'MFGR#2221' AND P_BRAND <= 'MFGR#2228' AND S_REGION = 'ASIA' GROUP BY year, P_BRAND ORDER BY year, P_BRAND;--q5

SELECT sum(LO_REVENUE), toYear(LO_ORDERDATE) AS year, P_BRAND FROM lineorder_flat WHERE P_BRAND = 'MFGR#2239' AND S_REGION = 'EUROPE' GROUP BY year, P_BRAND ORDER BY year,

P_BRAND;--q6

SELECT C_NATION, S_NATION, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat WHERE C_REGION = 'ASIA' AND S_REGION = 'ASIA' AND year >= 1992 AND year <= 1997 GROUP BY C_NATION, S_NATION,year ORDER BY year ASC,revenue DESC;--q7

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat WHERE C_NATION = 'UNITED STATES' AND S_NATION = 'UNITED STATES' AND year >= 1992 AND year <= 1997 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC,revenue DESC;--q8

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat WHERE (C_CITY = 'UNITED KI1' OR C_CITY = 'UNITED KI5') AND (S_CITY = 'UNITED KI1' OR S_CITY = 'UNITED KI5') AND year >= 1992 AND year <= 1997 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC, revenue DESC;--q9

SELECT C_CITY, S_CITY, toYear(LO_ORDERDATE) AS year, sum(LO_REVENUE) AS revenue FROM lineorder_flat WHERE (C_CITY = 'UNITED KI1' OR C_CITY = 'UNITED KI5') AND (S_CITY = 'UNITED KI1' OR S_CITY = 'UNITED KI5') AND toYYYYMM(LO_ORDERDATE) = 199712 GROUP BY C_CITY, S_CITY, year ORDER BY year ASC,revenue DESC;--q10

SELECT toYear(LO_ORDERDATE) AS year, C_NATION, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat WHERE C_REGION = 'AMERICA' AND S_REGION = 'AMERICA' AND (P_MFGR =

'MFGR#1' OR P_MFGR = 'MFGR#2') GROUP BY year, C_NATION ORDER BY year ASC, C_NATION ASC;--q11

SELECT toYear(LO_ORDERDATE) AS year, S_NATION, P_CATEGORY, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat WHERE C_REGION = 'AMERICA' AND S_REGION = 'AMERICA' AND (year = 1997 OR year = 1998) AND (P_MFGR = 'MFGR#1' OR P_MFGR = 'MFGR#2') GROUP BY year, S_NATION,P_CATEGORY ORDER BY year ASC, S_NATION ASC, P_CATEGORY ASC;--q12

SELECT toYear(LO_ORDERDATE) AS year, S_CITY, P_BRAND, sum(LO_REVENUE - LO_SUPPLYCOST) AS profit FROM lineorder_flat WHERE S_NATION = 'UNITED STATES' AND (year = 1997 OR year = 1998) AND P_CATEGORY = 'MFGR#14' GROUP BY year, S_CITY, P_BRAND ORDER BY year ASC, S_CITY ASC, P_BRAND ASC;--q13

如下图所示:

因为分布式性能测试中的每个节点的distributed_test.lineorder_flat都有10亿行数据,且构建了projection。这里直接使用一台节点进行单机性能提升测试。

2、测试关闭projection的执行时间。

sh measure_performance.sh /tmp/sqls 0

脚本输出为:

/tmp/sqls/lineorder_flat_10.sql五次平均执行时间为:0.4130 秒

/tmp/sqls/lineorder_flat_11.sql五次平均执行时间为:21.5681 秒

/tmp/sqls/lineorder_flat_12.sql五次平均执行时间为:6.8846 秒

/tmp/sqls/lineorder_flat_13.sql五次平均执行时间为:7.2954 秒

/tmp/sqls/lineorder_flat_1.sql五次平均执行时间为:2.4973 秒

/tmp/sqls/lineorder_flat_2.sql五次平均执行时间为:0.3634 秒

/tmp/sqls/lineorder_flat_3.sql五次平均执行时间为:0.1863 秒

/tmp/sqls/lineorder_flat_4.sql五次平均执行时间为:15.3781 秒

/tmp/sqls/lineorder_flat_5.sql五次平均执行时间为:13.3549 秒

/tmp/sqls/lineorder_flat_6.sql五次平均执行时间为:13.7589 秒

/tmp/sqls/lineorder_flat_7.sql五次平均执行时间为:15.3541 秒

/tmp/sqls/lineorder_flat_8.sql五次平均执行时间为:16.7528 秒

/tmp/sqls/lineorder_flat_9.sql五次平均执行时间为:12.5798 秒

3、测试开启projection的执行时间。

sh measure_performance.sh /tmp/sqls 1

脚本输出为:

/tmp/sqls/lineorder_flat_10.sql五次平均执行时间为:0.1653 秒

/tmp/sqls/lineorder_flat_11.sql五次平均执行时间为:0.1974 秒

/tmp/sqls/lineorder_flat_12.sql五次平均执行时间为:0.7566 秒

/tmp/sqls/lineorder_flat_13.sql五次平均执行时间为:7.6826 秒

/tmp/sqls/lineorder_flat_1.sql五次平均执行时间为:2.4996 秒

/tmp/sqls/lineorder_flat_2.sql五次平均执行时间为:0.3270 秒

/tmp/sqls/lineorder_flat_3.sql五次平均执行时间为:0.1747 秒

/tmp/sqls/lineorder_flat_4.sql五次平均执行时间为:0.6892 秒

/tmp/sqls/lineorder_flat_5.sql五次平均执行时间为:0.1150 秒

/tmp/sqls/lineorder_flat_6.sql五次平均执行时间为:0.1025 秒

/tmp/sqls/lineorder_flat_7.sql五次平均执行时间为:1.1884 秒

/tmp/sqls/lineorder_flat_8.sql五次平均执行时间为:5.1712 秒

/tmp/sqls/lineorder_flat_9.sql五次平均执行时间为:1.2678 秒

数据分析:

|

SQL |

无projection执行时间(秒) |

启用projection执行时间(秒) |

启用Projection,匹配视图号 |

性能提升 |

|

lineorder_flat_1.sql |

2.4973 |

2.4996 | - |

-0.0920 % |

|

lineorder_flat_2.sql |

0.3634 |

0.327 | - |

11.131 5% |

|

lineorder_flat_3.sql |

0.1863 |

0.1747 | - |

6.6400 % |

|

lineorder_flat_4.sql |

15.3781 |

0.6892 |

lineorder_flat_a ll_cube3 |

2131.2 972% |

|

lineorder_flat_5.sql |

13.3549 |

0.115 |

lineorder_flat_a ll_cube3 |

11512. 9565% |

|

lineorder_flat_6.sql |

13.7589 |

0.1025 |

lineorder_flat_a ll_cube3 |

13323. 3171% |

|

lineorder_flat_7.sql |

15.3541 |

1.1884 |

lineorder_flat_a ll_cube4 |

1191.9 976% |

|

lineorder_flat_8.sql |

16.7528 |

5.1712 |

lineorder_flat_a ll_cube5 |

223.96 35% |

|

lineorder_flat_9.sql |

12.5798 |

1.2678 |

lineorder_flat_a ll_cube5 |

892.25 43% |

|

lineorder_flat_10.sql |

0.413 |

0.1653 |

lineorder_flat_a ll_cube5 |

149.84 88% |

|

lineorder_flat_11.sql |

21.5681 |

0.1974 |

lineorder_flat_a ll_cube2 |

10826. 0892% |

|

lineorder_flat_12.sql |

6.8846 |

0.7566 |

lineorder_flat_a ll_cube0 |

809.93 92% |

|

lineorder_flat_13.sql |

7.2954 |

7.6826 | - |

-5.0400 % |

|

总计 |

126.3867 |

20.3373 | - |

521.45 27% |

lineorder_flat_1.sql、lineorder_flat_2.sql、lineorder_flat_3.sql三条SQL没有group by子句,未推荐projection。lineorder_flat_13.sql未查询超过一半的part,ClickHouse不为其匹配projection。

6 CBG数据集测试

6.1 准备测试数据

请使用以下脚本生成30亿行数据,其中变量w控制生成行数,单位为万行。

#!/usr/bin/env python

# -*- coding: utf-8 -*

import random

import time

import csv

import io

from multiprocessing import Process

def writelog(fileName,count,num):

tuparr=[]

while count < num:

with io.open(fileName,mode='a+') as f:

dateTime = time.strftime('%Y-%m-%d',time.localtime(random.randint(1635523200, 1635609600)))

appNameNew = []

appNameNew.append('维修受理完成时间'+str(random.randint(0,100)))

appNameNew.append('激活日期'+str(random.randint(0,1000)))

appNameNew.append('上市月份'+str(random.randint(0,1000)))

appNameNew.append('设备品牌'+str(random.randint(0,100)))

appNameNew.append('产品线'+str(random.randint(0,100)))

appNameNew.append('产品线设备系列名称'+str(random.randint(0,100)))

appNameNew.append('产品线new'+str(random.randint(0,100)))

appNameNew.append('SPDT'+str(random.randint(0,100000)))

appNameNew.append('标准设备系列名称'+str(random.randint(0,1000)))

appNameNew.append(random.choice(['HUAWEI MATE 10','HUAWEI MATE 10 PRO','HUAWEI MATE 10 保时捷设计','HUAWEI MATE 20','HUAWEI MATE 20 PRO','HUAWEI MATE 20 PRO (UD','HUAWEI MATE 20 RS 保时捷设计','HUAWEI MATE 20 X','HUAWEI MATE 20 X (5G','HUAWEI MATE 30','HUAWEI MATE 30 5G','HUAWEI MATE 30 PRO','HUAWEI MATE 30 PRO 5G','HUAWEI MATE 30 RS 保时捷设计','HUAWEI MATE 40','HUAWEI MATE 40 PRO','HUAWEI MATE 40 PRO+','HUAWEI MATE 40 PRO 4G','HUAWEI MATE 40 RS 保时捷设计','HUAWEI MATE 40 RS 保时捷设计 典藏版','HUAWEI NOVA 4','HUAWEI NOVA 4E','HUAWEI NOVA 5','HUAWEI NOVA 5I','HUAWEI NOVA 5I PRO','HUAWEI NOVA 5 PRO','HUAWEI NOVA 5Z','HUAWEI NOVA 6','HUAWEI NOVA 6 (5G','HUAWEI NOVA 6 SE','HUAWEI NOVA 7 5G','HUAWEI NOVA 7 PRO 5G','HUAWEI NOVA 7 PRO 5G 活力版','HUAWEI NOVA 7 SE 5G','HUAWEI NOVA 7 SE 5G 乐活版','HUAWEI NOVA 7 SE 5G 活力版','HUAWEI NOVA 8','HUAWEI NOVA 8 PRO','HUAWEI NOVA 8 PRO 4G','HUAWEI NOVA 8 SE','HUAWEI NOVA 8 SE 活力版','HUAWEI NOVA 9','HUAWEI NOVA 9 PRO','HUAWEI P20','HUAWEI P20 PRO','HUAWEI P30','HUAWEI P30 PRO','HUAWEI P40 4G','HUAWEI P40 5G','HUAWEI P40 PRO','HUAWEI P40 PRO+','HUAWEI P50','HUAWEI P50 PRO 4G']))

appNameNew.append('设备EMUI版本'+str(random.randint(0,100)))

appNameNew.append('设备价格范围'+str(random.randint(0,100)))

appNameNew.append(random.choice(["32GB","64GB","128GB","256GB"]))

appNameNew.append(random.choice(["32GB","64GB","128GB","256GB"]))

appNameNew.append(random.choice(["红","绿","蓝","黑"]))

appNameNew.append('屏幕尺寸'+str(random.randint(0,10)))

appNameNew.append('分辨率'+str(random.randint(0,10)))

appNameNew.append('海外机型标志'+str(random.randint(0,100)))

appNameNew.append('老旧机型标志'+str(random.randint(0,100)))

appNameNew.append(random.choice(["男","女"]))

appNameNew.append('年龄范围'+str(random.randint(0,100)))

appNameNew.append('职业'+str(random.randint(0,1000)))

appNameNew.append('常驻省份'+str(random.randint(0,100)))

appNameNew.append('常驻城市'+str(random.randint(0,1000)))

appNameNew.append('常驻城市dengji'+str(random.randint(0,1000)))

appNameNew.append(random.choice(["1个月","2个月","3个月","4个月","5个月","6个月","7个月","8个月","9个月","10个月","11个月","12个月","13个月","14个月","15个月","16个月","17个月","18个月","19个月","20 个月","21个月","22个月","23个月","24个月","25个月","26个月","27个月","28个月","29个月","30个月","31个月","32个月","33个月","34个月","35个月","36个月","37个月","38个月","39个月","40个月","41个月","42个月","43个月","44个月","45个月","46个月","47个月","48个月"]))

appNameNew.append(random.choice(["1个月","2个月","3个月","4个月","5个月","6个月","7个月","8个月","9个月","10个月","11个月","12个月","13个月","14个月","15个月","16个月","17个月","18个月","19个月","20 个月","21个月","22个月","23个月","24个月","25个月","26个月","27个月","28个月","29个月","30个月","31个月","32个月","33个月","34个月","35个月","36个月","37个月","38个月","39个月","40个月","41个月","42个月","43个月","44个月","45个月","46个月","47个月","48个月"]))

appNameNew.append('剩余RAM分段'+str(random.randint(0,50)))

appNameNew.append('剩余ROM分段'+str(random.randint(0,50)))

appNameNew.append('卡顿程度'+str(random.randint(0,10)))

appNameNew.append('容量'+str(random.randint(100,120)))

appNameNew.append('衰减表只'+str(random.randint(0,3)))

appNameNew.append('老化标志'+str(random.randint(0,10)))

appNameNew.append('充电圈数区间'+str(random.randint(50,70)))

appNameNew.append('电池损耗区间'+str(random.randint(50,70)))

appNameNew.append('故障维修次数'+str(random.randint(0,10)))

appNameNew.append('故障维修方法'+str(random.randint(0,10)))

appNameNew.append('服务'+str(random.randint(1,10)))

appNameNew.append('插卡'+str(random.randint(1,10)))

appNameNew.append('开通'+str(random.randint(0,100)))

appNameNew.append(random.randint(0,1000))

appNameNew.append(random.randint(0,1000))

appNameNew.append(random.randint(0,1000))

appNameNew.append(random.randint(0,1000))

appNameNew.append(random.randint(0,1000))

appNameNew.append('年龄来源'+str(random.randint(0,100)))

appNameNew.append('性别来源'+str(random.randint(0,100)))

appNameNew.append('高可信度标志'+str(random.randint(0,100)))

appNameNew.append(dateTime)

tuparr.append(appNameNew)

#print(tuparr)

if(count%10000==0):

writeData = csv.writer(f)

writeData.writerows(tuparr)

tuparr=[]

count+=1

Processlist =[]

w = 300000

num = (2000*w) + 10

s = 0

t1 = Process(target=writelog, args=['dws1.txt', s + 1, s + num])

t2 = Process(target=writelog, args=['dws2.txt', s + 1, s + num])

t3 = Process(target=writelog, args=['dws3.txt', s + 1, s + num])

t4 = Process(target=writelog, args=['dws4.txt', s + 1, s + num])

t5 = Process(target=writelog, args=['dws5.txt', s + 1, s + num])

Processlist.append(t1)

Processlist.append(t2)

Processlist.append(t3)

Processlist.append(t4)

Processlist.append(t5)

if __name__ == '__main__':

for t in Processlist:

t.start()

6.2 分布式性能提升测试

6.2.1 构建分布式库表

1、在distributed_test中创建本地表dws_useroper_device_persona_mlb_ds。

CREATE TABLE distributed_test.dws_useroper_device_persona_mlb_ds on cluster mycluster

(

`sr_accept_date` Nullable(String) COMMENT '维修受理完成时间',

`activate_date` Nullable(String) COMMENT '激活日期',

`device_launch_month` Nullable(String) COMMENT '上市月份',

`device_brand` Nullable(String) COMMENT '设备品牌',

`product_line` Nullable(String) COMMENT '产品线',

`product_line_series_name` Nullable(String) COMMENT '产品线设备系列名称',

`new_product_line` Nullable(String) COMMENT '产品线(新)',

`new_product_line_series_name` Nullable(String) COMMENT 'SPDT(新)',

`std_series_name` Nullable(String) COMMENT '标准设备系列名称',

`device_prod_sprdname` Nullable(String) COMMENT '设备产品传播名',

`device_emui_ver` Nullable(String) COMMENT '设备EMUI版本',

`device_price_scope` Nullable(String) COMMENT '设备价格范围',

`rom` Nullable(String) COMMENT 'ROM(GB)',

`ram` Nullable(String) COMMENT 'RAM(GB)',

`device_color` Nullable(String) COMMENT '设备颜色',

`screen_size` Nullable(String) COMMENT '屏幕尺寸',

`resolution` Nullable(String) COMMENT '分辨率',

`ovsea_model_flg` Nullable(String) COMMENT '海外机型标志',

`old_model_flg` Nullable(String) COMMENT '老旧机型标志',

`forecast_gender` Nullable(String) COMMENT '性别',

`forecast_agerange` Nullable(String) COMMENT '年龄范围',

`career` Nullable(String) COMMENT '职业',

`residence_prov` Nullable(String) COMMENT '常驻省份',

`residence_city` Nullable(String) COMMENT '常驻城市',

`residence_city_level` Nullable(String) COMMENT '常驻城市等级',

`device_launch_duration` Nullable(String) COMMENT '设备上市时长(第几个月)',

`usage_duration_segment` Nullable(String) COMMENT '用机时长分段',

`remain_ram_segment` Nullable(String) COMMENT '剩余RAM分段',

`remain_rom_segment` Nullable(String) COMMENT '剩余ROM分段',

`stop_degree_flg` Nullable(String) COMMENT '卡顿程度',

`battery_volume` Nullable(String) COMMENT '电池设计容量(mAh)',

`battery_atnuatn_flg` Nullable(String) COMMENT '电池衰减标志',

`battery_aging_flg` Nullable(String) COMMENT '电池老化标志',

`charge_laps_range` Nullable(String) COMMENT '充电圈数区间',

`battery_loss_range` Nullable(String) COMMENT '电池损耗区间',

`fault_maint_cnt` Nullable(String) COMMENT '故障维修次数',

`maint_method` Nullable(String) COMMENT '故障类维修方法',

`service_solution_name` Nullable(String) COMMENT '服务交付方案名称',

`plug_card_flg` Nullable(String) COMMENT '插卡标志',

`open_user_experi_plan_flg` Nullable(String) COMMENT '开通用户体验计划标志',

`day_new_device_cnt` Nullable(Int64) COMMENT '日增设备数',

`day_active_device_cnt` Nullable(Int64) COMMENT '日活设备数',

`day7_active_device_cnt` Nullable(Int64) COMMENT '近7天活跃设备数',

`day30_active_device_cnt` Nullable(Int64) COMMENT '近30天活跃设备数',

`week_active_device_cnt` Nullable(Int64) COMMENT '当周活跃设备数',

`age_src` Nullable(String) COMMENT '年龄来源',

`gender_src` Nullable(String) COMMENT '性别来源',

`high_credible_flg` Nullable(String) COMMENT '高可信度标志',

`pt_d` Date DEFAULT today() COMMENT '天分区'

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/finebiUserOper_cluster_23shards_2replicas/{shard}/ UserOper_local.dws_useroper_device_persona_mlb_ds_001', '{replica}') PARTITION BY toYYYYMMDD(pt_d)

ORDER BY tuple()

SETTINGS index_granularity = 8192, use_minimalistic_part_header_in_zookeeper = 1, storage_policy = 'default';

2、在所有节点的distributed_test中创建分布式表dws_useroper_device_persona_mlb_ds_all。

CREATE TABLE IF NOT EXISTS

distributed_test.dws_useroper_device_persona_mlb_ds_all ON CLUSTER mycluster AS distributed_test.dws_useroper_device_persona_mlb_ds

ENGINE = Distributed(mycluster, distributed_test, dws_useroper_device_persona_mlb_ds, rand());

3、导入数据进入测试数据准备目录执行。

cat ./dws[1-5].txt| clickhouse --client -h 192.168.58.168 --port 19000 -format_csv_delimiter="," --query="INSERT INTO distributed_test.dws_useroper_device_persona_mlb_ds_all FORMAT CSV";

6.2.2 执行OmniMV运行流程

1、清空system.query_log中distributed_test数据库的查询记录。

alter table system.query_log delete where databases=['distributed_test'];

2、执行cbg的sql。

CBG提供了25条查询SQL。需要将其改写为查询分布式表。

例如dws_useroper_device_persona_mlb_ds_all,改写后SQL如下附件cbg_sqls_distributed_table.txt。

3、配置OmniMV配置文件参考配置如下。

[raw_file]

# 数据库名称

database = distributed_test work_path = ./clickhouse

[clickhouse]

ck_host = 192.168.58.168 ck_port = 19000

# 集群名称(只查询本地表可不配置)

cluster_name = mycluster num_round = 5

# 是否启用clickhouse projection功能[True or False] enable_projection = False

# 创建和删除projection的超时时间,单位(s)

projection_wait_time = 3600

# 数据压缩比: 视图产生的数据行数/sql查询需要处理的行数read_rows,数据压缩比越小越好 projection_compress_ratio = 0.8

# SQL运行的阈值,小于这个时间的sql不考虑推荐视图,单位(ms) sql_running_time_threshold = 1

# 每张表推荐出来的projection数量的上限

projection_limit_each_table = 10

# 推荐Projection时配置表工作模式,为True,则【clickhouse_tables】只能配置分布式表,为False,则

【clickhouse_tables】只能配置本地表 is_distributed_table = True

# 列举一些重点的表来进行推荐,表之间用逗号分隔。在多表场景下,需要填写all, e.g. clickhouse_tables = all

clickhouse_tables = dws_useroper_device_persona_mlb_ds_all

# 指定从哪个系统表中查询query相关信息,一般本地表会从system.query_log中查询信息,分布式表可能从别的表中获取信息

query_log_table = system.query_log

# (初次提取query)从query_log中对每张表提取query信息,提取到的query需要是指定数据库,指定数据表,运行时间超过配置文件中阈值的query数量

# (增量提取query),增量提取的query数量也是该参数指定 query_limit_each_table = 30

# 在多表场景下,配置提取的query总数量 query_limit_mutil_table = 15

# 是否为包含条件的聚合函数sql推荐projection (例如select sum(multiif..)) [True or False]

# 如果为True:则去除条件表达式后推荐,为False:这条sql不推荐projection projection_with_conditional_functions = False

# 是否需要对新建的projection物化历史的数据,默认不物化历史数据 [True or False] allows_materialize_projection = True

# 指定要删除的Projection名称,使用 "table_name.projection_name"进行指定,table_name只能是本地表。多个projection之间使用(英文)逗号分割。

# 如果所有节点都存在此"table_name.projection_name",且都需要删除,需要将【is_distributed_table】配置为True

# 如果不需要删除Projection,请将其置为None,以防止误操作 drop_projections = None

[train]

# 通过对出现过的聚合列进行排列组合,以此来扩大训练数据的数量,这里可以限制 大的扩充的训练sql的数量 max_data_count = 20 epochs = 100 estimators = 50 max_depth = 5 learning_rate = 0.05 [recommend]

# recommend strategy

# options: ['sql', 'compress_ratio']

# 选择compress_ratio表示按照projection压缩比来排序,选择sql,表示按照projection可以匹配sql的数量来排序 candidate_strategy = compress_ratio

4、执行数据库初始化命令。

python main.pyc clickhouse init_database

5、执行提取SQL命令。

python main.pyc clickhouse extract_sqls

虽然配置了每张表提取30条SQL,但清空执行记录后只执行了25条SQL,因此只提取到了25条SQL。

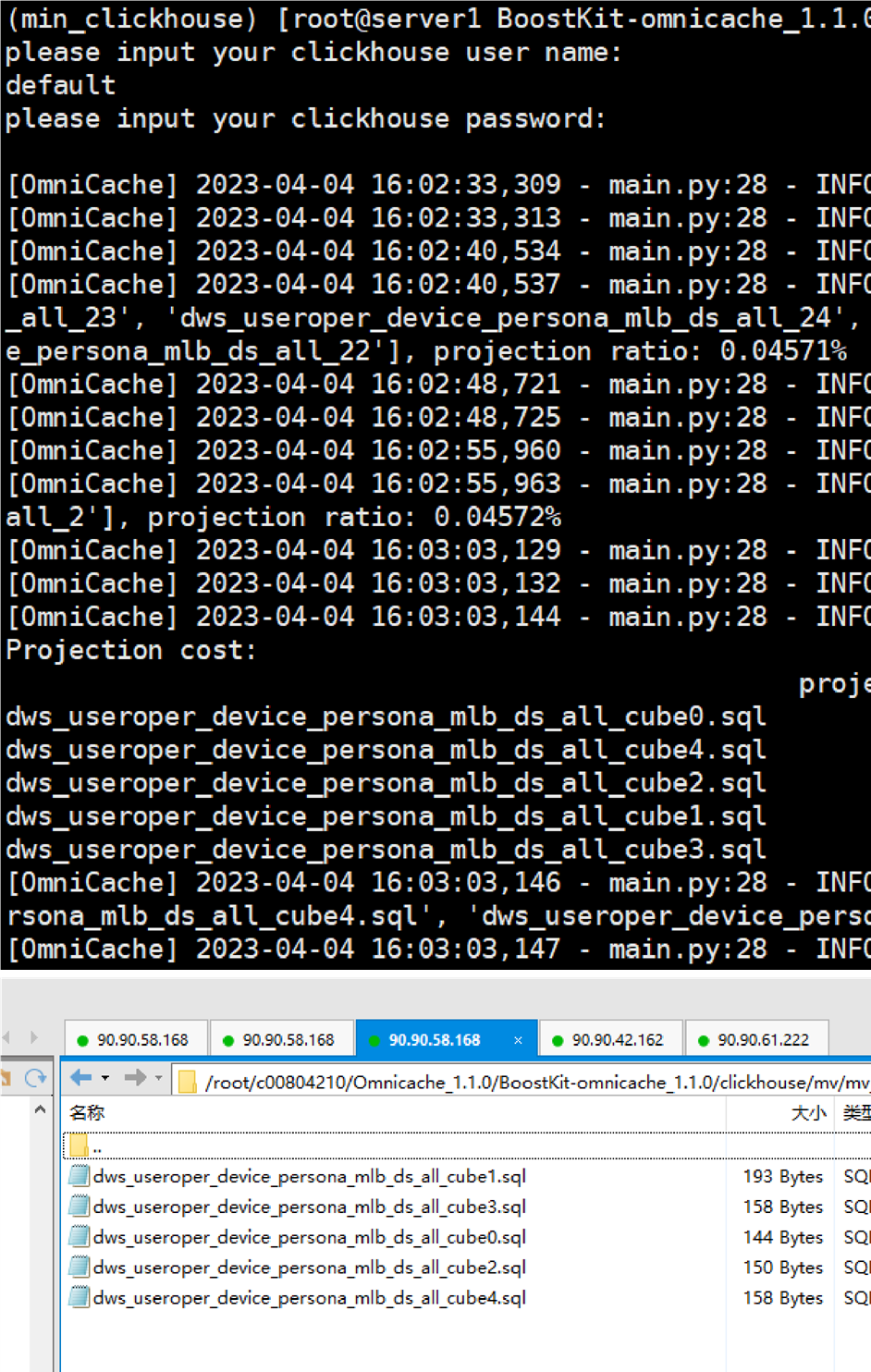

6、执行解析projection命令。

python main.pyc clickhouse generate_views

产生5条projections:

7、创建projections。

python main.pyc clickhouse create_projections

在各个节点的本地表lineorder_flat上均创建了视图。

8、关闭projection,测试25条SQL运行时间。

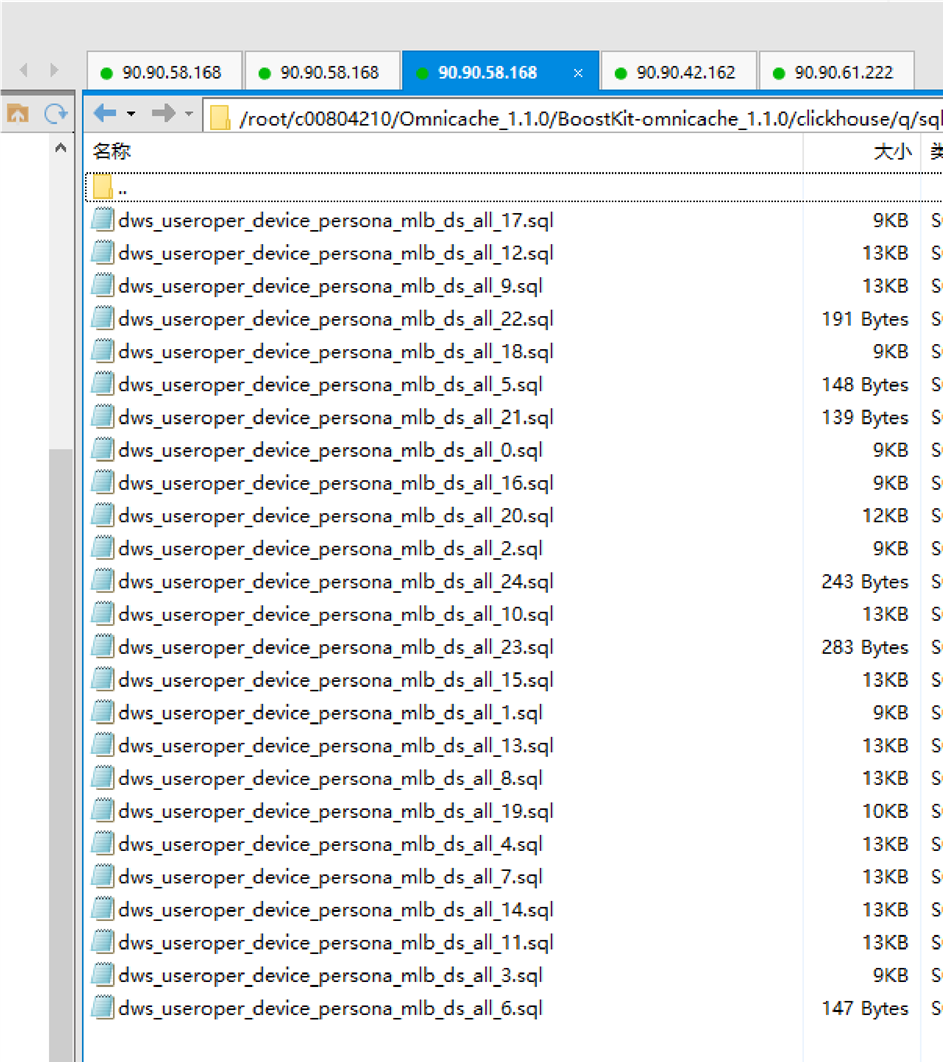

sh measure_performance.sh /tmp/pycharm_project_430/clickhouse 0

/“tmp/pycharm_project_430/clickhouse”参数为sql文件夹路径,第二个参数为是否启用projection,“0”表示禁用,“1”表示启用。sql文件夹路径在配置文件中的work_path下的“/q/sql”中。

脚本输出为:

dws_useroper_device_persona_mlb_ds_all_0.sql五次平均执行时间为:46.6810 秒

dws_useroper_device_persona_mlb_ds_all_10.sql五次平均执行时间为:0.7314 秒

dws_useroper_device_persona_mlb_ds_all_11.sql五次平均执行时间为:0.6443 秒

dws_useroper_device_persona_mlb_ds_all_12.sql五次平均执行时间为:0.6369 秒

dws_useroper_device_persona_mlb_ds_all_13.sql五次平均执行时间为:22.6676 秒

dws_useroper_device_persona_mlb_ds_all_14.sql五次平均执行时间为:0.6935 秒

dws_useroper_device_persona_mlb_ds_all_15.sql五次平均执行时间为:0.5505 秒

dws_useroper_device_persona_mlb_ds_all_16.sql五次平均执行时间为:30.9949 秒

dws_useroper_device_persona_mlb_ds_all_17.sql五次平均执行时间为:26.0273 秒

dws_useroper_device_persona_mlb_ds_all_18.sql五次平均执行时间为:28.7653 秒

dws_useroper_device_persona_mlb_ds_all_19.sql五次平均执行时间为:29.7989 秒

dws_useroper_device_persona_mlb_ds_all_1.sql五次平均执行时间为:30.6384 秒

dws_useroper_device_persona_mlb_ds_all_20.sql五次平均执行时间为:0.4865 秒

dws_useroper_device_persona_mlb_ds_all_21.sql五次平均执行时间为:0.3196 秒

dws_useroper_device_persona_mlb_ds_all_22.sql五次平均执行时间为:5.6267 秒

dws_useroper_device_persona_mlb_ds_all_23.sql五次平均执行时间为:0.0663 秒

dws_useroper_device_persona_mlb_ds_all_24.sql五次平均执行时间为:5.6848 秒

dws_useroper_device_persona_mlb_ds_all_2.sql五次平均执行时间为:45.7762 秒

dws_useroper_device_persona_mlb_ds_all_3.sql五次平均执行时间为:31.0191 秒

dws_useroper_device_persona_mlb_ds_all_4.sql五次平均执行时间为:0.6708 秒

dws_useroper_device_persona_mlb_ds_all_5.sql五次平均执行时间为:0.0796 秒

dws_useroper_device_persona_mlb_ds_all_6.sql五次平均执行时间为:0.0824 秒

dws_useroper_device_persona_mlb_ds_all_7.sql五次平均执行时间为:0.6179 秒

dws_useroper_device_persona_mlb_ds_all_8.sql五次平均执行时间为:0.6788 秒

dws_useroper_device_persona_mlb_ds_all_9.sql五次平均执行时间为:0.6545 秒

9、开启projection,测试25条SQL运行时间,

sh measure_performance.sh /tmp/pycharm_project_430/clickhouse 1

脚本输出为:

dws_useroper_device_persona_mlb_ds_all_0.sql五次平均执行时间为:0.2727 秒

dws_useroper_device_persona_mlb_ds_all_10.sql五次平均执行时间为:0.6687 秒

dws_useroper_device_persona_mlb_ds_all_11.sql五次平均执行时间为:0.6619 秒

dws_useroper_device_persona_mlb_ds_all_12.sql五次平均执行时间为:0.6504 秒

dws_useroper_device_persona_mlb_ds_all_13.sql五次平均执行时间为:22.5307 秒

dws_useroper_device_persona_mlb_ds_all_14.sql五次平均执行时间为:0.7521 秒

dws_useroper_device_persona_mlb_ds_all_15.sql五次平均执行时间为:0.5833 秒

dws_useroper_device_persona_mlb_ds_all_16.sql五次平均执行时间为:0.2670 秒

dws_useroper_device_persona_mlb_ds_all_17.sql五次平均执行时间为:0.2830 秒

dws_useroper_device_persona_mlb_ds_all_18.sql五次平均执行时间为:0.2477 秒

dws_useroper_device_persona_mlb_ds_all_19.sql五次平均执行时间为:0.2553 秒

dws_useroper_device_persona_mlb_ds_all_1.sql五次平均执行时间为:0.2320 秒

dws_useroper_device_persona_mlb_ds_all_20.sql五次平均执行时间为:0.5371 秒

dws_useroper_device_persona_mlb_ds_all_21.sql五次平均执行时间为:0.1113 秒

dws_useroper_device_persona_mlb_ds_all_22.sql五次平均执行时间为:0.1003 秒

dws_useroper_device_persona_mlb_ds_all_23.sql五次平均执行时间为:0.0716 秒

dws_useroper_device_persona_mlb_ds_all_24.sql五次平均执行时间为:0.0876 秒

dws_useroper_device_persona_mlb_ds_all_2.sql五次平均执行时间为:0.2383 秒

dws_useroper_device_persona_mlb_ds_all_3.sql五次平均执行时间为:0.2250 秒

dws_useroper_device_persona_mlb_ds_all_4.sql五次平均执行时间为:0.6925 秒

dws_useroper_device_persona_mlb_ds_all_5.sql五次平均执行时间为:0.0815 秒

dws_useroper_device_persona_mlb_ds_all_6.sql五次平均执行时间为:0.0865 秒

dws_useroper_device_persona_mlb_ds_all_7.sql五次平均执行时间为:0.7086 秒

dws_useroper_device_persona_mlb_ds_all_8.sql五次平均执行时间为:0.8154 秒

dws_useroper_device_persona_mlb_ds_all_9.sql五次平均执行时间为:0.7459 秒

6.2.3 数据分析

|

SQL |

无projection执行时间(秒) |

启用projection执行时间(秒) |

匹配视图号 |

性能提升 |

|

dws_useroper_device_persona_mlb_ds_all_0 |

46.681 |

0.2727 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

1701 8.07 85% |

|

dws_useroper_device_persona_mlb_ds_a ll_10 |

0.7314 |

0.6687 |

9.37 64% | |

|

dws_useroper_device_persona_mlb_ds_a ll_11 |

0.6443 |

0.6619 |

-2.65 90% | |

|

dws_useroper_device_persona_mlb_ds_a ll_12 |

0.6369 |

0.6504 |

-2.07 56% | |

|

dws_useroper_device_persona_mlb_ds_a ll_13 |

22.6676 |

22.5307 |

0.60 76% | |

|

dws_useroper_device_persona_mlb_ds_a ll_14 |

0.6935 |

0.7521 |

-7.79 15% | |

|

dws_useroper_device_persona_mlb_ds_a ll_15 |

0.5505 |

0.5833 |

-5.62 32% | |

|

dws_useroper_device_persona_mlb_ds_a ll_16 |

30.9949 |

0.267 |

dws_useroper_device_ persona_mlb_ds_all_c ube2 |

1150 8.57 68% |

|

dws_useroper_device_persona_mlb_ds_a ll_17 |

26.0273 |

0.283 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

9096 .925 8% |

|

dws_useroper_device_persona_mlb_ds_a ll_18 |

28.7653 |

0.2477 |

dws_useroper_device_ persona_mlb_ds_all_c ube0 |

1151 2.95 92% |

|

dws_useroper_device_persona_mlb_ds_a ll_19 |

29.7989 |

0.2553 |

dws_useroper_device_ persona_mlb_ds_all_c ube4 |

1157 2.11 12% |

|

dws_useroper_device_persona_mlb_ds_all_1 |

30.6384 |

0.232 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

1310 6.20 69% |

|

dws_useroper_device_persona_mlb_ds_a ll_20 |

0.4865 |

0.5371 |

-9.42 10% | |

|

dws_useroper_device_persona_mlb_ds_a ll_21 |

0.3196 |

0.1113 |

dws_useroper_device_ persona_mlb_ds_all_c ube0 |

187. 1518 % |

|

dws_useroper_device_persona_mlb_ds_a ll_22 |

5.6267 |

0.1003 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

5509 .870 4% |

|

dws_useroper_device_persona_mlb_ds_a ll_23 |

0.0663 |

0.0716 |

-7.40 22% | |

|

dws_useroper_device_persona_mlb_ds_a ll_24 |

5.6848 |

0.0876 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

6389 .497 7% |

|

dws_useroper_device_persona_mlb_ds_all_2 |

45.7762 |

0.2383 |

dws_useroper_device_ persona_mlb_ds_all_c ube3 |

1910 9.48 38% |

|

dws_useroper_device_persona_mlb_ds_all_3 |

31.0191 |

0.225 |

dws_useroper_device_ persona_mlb_ds_all_c ube3 |

1368 6.26 67% |

|

dws_useroper_device_persona_mlb_ds_all_4 |

0.6708 |

0.6925 |

-3.13 36% | |

|

dws_useroper_device_persona_mlb_ds_all_5 |

0.0796 |

0.0815 |

-2.33 13% | |

|

dws_useroper_device_persona_mlb_ds_all_6 |

0.0824 |

0.0865 |

-4.73 99% | |

|

dws_useroper_device_persona_mlb_ds_all_7 |

0.6179 |

0.7086 |

-12.7 999 % | |

|

dws_useroper_device_persona_mlb_ds_all_8 |

0.6788 |

0.8154 |

-16.7 525 % | |

|

dws_useroper_device_persona_mlb_ds_all_9 |

0.6545 |

0.7459 |

-12.2 537 % | |

|

总计 |

310.5932 |

31.9064 |

873. 4511 % |

6.3 单机性能提升测试

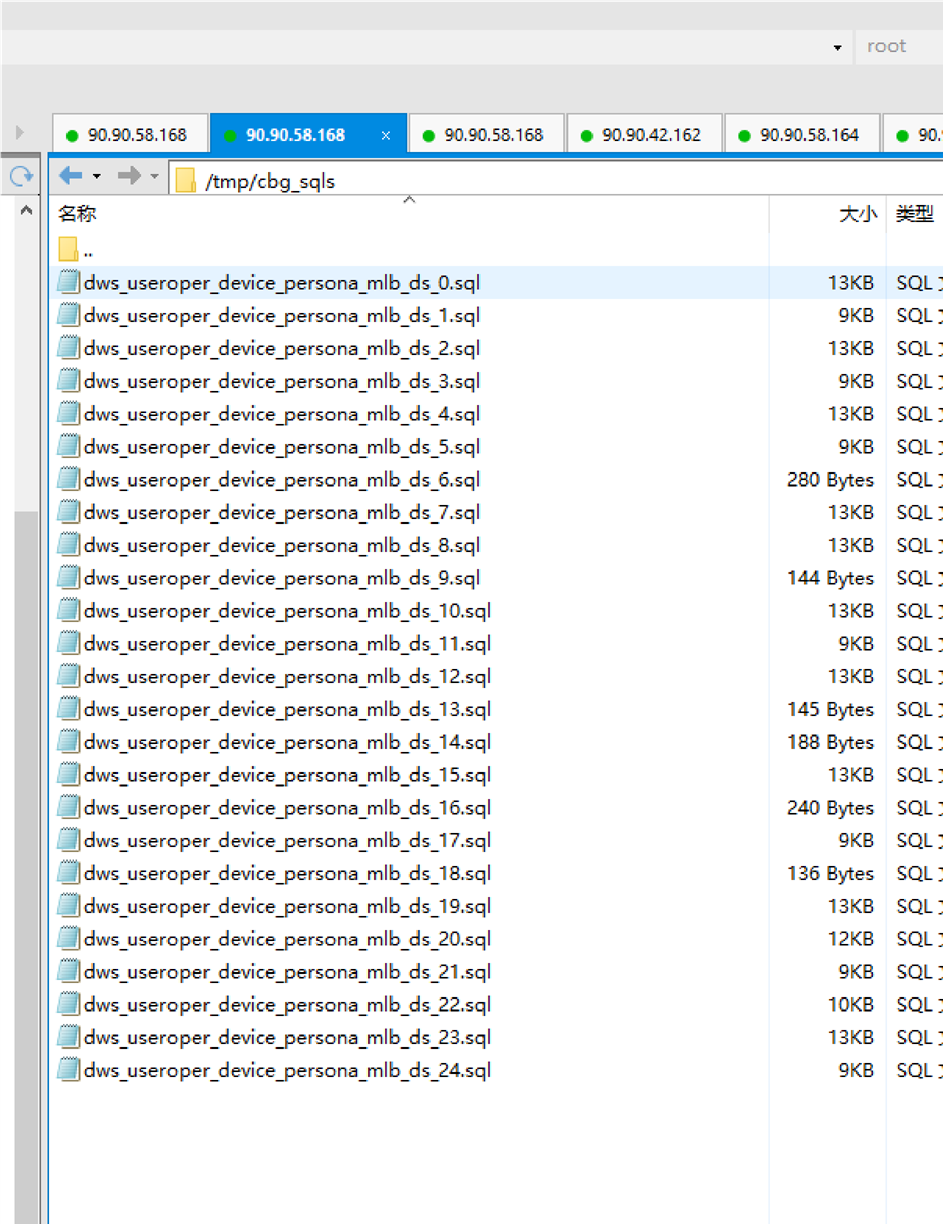

1、将以下SQL语句(查询本地表dws_useroper_device_persona_mlb_ds)分别输入.sql文件中,并放置于“/tmp/cbg_sqls”目录下。

如下图所示:

因为分布式性能测试中的每个节点的distributed_test.dws_useroper_device_persona_mlb_ds都有10亿行数据,且构建了projection。这里直接使用一台节点进行单机性能提升测试。

2、测试关闭projection的执行时间。

sh measure_performance.sh /tmp/cbg_sqls 0

脚本输出为:

dws_useroper_device_persona_mlb_ds_0.sql五次平均执行时间为:0.5102 秒

dws_useroper_device_persona_mlb_ds_10.sql五次平均执行时间为:0.4733 秒

dws_useroper_device_persona_mlb_ds_11.sql五次平均执行时间为:20.4022 秒

dws_useroper_device_persona_mlb_ds_12.sql五次平均执行时间为:0.4961 秒

dws_useroper_device_persona_mlb_ds_13.sql五次平均执行时间为:0.0726 秒

dws_useroper_device_persona_mlb_ds_14.sql五次平均执行时间为:1.8206 秒

dws_useroper_device_persona_mlb_ds_15.sql五次平均执行时间为:0.5058 秒

dws_useroper_device_persona_mlb_ds_16.sql五次平均执行时间为:1.7877 秒

dws_useroper_device_persona_mlb_ds_17.sql五次平均执行时间为:20.6862 秒

dws_useroper_device_persona_mlb_ds_18.sql五次平均执行时间为:0.2373 秒

dws_useroper_device_persona_mlb_ds_19.sql五次平均执行时间为:0.4287 秒

dws_useroper_device_persona_mlb_ds_1.sql五次平均执行时间为:20.7353 秒

dws_useroper_device_persona_mlb_ds_20.sql五次平均执行时间为:0.4021 秒

dws_useroper_device_persona_mlb_ds_21.sql五次平均执行时间为:17.8188 秒

dws_useroper_device_persona_mlb_ds_22.sql五次平均执行时间为:20.6722 秒

dws_useroper_device_persona_mlb_ds_23.sql五次平均执行时间为:0.5312 秒

dws_useroper_device_persona_mlb_ds_24.sql五次平均执行时间为:34.8628 秒

dws_useroper_device_persona_mlb_ds_2.sql五次平均执行时间为:0.5608 秒

dws_useroper_device_persona_mlb_ds_3.sql五次平均执行时间为:34.9922 秒

dws_useroper_device_persona_mlb_ds_4.sql五次平均执行时间为:0.4801 秒

dws_useroper_device_persona_mlb_ds_5.sql五次平均执行时间为:20.6449 秒

dws_useroper_device_persona_mlb_ds_6.sql五次平均执行时间为:0.0573 秒

dws_useroper_device_persona_mlb_ds_7.sql五次平均执行时间为:16.3198 秒

dws_useroper_device_persona_mlb_ds_8.sql五次平均执行时间为:0.5600 秒

dws_useroper_device_persona_mlb_ds_9.sql五次平均执行时间为:0.0723 秒

3、测试开启projection性能。

sh measure_performance.sh /tmp/cbg_sqls 1

脚本输出为:

dws_useroper_device_persona_mlb_ds_0.sql五次平均执行时间为:0.5162 秒

dws_useroper_device_persona_mlb_ds_10.sql五次平均执行时间为:0.4820 秒

dws_useroper_device_persona_mlb_ds_11.sql五次平均执行时间为:0.1564 秒

dws_useroper_device_persona_mlb_ds_12.sql五次平均执行时间为:0.4887 秒

dws_useroper_device_persona_mlb_ds_13.sql五次平均执行时间为:0.0701 秒

dws_useroper_device_persona_mlb_ds_14.sql五次平均执行时间为:0.0860 秒

dws_useroper_device_persona_mlb_ds_15.sql五次平均执行时间为:0.4759 秒

dws_useroper_device_persona_mlb_ds_16.sql五次平均执行时间为:0.0749 秒

dws_useroper_device_persona_mlb_ds_17.sql五次平均执行时间为:0.1364 秒

dws_useroper_device_persona_mlb_ds_18.sql五次平均执行时间为:0.0741 秒

dws_useroper_device_persona_mlb_ds_19.sql五次平均执行时间为:0.4211 秒

dws_useroper_device_persona_mlb_ds_1.sql五次平均执行时间为:0.1462 秒

dws_useroper_device_persona_mlb_ds_20.sql五次平均执行时间为:0.4020 秒

dws_useroper_device_persona_mlb_ds_21.sql五次平均执行时间为:0.1543 秒

dws_useroper_device_persona_mlb_ds_22.sql五次平均执行时间为:0.1579 秒

dws_useroper_device_persona_mlb_ds_23.sql五次平均执行时间为:0.5089 秒

dws_useroper_device_persona_mlb_ds_24.sql五次平均执行时间为:0.1411 秒

dws_useroper_device_persona_mlb_ds_2.sql五次平均执行时间为:0.5367 秒

dws_useroper_device_persona_mlb_ds_3.sql五次平均执行时间为:0.1512 秒

dws_useroper_device_persona_mlb_ds_4.sql五次平均执行时间为:0.4890 秒

dws_useroper_device_persona_mlb_ds_5.sql五次平均执行时间为:0.1532 秒

dws_useroper_device_persona_mlb_ds_6.sql五次平均执行时间为:0.0587 秒

dws_useroper_device_persona_mlb_ds_7.sql五次平均执行时间为:16.2735 秒

dws_useroper_device_persona_mlb_ds_8.sql五次平均执行时间为:0.5896 秒

dws_useroper_device_persona_mlb_ds_9.sql五次平均执行时间为:0.0739 秒

数据分析:

|

SQL |

无projection执行时间(秒) |

启用projection执行时间(秒) |

匹配视图号 |

性能提升 |

|

dws_useroper_device_persona_mlb_ds_all_0 |

0.5102 |

0.5162 |

-1.16 23% | |

|

dws_useroper_device_persona_mlb_ds_a ll_10 |

0.4733 |

0.482 |

-1.80 50% | |

|

dws_useroper_device_persona_mlb_ds_a ll_11 |

20.4022 |

0.1564 |

dws_useroper_device_ persona_mlb_ds_all_c ube2 |

1294 4.88 49% |

|

dws_useroper_device_persona_mlb_ds_a ll_12 |

0.4961 |

0.4887 |

1.51 42% | |

|

dws_useroper_device_persona_mlb_ds_a ll_13 |

0.0726 |

0.0701 |

3.56 63% | |

|

dws_useroper_device_persona_mlb_ds_a ll_cube1 |

1.8206 |

0.086 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

2016 .976 7% |

|

dws_useroper_device_persona_mlb_ds_a ll_15 |

0.5058 |

0.4759 |

6.28 28% | |

|

dws_useroper_device_persona_mlb_ds_a ll_16 |

1.7877 |

0.0749 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

2286 .782 4% |

|

dws_useroper_device_persona_mlb_ds_a ll_17 |

20.6862 |

0.1364 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

1506 5.83 58% |

|

dws_useroper_device_persona_mlb_ds_a ll_18 |

0.2373 |

0.0741 |

dws_useroper_device_ persona_mlb_ds_all_c ube0 |

220. 2429 % |

|

dws_useroper_device_persona_mlb_ds_a ll_19 |

0.4287 |

0.4211 |

1.80 48% | |

|

dws_useroper_device_persona_mlb_ds_all_1 |

20.7353 |

0.1462 |

dws_useroper_device_ persona_mlb_ds_all_c ube3 |

1408 2.83 17% |

|

dws_useroper_device_persona_mlb_ds_a ll_20 |

0.4021 |

0.402 |

0.02 49% | |

|

dws_useroper_device_persona_mlb_ds_a ll_21 |

17.8188 |

0.1543 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

1144 8.15 29% |

|

dws_useroper_device_persona_mlb_ds_a ll_22 |

20.6722 |

0.1579 |

dws_useroper_device_ persona_mlb_ds_all_c ube4 |

1299 1.95 69% |

|

dws_useroper_device_persona_mlb_ds_a ll_23 |

0.5312 |

0.5089 |

4.38 20% | |

|

dws_useroper_device_persona_mlb_ds_a ll_24 |

34.8628 |

0.1411 |

dws_useroper_device_ persona_mlb_ds_all_c ube3 |

2460 7.86 68% |

|

dws_useroper_device_persona_mlb_ds_all_2 |

0.5608 |

0.5367 |

4.49 04% | |

|

dws_useroper_device_persona_mlb_ds_all_3 |

34.9922 |

0.1512 |

dws_useroper_device_ persona_mlb_ds_all_c ube1 |

2304 2.98 94% |

|

dws_useroper_device_persona_mlb_ds_all_4 |

0.4801 |

0.489 |

-1.82 00% | |

|

dws_useroper_device_persona_mlb_ds_all_5 |

20.6449 |

0.1532 |

dws_useroper_device_ persona_mlb_ds_all_c ube0 |

1337 5.78 33% |

|

dws_useroper_device_persona_mlb_ds_all_6 |

0.0573 |

0.0587 |

-2.38 50% | |

|

dws_useroper_device_persona_mlb_ds_all_7 |

16.3198 |

16.2735 |

0.28 45% | |

|

dws_useroper_device_persona_mlb_ds_all_8 |

0.56 |

0.5896 |

-5.02 04% | |

|

dws_useroper_device_persona_mlb_ds_all_9 |

0.0723 |

0.0739 |

-2.16 51% | |

|

总计 |

216.1305 |

22.818 |

847. 1930 % |