- 以PySpark任务提交的独立Python包中为ARM版本Python为例说明。

- 集群为x86与ARM的混合部署Spark集群。

- 任务脚本“/opt/test_spark.py”为举例脚本,可用其它PySpark任务替代。

- test_spark.py脚本内容如下。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19

# test_spark.py import os import sys from pyspark import SparkContext from pyspark import SparkConf conf = SparkConf() conf.setAppName("get-hosts") sc = SparkContext(conf=conf) def noop(x): import socket import sys return socket.gethostname() + ' '.join(sys.path) + ' '.join(os.environ) rdd = sc.parallelize(range(1000), 100) hosts = rdd.map(noop).distinct().collect() print(hosts)

- 提交PySpark任务。

1PYSPARK_PYTHON=./ANACONDA/mlpy_env/bin/python spark-submit --conf spark.yarn.appMasterEnv.PYSPARK_PYTHON=./ANACONDA/mlpy_env/bin/python --conf spark.executorEnv.PYSPARK_PYTHON=./ANACONDA/mlpy_env/bin/python --master yarn-cluster --archives /opt/mlpy_env.zip#ANACONDA /opt/test_spark.py

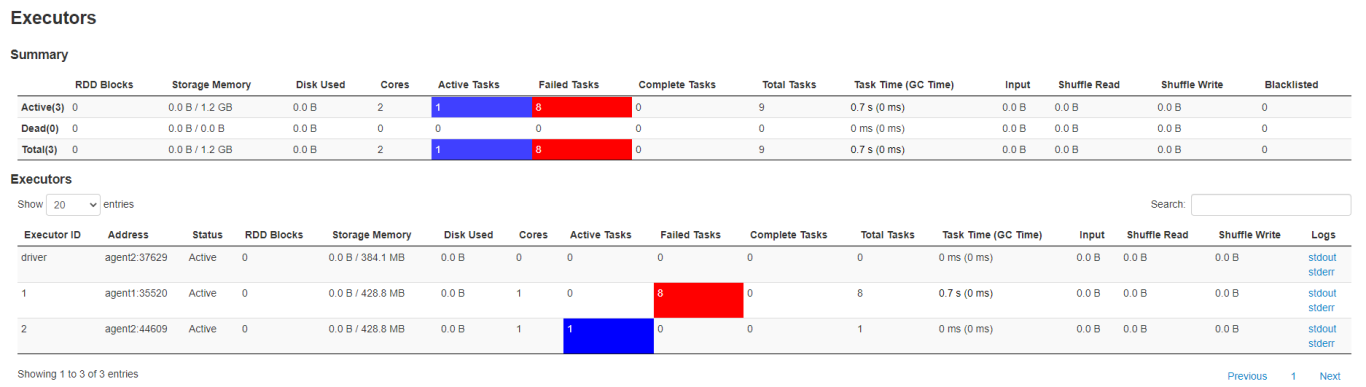

- 分析Spark2 History服务中的任务运行情况。

executors运行情况如下图:

其中,agent1为x86服务器,agent2为ARM服务器。agent1上运行失败是由于独立Python包中打包的是ARM版本的Python,导致在x86服务器上运行失败。

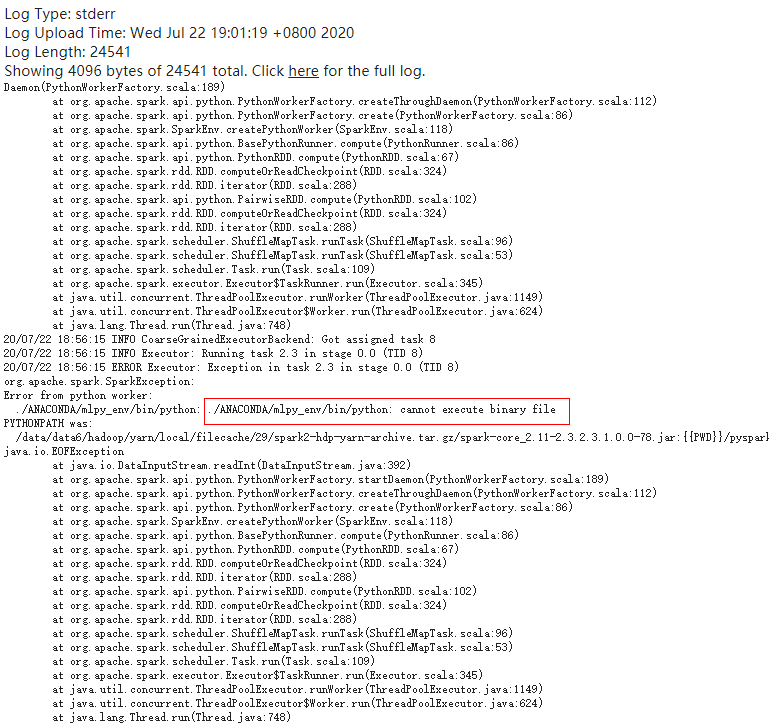

运行失败executor的错误日志为:

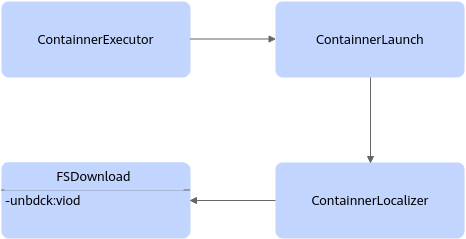

- 分析archives文件“opt/mlpy_env.zip”流转过程。