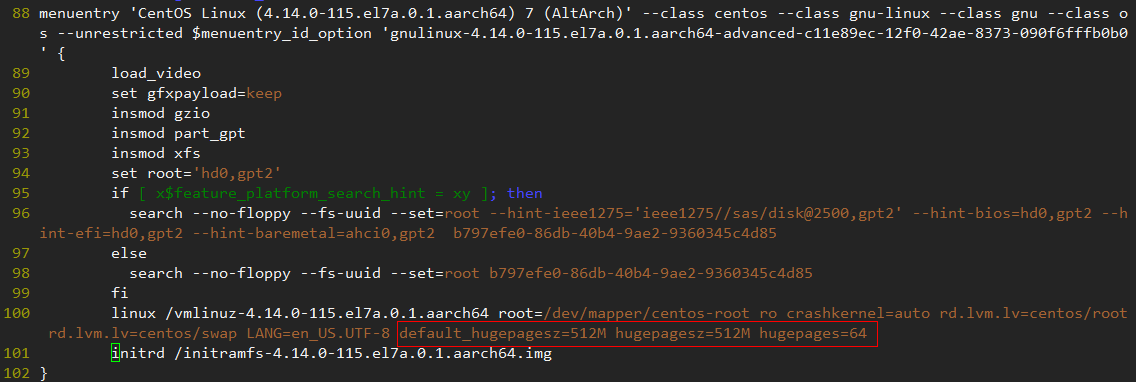

- Configure huge pages by editing the boot entry.

```shell
vim /etc/grub2-efi.cfg
```

Append "default_hugepagesz=512M hugepagesz=512M hugepages=64" to the kernel command line of the boot entry.

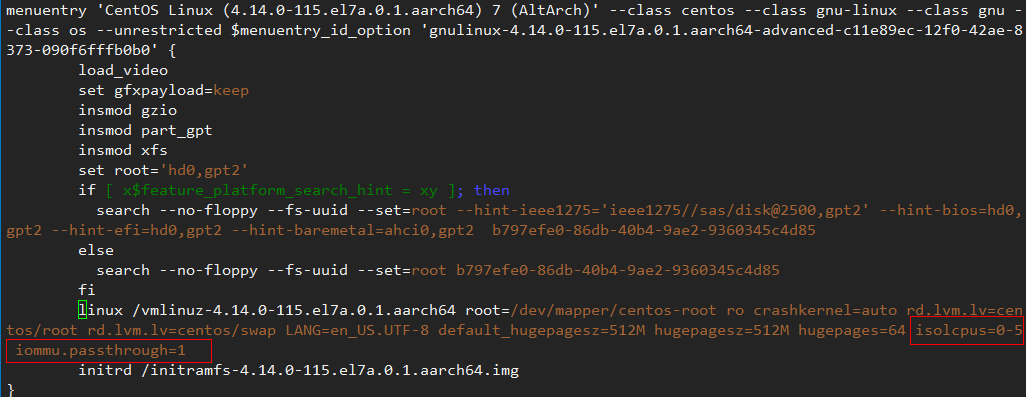
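With these parameters the kernel reserves 64 huge pages of 512 MiB each at boot, 32 GiB in total. The DPDK socket memory and the VM memory configured in later steps are both taken from this pool. The following Python sketch computes the reservation from a kernel command line string; the helper names are hypothetical, and it assumes sizes are written as a number with a K, M, or G suffix.

```python
# Sketch: total memory reserved by the hugepagesz=/hugepages= boot parameters.
# Assumption: sizes use the "<number><K|M|G>" form, e.g. "512M".

UNITS = {"K": 1 << 10, "M": 1 << 20, "G": 1 << 30}


def parse_size(text: str) -> int:
    """Convert a kernel size string such as '512M' into bytes."""
    suffix = text[-1].upper()
    if suffix in UNITS:
        return int(text[:-1]) * UNITS[suffix]
    return int(text)  # no suffix: plain byte count


def hugepage_reservation(cmdline: str) -> int:
    """Bytes reserved by hugepagesz= and hugepages= (last occurrence wins)."""
    params = dict(tok.split("=", 1) for tok in cmdline.split() if "=" in tok)
    return parse_size(params["hugepagesz"]) * int(params["hugepages"])


if __name__ == "__main__":
    line = "default_hugepagesz=512M hugepagesz=512M hugepages=64"
    print(hugepage_reservation(line) // (1 << 30), "GiB")  # prints: 32 GiB
```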

- Enable IOMMU and CPU isolation.

- Edit the file "/etc/grub2-efi.cfg".

```shell
vim /etc/grub2-efi.cfg
```

- Append "isolcpus=0-5 iommu.passthrough=1" to the same kernel command line.


- Reboot the machine for the changes to take effect.
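After the reboot, it is worth confirming that the kernel received the new parameters and actually reserved the huge pages. A minimal Python sketch, assuming a Linux host where /proc/cmdline and /proc/meminfo are readable; the expected parameter set simply mirrors the text added in the steps above, and the function names are hypothetical.

```python
import os

# Sketch: verify the boot parameters and the huge page reservation after reboot.
EXPECTED_PARAMS = {
    "default_hugepagesz=512M",
    "hugepagesz=512M",
    "hugepages=64",
    "isolcpus=0-5",
    "iommu.passthrough=1",
}


def missing_params(cmdline: str, expected=EXPECTED_PARAMS) -> set:
    """Expected parameters that do not appear as tokens on the command line."""
    return set(expected) - set(cmdline.split())


def hugepage_stats(meminfo: str) -> dict:
    """HugePages_Total, HugePages_Free and Hugepagesize (in kB) from /proc/meminfo."""
    wanted = ("HugePages_Total", "HugePages_Free", "Hugepagesize")
    stats = {}
    for line in meminfo.splitlines():
        key, _, value = line.partition(":")
        if key in wanted:
            stats[key] = int(value.split()[0])
    return stats


if __name__ == "__main__" and os.path.exists("/proc/cmdline"):
    with open("/proc/cmdline") as f:
        print("missing parameters:", missing_params(f.read()) or "none")
    with open("/proc/meminfo") as f:
        print(hugepage_stats(f.read()))  # expect 64 pages of 524288 kB
```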

- Start OVS.

- Create the OVS working directories.

```shell
mkdir -p /var/run/openvswitch
mkdir -p /var/log/openvswitch
```

- Create the OVS database file.

```shell
ovsdb-tool create /etc/openvswitch/conf.db
```

- Start ovsdb-server.

```shell
ovsdb-server --remote=punix:/var/run/openvswitch/db.sock \
    --remote=db:Open_vSwitch,Open_vSwitch,manager_options \
    --private-key=db:Open_vSwitch,SSL,private_key \
    --certificate=db:Open_vSwitch,SSL,certificate \
    --bootstrap-ca-cert=db:Open_vSwitch,SSL,ca_cert \
    --pidfile --detach --log-file
```

- Set the OVS startup parameters.

```shell
ovs-vsctl --no-wait set Open_vSwitch . \
    other_config:dpdk-init=true \
    other_config:dpdk-socket-mem="4096" \
    other_config:dpdk-lcore-mask="0x1F" \
    other_config:pmd-cpu-mask="0x1E"
```

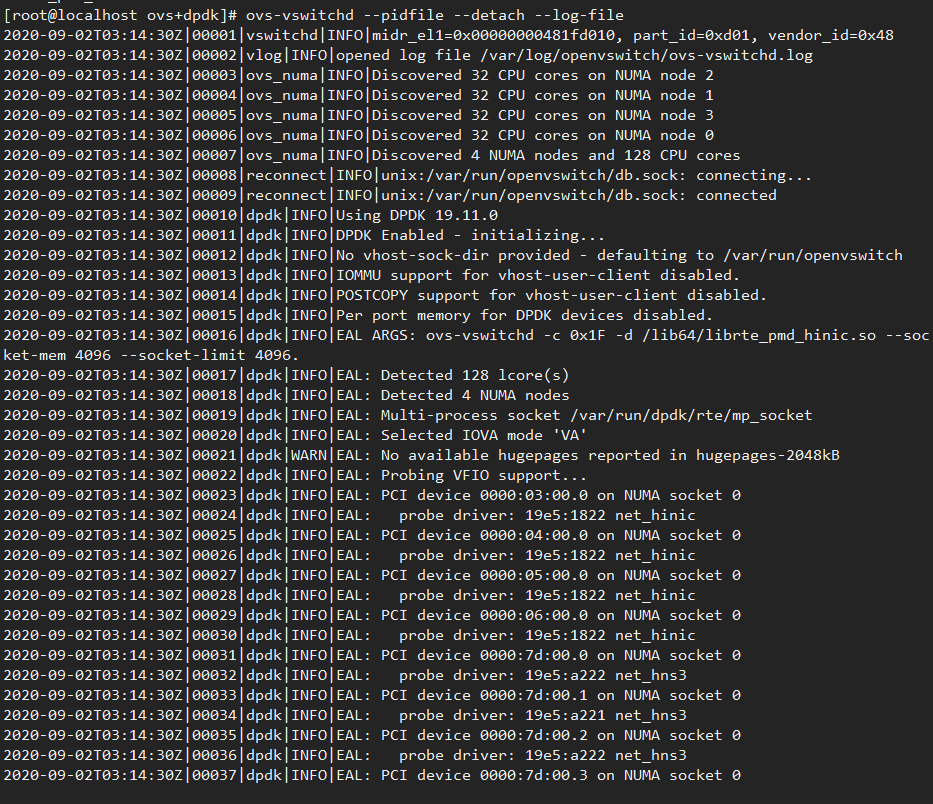
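The DPDK options are easier to check once the hex masks are decoded. Each set bit n selects CPU core n, so dpdk-lcore-mask 0x1F selects cores 0-4 and pmd-cpu-mask 0x1E selects cores 1-4, all inside the isolcpus=0-5 range configured earlier. dpdk-socket-mem="4096" requests 4096 MB of huge page memory on NUMA socket 0. A small Python sketch for decoding and checking the masks (helper names are hypothetical):

```python
# Sketch: decode the OVS-DPDK core masks and check them against isolcpus.


def mask_to_cores(mask: str) -> list:
    """'0x1E' -> [1, 2, 3, 4]: set bit n selects CPU core n."""
    value = int(mask, 16)
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


def cores_to_mask(cores) -> str:
    """[1, 2, 3, 4] -> '0x1E'."""
    value = 0
    for core in cores:
        value |= 1 << core
    return "0x" + format(value, "X")


def parse_cpu_list(text: str) -> set:
    """Expand a kernel CPU list such as '0-5' or '0,2,4-7' into a set of cores."""
    cores = set()
    for part in filter(None, text.split(",")):
        lo, _, hi = part.partition("-")
        cores.update(range(int(lo), int(hi or lo) + 1))
    return cores


if __name__ == "__main__":
    isolated = parse_cpu_list("0-5")   # isolcpus=0-5
    lcores = mask_to_cores("0x1F")     # dpdk-lcore-mask -> [0, 1, 2, 3, 4]
    pmd = mask_to_cores("0x1E")        # pmd-cpu-mask    -> [1, 2, 3, 4]
    print("lcores:", lcores, "PMD cores:", pmd)
    print("PMD cores isolated:", set(pmd) <= isolated)
```

The same helpers can be used in reverse: `cores_to_mask([1, 2, 3, 4])` yields the `0x1E` string to paste into pmd-cpu-mask.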

- Start ovs-vswitchd.

```shell
ovs-vswitchd --pidfile --detach --log-file
```


- Bind the NICs to the DPDK user-space driver.

- Load the igb_uio driver.

```shell
modprobe igb_uio
```

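Before loading it for the first time, run depmod so that the system can resolve module dependencies. The driver is provided by DPDK and is installed by default at "/lib/modules/4.14.0-115.el7a.0.1.aarch64/extra/dpdk/igb_uio.ko" when DPDK is installed. The module must be reloaded after every system reboot.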

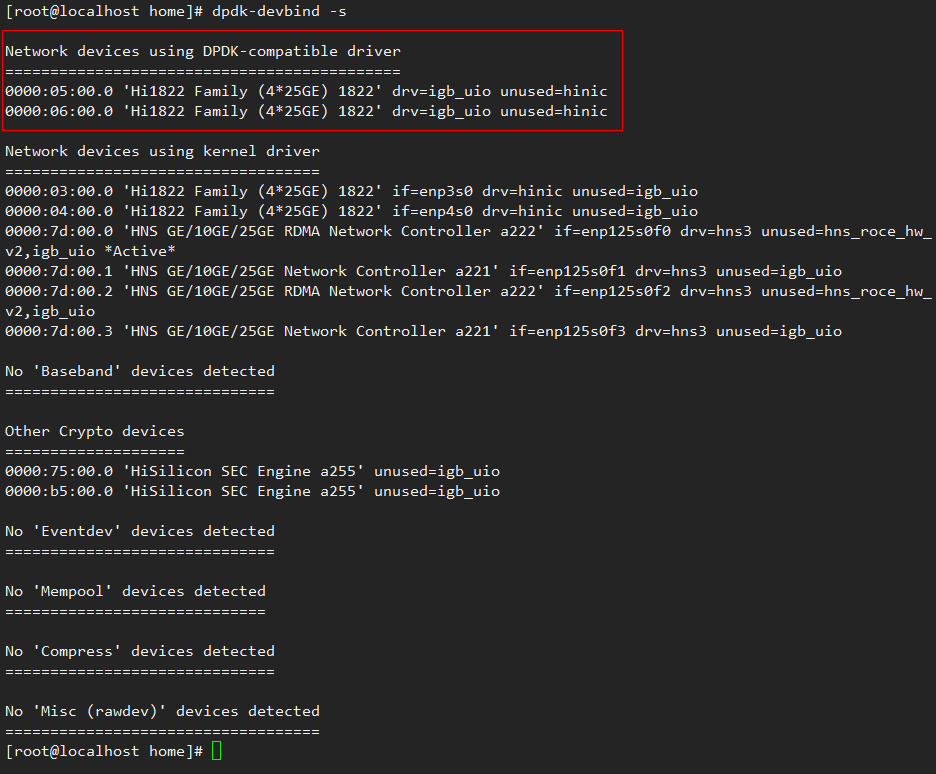
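Because the module has to be reloaded after every reboot, a quick check before binding ports can save a failed bind. A minimal Python sketch that reads /proc/modules (Linux only; the function names are hypothetical). It looks for uio as well, since igb_uio registers its devices through the generic uio module.

```python
import os


def loaded_modules(proc_modules: str) -> set:
    """Module names are the first column of each /proc/modules line."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def igb_uio_ready(proc_modules: str) -> bool:
    """igb_uio depends on the generic uio module, so both should be listed."""
    return {"uio", "igb_uio"} <= loaded_modules(proc_modules)


if __name__ == "__main__" and os.path.exists("/proc/modules"):
    with open("/proc/modules") as f:
        if igb_uio_ready(f.read()):
            print("igb_uio is loaded")
        else:
            print("igb_uio is not loaded: run depmod (first time only), then modprobe igb_uio")
```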

- View the network port information.

```shell
dpdk-devbind -s
```

Find the PCI addresses of the ports to be bound.

A port to be bound must be down and must not be a NIC that is currently in use; otherwise, binding fails.
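On hosts with many ports, the status listing can be filtered programmatically. The sketch below assumes each device line of `dpdk-devbind -s` has the form `<PCI address> '<device name>' if=... drv=... unused=...`, optionally followed by `*Active*` for ports in use; this layout may differ between DPDK versions, so treat the parser as illustrative. It lists ports that are neither bound to igb_uio yet nor marked active. Function names are hypothetical.

```python
import re

# Assumed device-line layout (may vary between DPDK versions):
#   0000:06:00.0 'Device name' if=enp6s0 drv=<kernel driver> unused=igb_uio *Active*
DEVICE_LINE = re.compile(
    r"^(?P<pci>[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s+'[^']*'(?P<rest>.*)$"
)


def parse_devbind(output: str) -> list:
    """Turn `dpdk-devbind -s` text into dicts with pci, if, drv and active keys."""
    devices = []
    for line in output.splitlines():
        match = DEVICE_LINE.match(line.strip())
        if not match:
            continue  # section titles, underlines, blank lines
        rest = match.group("rest")
        fields = dict(tok.split("=", 1) for tok in rest.split() if "=" in tok)
        devices.append({
            "pci": match.group("pci"),
            "if": fields.get("if"),
            "drv": fields.get("drv"),
            "active": "*Active*" in rest,
        })
    return devices


def bind_candidates(devices: list) -> list:
    """PCI addresses that are not on igb_uio yet and not flagged *Active*."""
    return [d["pci"] for d in devices if d["drv"] != "igb_uio" and not d["active"]]
```

On the host, the input text could be obtained with `subprocess.run(["dpdk-devbind", "-s"], capture_output=True, text=True).stdout`.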

- Bind the ports.

```shell
dpdk-devbind --bind=igb_uio 0000:05:00.0
dpdk-devbind --bind=igb_uio 0000:06:00.0
```

To roll back this operation, unbind the port as follows:

```shell
dpdk-devbind -u 0000:05:00.0
```

- Run `dpdk-devbind -s` again to verify that the binding succeeded.


- Build the network topology.

The topology verified here is the classic OVS topology, shown in Figure 1.

The commands below build the topology on Host1. The commands on Host2 are essentially the same; only the IP address of br-dpdk differs.

- Add and configure the br-dpdk bridge.

```shell
ovs-vsctl add-br br-dpdk -- set bridge br-dpdk datapath_type=netdev
ovs-vsctl add-bond br-dpdk dpdk-bond p0 p1 \
    -- set Interface p0 type=dpdk options:dpdk-devargs=0000:05:00.0 \
    -- set Interface p1 type=dpdk options:dpdk-devargs=0000:06:00.0
ovs-vsctl set port dpdk-bond bond_mode=balance-tcp
ovs-vsctl set port dpdk-bond lacp=active
ifconfig br-dpdk 192.168.2.1/24 up
```

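`ifconfig br-dpdk 192.168.2.1/24 up` assigns an IP address to the br-dpdk bridge; this address is used as the local endpoint of the VXLAN tunnel. On Host2, run `ifconfig br-dpdk 192.168.2.2/24 up` as its counterpart. Keep the tunnel subnet separate from the VM subnet.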
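Both addressing rules (the two br-dpdk addresses share one subnet, and that subnet does not overlap the VM subnet) can be checked with Python's standard ipaddress module. This sketch assumes the VMs use 192.168.1.0/24, inferred from the ping target 192.168.1.21 in the final step; the /24 prefix is an assumption, and the function names are hypothetical.

```python
import ipaddress


def same_subnet(a: str, b: str) -> bool:
    """True if two interface addresses (address/prefix) share one network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


def subnets_disjoint(tunnel: str, vm: str) -> bool:
    """True if the VXLAN underlay network and the VM network do not overlap."""
    return not ipaddress.ip_interface(tunnel).network.overlaps(
        ipaddress.ip_interface(vm).network
    )


if __name__ == "__main__":
    host1, host2 = "192.168.2.1/24", "192.168.2.2/24"  # br-dpdk on Host1 / Host2
    vm_addr = "192.168.1.21/24"  # assumed VM address and prefix
    print("tunnel endpoints in one subnet:", same_subnet(host1, host2))
    print("tunnel and VM subnets separate:", subnets_disjoint(host1, vm_addr))
```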

- Add and configure the br-int bridge.

```shell
ovs-vsctl add-br br-int -- set bridge br-int datapath_type=netdev
ovs-vsctl add-port br-int vxlan0 -- set Interface vxlan0 type=vxlan \
    options:local_ip=192.168.2.1 options:remote_ip=192.168.2.2
```

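In this topology, br-int has a single VXLAN port, so all traffic leaving the host is encapsulated with a VXLAN header. The local_ip of the VXLAN port is the IP address of br-dpdk on the local host, and remote_ip is the IP address of br-dpdk on the peer host.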
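Because only the two addresses swap between hosts, the br-int commands can be generated per host from one template. A Python sketch that only builds and prints the command strings (it does not run them); the host-to-address table is taken from this section, and the function names are hypothetical.

```python
# Sketch: build the br-int / VXLAN commands for each host; nothing is executed.
BR_DPDK_IP = {"Host1": "192.168.2.1", "Host2": "192.168.2.2"}  # from this section


def br_int_commands(local_ip: str, remote_ip: str, port: str = "vxlan0") -> list:
    return [
        "ovs-vsctl add-br br-int -- set bridge br-int datapath_type=netdev",
        f"ovs-vsctl add-port br-int {port} -- set Interface {port} type=vxlan"
        f" options:local_ip={local_ip} options:remote_ip={remote_ip}",
    ]


def commands_for(host: str) -> list:
    """local_ip is this host's br-dpdk address; remote_ip is the other host's."""
    (peer,) = [h for h in BR_DPDK_IP if h != host]
    return br_int_commands(BR_DPDK_IP[host], BR_DPDK_IP[peer])


if __name__ == "__main__":
    for host in BR_DPDK_IP:
        print(f"# {host}")
        print("\n".join(commands_for(host)))
```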

- Add and configure the br-plyN bridges (br-ply1 for the first VM).

```shell
ovs-vsctl add-br br-ply1 -- set bridge br-ply1 datapath_type=netdev
ovs-vsctl add-port br-ply1 tap1 -- set Interface tap1 type=dpdkvhostuserclient \
    options:vhost-server-path=/var/run/openvswitch/tap1
ovs-vsctl add-port br-ply1 p-tap1-int -- set Interface p-tap1-int type=patch options:peer=p-tap1
ovs-vsctl add-port br-int p-tap1 -- set Interface p-tap1 type=patch options:peer=p-tap1-int
```

Each additional VM in this topology gets its own br-ply bridge. That bridge carries one dpdkvhostuserclient port for the VM and a patch port connected to br-int.
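Since every VM repeats the same four commands with a different index, they can be generated from a template. This Python sketch reproduces the br-ply1 commands above for n = 1; extending the tapN / p-tapN / p-tapN-int naming to further VMs is an assumption, and the vhost-server-path it produces must match the socket path in that VM's XML. The function name is hypothetical, and the sketch only builds command strings.

```python
# Sketch: generate the per-VM br-plyN commands; nothing is executed.
# Assumption: VM n uses tapN, p-tapN-int and p-tapN, as VM 1 does above.


def ply_bridge_commands(n: int, run_dir: str = "/var/run/openvswitch") -> list:
    br, tap = f"br-ply{n}", f"tap{n}"
    ply_patch, int_patch = f"p-tap{n}-int", f"p-tap{n}"
    return [
        f"ovs-vsctl add-br {br} -- set bridge {br} datapath_type=netdev",
        # vhost-user client port; the VM's XML must use the same socket path.
        f"ovs-vsctl add-port {br} {tap} -- set Interface {tap} type=dpdkvhostuserclient"
        f" options:vhost-server-path={run_dir}/{tap}",
        # Patch pair linking br-plyN and br-int; each end names the other as peer.
        f"ovs-vsctl add-port {br} {ply_patch} -- set Interface {ply_patch}"
        f" type=patch options:peer={int_patch}",
        f"ovs-vsctl add-port br-int {int_patch} -- set Interface {int_patch}"
        f" type=patch options:peer={ply_patch}",
    ]


if __name__ == "__main__":
    for n in (1, 2):
        print("\n".join(ply_bridge_commands(n)))
```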

- Verify the topology.

```shell
ovs-vsctl show
```

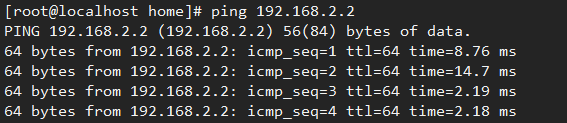

- Check connectivity with the br-dpdk bridge on the peer host.

```shell
ping 192.168.2.2
```


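- Start the VMs. Pay particular attention to the huge page and network port settings in the VM configuration. The following VM configuration file can be used as a reference: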

```xml
<domain type='kvm'>
  <name>VM1</name>
  <uuid>fb8eb9ff-21a7-42ad-b233-2a6e0470e0b5</uuid>
  <memory unit='KiB'>2097152</memory>
  <currentMemory unit='KiB'>2097152</currentMemory>
  <memoryBacking>
    <hugepages>
      <page size='524288' unit='KiB' nodeset='0'/>
    </hugepages>
    <locked/>
  </memoryBacking>
  <vcpu placement='static'>4</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='6'/>
    <vcpupin vcpu='1' cpuset='7'/>
    <vcpupin vcpu='2' cpuset='8'/>
    <vcpupin vcpu='3' cpuset='9'/>
    <emulatorpin cpuset='0-3'/>
  </cputune>
  <numatune>
    <memory mode='strict' nodeset='0'/>
  </numatune>
  <os>
    <type arch='aarch64' machine='virt-rhel7.6.0'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/AAVMF/AAVMF_CODE.fd</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/VM1_VARS.fd</nvram>
    <boot dev='hd'/>
  </os>
  <features>
    <acpi/>
    <gic version='3'/>
  </features>
  <cpu mode='host-passthrough' check='none'>
    <topology sockets='1' cores='4' threads='1'/>
    <numa>
      <cell id='0' cpus='0-3' memory='2097152' unit='KiB' memAccess='shared'/>
    </numa>
  </cpu>
  <clock offset='utc'/>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <devices>
    <emulator>/usr/libexec/qemu-kvm</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/home/kvm/images/1.img'/>
      <target dev='vda' bus='virtio'/>
      <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </disk>
    <disk type='file' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <target dev='sda' bus='scsi'/>
      <readonly/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <controller type='usb' index='0' model='qemu-xhci' ports='8'>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
    </controller>
    <controller type='scsi' index='0' model='virtio-scsi'>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
    </controller>
    <controller type='pci' index='0' model='pcie-root'/>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x8'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0x9'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0xa'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0xb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0xc'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
    </controller>
    <interface type='vhostuser'>
      <source type='unix' path='/var/run/openvswitch/tap1' mode='server'/>
      <target dev='tap1'/>
      <model type='virtio'/>
      <driver name='vhost' queues='4' rx_queue_size='1024' tx_queue_size='1024'/>
    </interface>
    <serial type='pty'>
      <target type='system-serial' port='0'>
        <model name='pl011'/>
      </target>
    </serial>
    <console type='pty'>
      <target type='serial' port='0'/>
    </console>
  </devices>
</domain>
```

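- The memoryBacking element specifies which size of host huge page the VM memory is allocated from. Because the host is configured with 512 MB huge pages, the same size (524288 KiB) is used here.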

- The numatune element specifies the host NUMA node from which the VM memory is allocated. Here it matches the NUMA node of the NIC.

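- The numa child element of cpu specifies how guest memory is accessed. Because a vhost-user virtual port is configured, the VM must use shared huge page memory (memAccess='shared').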

- The interface element defines the VM's virtual network port:

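- In source, path specifies the location of the socket file used for communication between the host and the VM, and mode specifies the role of the VM-side socket. Because OVS is configured with dpdkvhostuserclient, which runs in client mode, the VM side is set to server mode.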

- target specifies the name of the socket used by the VM.

- driver specifies the driver used by the VM, together with the number of queues and the queue depth. The vhost-user driver is used here.

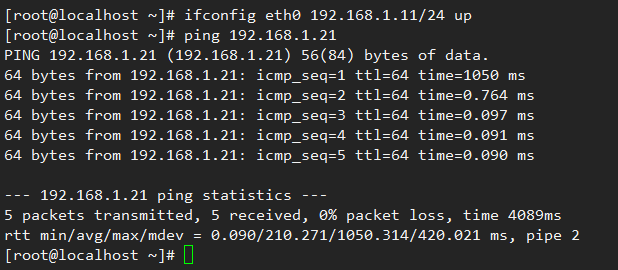
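Several of the points above can be checked against a domain XML before the VM is started. The following Python sketch uses the standard xml.etree.ElementTree module. The host page size (524288 KiB) comes from this section; treating the PMD cores 1-4 (decoded from pmd-cpu-mask 0x1E) as off-limits for vCPU pinning is an added assumption, since busy-polling PMD threads are usually not shared with guest vCPUs. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET

PMD_CORES = {1, 2, 3, 4}  # assumption: decoded from pmd-cpu-mask="0x1E"


def parse_cpu_list(text: str) -> set:
    """Expand a cpuset such as '6' or '0-3,8' into a set of core numbers."""
    cores = set()
    for part in filter(None, text.split(",")):
        lo, _, hi = part.partition("-")
        cores.update(range(int(lo), int(hi or lo) + 1))
    return cores


def check_domain(xml_text: str, host_page_kib: int = 524288) -> list:
    """Return a list of problems found in a libvirt domain XML (empty if none)."""
    root = ET.fromstring(xml_text)
    problems = []

    # memoryBacking: guest pages must match the host huge page size (unit assumed KiB).
    page = root.find("./memoryBacking/hugepages/page")
    if (page is None or int(page.get("size", "0")) != host_page_kib
            or page.get("unit", "KiB") != "KiB"):
        problems.append("hugepage size does not match the host")

    # cpu/numa: vhost-user needs shared guest memory.
    cells = root.findall("./cpu/numa/cell")
    if not cells or any(cell.get("memAccess") != "shared" for cell in cells):
        problems.append("NUMA cells must use memAccess='shared'")

    # interface: OVS uses dpdkvhostuserclient, so the VM side must be the server.
    for iface in root.findall("./devices/interface[@type='vhostuser']"):
        source = iface.find("source")
        if source is None or source.get("mode") != "server":
            problems.append("vhostuser source must use mode='server'")

    # cputune: keep vCPUs off the PMD cores (added assumption).
    for pin in root.findall("./cputune/vcpupin"):
        if parse_cpu_list(pin.get("cpuset", "")) & PMD_CORES:
            problems.append(f"vCPU {pin.get('vcpu')} is pinned to a PMD core")

    return problems
```

If the configuration above is saved as VM1.xml (a hypothetical file name), `check_domain(open("VM1.xml").read())` returns an empty list, since its page size, memAccess, socket mode and vCPU pinning (cores 6-9) all pass these checks.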

- Verify VM connectivity across hosts.

From inside the VM on Host1, check whether it can reach the VM on Host2.

```shell
ping 192.168.1.21
```

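VXLAN encapsulation increases the packet length, while the default MTU on the host is 1500. The MTU inside the VMs therefore has to be reduced for communication to work; an MTU of 1400 is recommended for the VM network ports.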
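The 1400 recommendation follows from the size of the VXLAN encapsulation. Over an IPv4 underlay, each frame from the VM carries its own 14-byte Ethernet header and is wrapped in an 8-byte VXLAN header, an 8-byte UDP header and a 20-byte outer IPv4 header, 50 bytes in total. The largest VM MTU that still fits a 1500-byte host MTU is therefore 1450, and 1400 leaves some extra headroom. A small Python sketch of the arithmetic, assuming no VLAN tags and an IPv4 underlay as in this section (function names are hypothetical):

```python
# Bytes VXLAN encapsulation adds around the VM's IP packet (IPv4 underlay, no VLAN tags).
INNER_ETHERNET = 14  # the VM's own Ethernet header, carried inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def vxlan_overhead(outer_ip_header: int = OUTER_IPV4) -> int:
    """Total bytes added on top of the VM's IP packet."""
    return INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + outer_ip_header


def max_vm_mtu(host_mtu: int = 1500, outer_ip_header: int = OUTER_IPV4) -> int:
    """Largest VM MTU whose encapsulated packet still fits the host MTU."""
    return host_mtu - vxlan_overhead(outer_ip_header)


if __name__ == "__main__":
    print("overhead:", vxlan_overhead())   # 50
    print("max VM MTU:", max_vm_mtu())     # 1450
    print("1400 fits:", 1400 <= max_vm_mtu())
```

Inside a Linux guest, the MTU can then be set with `ip link set dev <iface> mtu 1400`, where `<iface>` stands for the guest's network port name.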