The ZooKeeper and GlobalCache services must be started as the globalcacheop user.

Configure RBD for QEMU

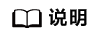

- Edit the QEMU configuration file and uncomment the user and group settings.

vi /etc/libvirt/qemu.conf

- Add the GlobalCache environment variables to the libvirtd service.

vi /usr/lib/systemd/system/libvirtd.service

In the [Service] section, add the following lines:

Environment="LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/gcache_adaptor_compile/third_part/lib/"
Environment="C_INCLUDE_PATH=$C_INCLUDE_PATH:/opt/gcache_adaptor_compile/third_part/inc/"

- Reload systemd and restart the libvirt service so that the changes take effect.

systemctl daemon-reload
systemctl restart libvirtd
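The two libvirt edits above can also be applied without an interactive editor. The following is a minimal sketch, assuming qemu.conf contains the stock commented lines #user = ... and #group = ..., and that the unit file has a [Service] header line; the function names are hypothetical. Only #user and #group at the very start of a line are matched, so the indented documentation examples in qemu.conf stay commented.

```shell
# Minimal sketch: apply the libvirt edits from the steps above without an editor.
# Assumptions (not from the original guide): qemu.conf contains the stock
# commented lines '#user = ...' and '#group = ...', and the unit file has a
# '[Service]' header line. Function names are hypothetical.

GC_LIB_ENV='Environment="LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/gcache_adaptor_compile/third_part/lib/"'
GC_INC_ENV='Environment="C_INCLUDE_PATH=$C_INCLUDE_PATH:/opt/gcache_adaptor_compile/third_part/inc/"'

# Uncomment '#user =' and '#group =' only when the key directly follows '#',
# so indented documentation examples such as '#       user = "qemu"' stay commented.
uncomment_qemu_user_group() {
    sed -i -E 's/^#(user|group)([[:space:]]*=)/\1\2/' "$1"
}

# Insert both Environment= lines right after the [Service] header.
# Running it twice is safe: it returns early if the first line is already present.
add_gcache_env_to_unit() {
    local unit="$1"
    if grep -qF -- "$GC_LIB_ENV" "$unit"; then
        return 0
    fi
    awk -v l1="$GC_LIB_ENV" -v l2="$GC_INC_ENV" \
        '{ print } /^\[Service\]/ { print l1; print l2 }' \
        "$unit" > "$unit.tmp" && mv "$unit.tmp" "$unit"
}
```

For example, run uncomment_qemu_user_group /etc/libvirt/qemu.conf and add_gcache_env_to_unit /usr/lib/systemd/system/libvirtd.service as root, then systemctl daemon-reload and systemctl restart libvirtd. Per the systemd.exec documentation, systemd does not expand $LD_LIBRARY_PATH inside Environment= values, so that literal text becomes a nonexistent first path entry; the appended GlobalCache directory is the part that takes effect.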

Start ccm-zk

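- Log in as globalcacheop.

- Go to the /opt/apache-zookeeper-3.6.3-bin/bin directory.

cd /opt/apache-zookeeper-3.6.3-bin/bin

- Start zkServer.

sh zkServer.sh start

- Query the zkServer status.

sh zkServer.sh status

In cluster mode, the last line of the output shows the node's role: ZooKeeper's leader election picks one leader, and the remaining nodes become followers. A single-server deployment reports Mode: standalone instead.

Mode: follower / Mode: leader (cluster mode)

To stop the ZooKeeper server, run:

sh zkServer.sh stop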
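To script the status check, the Mode line can be extracted from the status output. This is a minimal sketch; zk_mode and zk_is_serving are hypothetical helper names, and the output is read with 2>&1 on the assumption that zkServer.sh may write some of its messages to stderr.

```shell
# Minimal sketch: read "sh zkServer.sh status 2>&1" output on stdin and print the
# node's role (leader, follower, or standalone). Fails if no "Mode:" line is found.
zk_mode() {
    awk -F': *' '/^Mode:/ { print $2; found = 1 } END { exit !found }'
}

# Succeed only if the node reports one of the serving roles.
zk_is_serving() {
    case "$(zk_mode)" in
        leader|follower|standalone) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, sh zkServer.sh status 2>&1 | zk_mode prints the role of the local node. Across a healthy ensemble, exactly one node should report leader.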

Start bcm-zk

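- Log in as globalcacheop.

- Go to the /opt/apache-zookeeper-3.6.3-bin-bcm/bin directory.

cd /opt/apache-zookeeper-3.6.3-bin-bcm/bin

- Start zkServer.

sh zkServer.sh start

- Query the zkServer status.

sh zkServer.sh status

In cluster mode, the last line of the output shows the node's role: ZooKeeper's leader election picks one leader, and the remaining nodes become followers. A single-server deployment reports Mode: standalone instead.

Mode: follower / Mode: leader (cluster mode)

To stop the ZooKeeper server, run:

sh zkServer.sh stop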
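Because the ccm-zk and bcm-zk instances differ only in their install directory, both sets of steps above can be driven by one function. This is a minimal sketch to run as globalcacheop; the two paths are the ones used in this guide, and zk_ctl and zk_ctl_all are hypothetical helpers.

```shell
# Minimal sketch: drive either ZooKeeper instance by its install directory.
# The two paths are the ones used in this guide; zk_ctl and zk_ctl_all are hypothetical helpers.
CCM_ZK_HOME=/opt/apache-zookeeper-3.6.3-bin
BCM_ZK_HOME=/opt/apache-zookeeper-3.6.3-bin-bcm

# Usage: zk_ctl <zk_home> <start|status|stop>
zk_ctl() {
    local zk_home="$1" action="$2"
    case "$action" in
        start|status|stop) ;;
        *) echo "zk_ctl: unsupported action '$action'" >&2; return 2 ;;
    esac
    if [ ! -f "$zk_home/bin/zkServer.sh" ]; then
        echo "zk_ctl: $zk_home/bin/zkServer.sh not found" >&2
        return 1
    fi
    # A subshell keeps the caller's working directory unchanged.
    (cd "$zk_home/bin" && sh zkServer.sh "$action")
}

# Run the same action on the ccm and bcm instances, stopping at the first failure.
zk_ctl_all() {
    local home
    for home in "$CCM_ZK_HOME" "$BCM_ZK_HOME"; do
        zk_ctl "$home" "$1" || return
    done
}
```

For example, zk_ctl_all start starts both instances, and zk_ctl "$BCM_ZK_HOME" status checks only bcm-zk.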

Clean up ZooKeeper

- Log in as globalcacheop.

- For ccm-zk, create zk_clean.sh with the following content.


ZK_CLI_PATH="/opt/apache-zookeeper-3.6.3-bin/bin/zkCli.sh"
echo 'deleteall /ccdb' >> ./zk_clear.txt
echo 'deleteall /ccm_cluster' >> ./zk_clear.txt
echo 'deleteall /pool' >> ./zk_clear.txt
echo 'deleteall /pt_view' >> ./zk_clear.txt
echo 'deleteall /alarm' >> ./zk_clear.txt
echo 'deleteall /snapshot_manager' >> ./zk_clear.txt
echo 'deleteall /ccm_clusternet_link' >> ./zk_clear.txt
echo 'deleteall /tls' >> ./zk_clear.txt
echo 'ls /' >> ./zk_clear.txt
echo 'quit' >> ./zk_clear.txt
cat < ./zk_clear.txt | sh ${ZK_CLI_PATH}
echo > ./zk_clear.txt
rm -rf ./zk_clear.txt

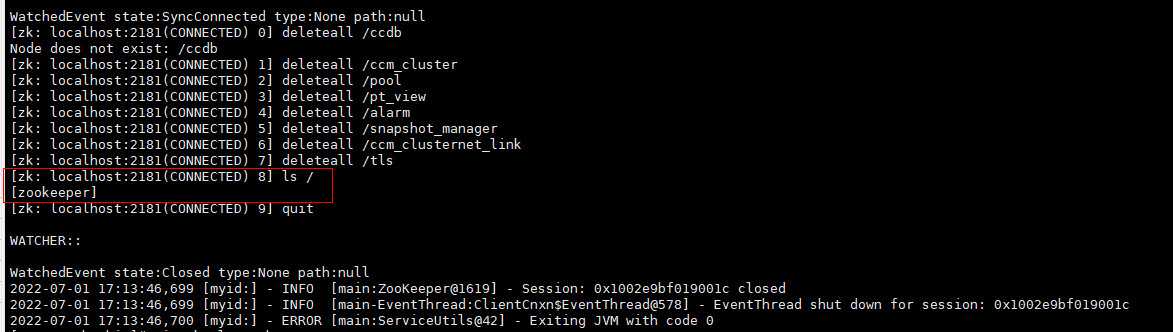

- Run zk_clean.sh against ccm-zk.

sh zk_clean.sh

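- Check that ZooKeeper has been cleaned up. The cleanup succeeded if the bracketed list printed by ls / contains only zookeeper.

- For bcm-zk, create bcm_zk_clear.sh with the following content.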


ZK_CLI_PATH="/opt/apache-zookeeper-3.6.3-bin-bcm/bin/zkCli.sh -server localhost:2182"
echo 'deleteall /bcm_cluster' >> ./zk_clear.txt
echo 'ls /' >> ./zk_clear.txt
echo 'quit' >> ./zk_clear.txt
cat < ./zk_clear.txt | sh ${ZK_CLI_PATH}
echo > ./zk_clear.txt
rm -rf ./zk_clear.txt

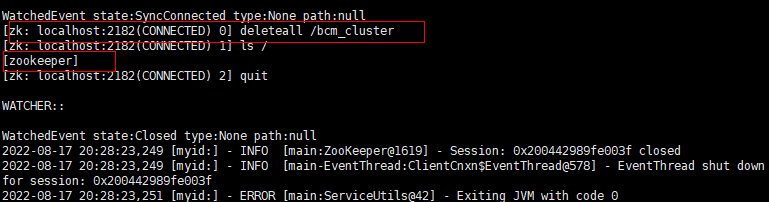

- Run bcm_zk_clear.sh against bcm-zk.

sh bcm_zk_clear.sh

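- Check that ZooKeeper has been cleaned up. The cleanup succeeded if the bracketed list printed by ls / contains only zookeeper.

Make sure ZooKeeper has been fully cleaned up before starting GlobalCache; otherwise, unexpected errors may occur.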
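The two cleanup scripts above follow the same pattern, and the success check can be automated as well. This is a minimal sketch, assuming zkCli.sh reads commands from stdin as in those scripts and prints the result of ls / on its own line as a bracketed list; the exact non-interactive output of zkCli may differ between versions, and zk_clear_commands and zk_is_clean are hypothetical helper names.

```shell
# Minimal sketch: a reusable form of the two cleanup scripts plus the "is it clean?" check.
# Assumptions: zkCli.sh reads commands from stdin as in the scripts above, and the
# "ls /" result appears on its own line as a bracketed list such as "[zookeeper]".
# zk_clear_commands and zk_is_clean are hypothetical helper names.

# Print zkCli commands that delete each given znode, then list / and quit.
zk_clear_commands() {
    local znode
    for znode in "$@"; do
        printf 'deleteall %s\n' "$znode"
    done
    printf 'ls /\nquit\n'
}

# Read zkCli output on stdin and succeed only if the last bracketed list is
# exactly [zookeeper]. Prompt lines that start with "[zk:" are ignored.
zk_is_clean() {
    local last
    last=$(grep -v '^\[zk:' | grep -E '^\[[^]]*\][[:space:]]*$' | tail -n 1 | tr -d '[:space:]')
    [ "$last" = "[zookeeper]" ]
}
```

For example, for bcm-zk: zk_clear_commands /bcm_cluster | sh /opt/apache-zookeeper-3.6.3-bin-bcm/bin/zkCli.sh -server localhost:2182 2>&1 | zk_is_clean && echo clean. For ccm-zk, pass the eight znodes listed in zk_clean.sh instead.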

Start GlobalCache

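- After the server and client have been installed and deployed, log in as globalcacheop and start the server on all server nodes.

- Each server node needs at least 180 GB of memory.

- To keep the buffer/cache used for server log flushing from taking up too much memory and free memory from going unreclaimed for long periods, it is recommended to uninstall the openEuler-performance optimization package and adjust the kernel configuration: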

- echo 20971520 > /proc/sys/vm/min_free_kbytes

- For other tuning items, refer to the Kunpeng BoostKit Distributed Storage Tuning Guide.

sudo systemctl start GlobalCache.target
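The memory and kernel prerequisites above can be checked before starting the service. This is a minimal sketch, assuming "180G" means 180 GiB; MemTotal in /proc/meminfo is reported in KiB, so the threshold is 180 × 1024 × 1024 = 188743680 KiB, and 20971520 KiB for min_free_kbytes is 20 GiB. The function names are hypothetical.

```shell
# Minimal sketch: pre-start checks for a GlobalCache server node.
# Assumptions: "180G" is read as 180 GiB, and MemTotal in /proc/meminfo is in KiB,
# so the threshold is 180 * 1024 * 1024 = 188743680 KiB. Function names are hypothetical.
GC_MIN_MEM_KB=$((180 * 1024 * 1024))
# Value from the step above: 20971520 KiB = 20 GiB kept free by the kernel.
GC_MIN_FREE_KB=20971520

# Usage: check_mem_total [meminfo_file]
check_mem_total() {
    local meminfo="${1:-/proc/meminfo}" total
    total=$(awk '/^MemTotal:/ { print $2 }' "$meminfo")
    if [ -z "$total" ] || [ "$total" -lt "$GC_MIN_MEM_KB" ]; then
        echo "MemTotal ${total:-unknown} kB is below $GC_MIN_MEM_KB kB" >&2
        return 1
    fi
}

# Usage: check_min_free_kbytes [proc_file]
check_min_free_kbytes() {
    local file="${1:-/proc/sys/vm/min_free_kbytes}" value
    value=$(cat "$file")
    if [ "$value" -lt "$GC_MIN_FREE_KB" ]; then
        echo "vm.min_free_kbytes is $value, expected at least $GC_MIN_FREE_KB" >&2
        return 1
    fi
}
```

MemTotal is slightly lower than the installed RAM because the kernel reserves some memory, so a node right at the limit may fail this check. Writing to /proc/sys/vm/min_free_kbytes does not survive a reboot; to keep the value, vm.min_free_kbytes = 20971520 can be placed in a file under /etc/sysctl.d/ and loaded with sysctl --system.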

- Open a new terminal on the server and check the usage of each pool.


export LD_LIBRARY_PATH="/opt/gcache/lib"
cd /opt/gcache/bin
./bdm_df

Check whether the usage of each pool changes as expected.
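The export in the step above changes LD_LIBRARY_PATH for the rest of the shell session. This is a minimal sketch that scopes it to the single bdm_df call instead, assuming the /opt/gcache/bin and /opt/gcache/lib layout shown above; gc_bdm_df is a hypothetical helper.

```shell
# Minimal sketch: run bdm_df without exporting LD_LIBRARY_PATH into the whole shell.
# Assumption: the install layout is <gcache_home>/bin and <gcache_home>/lib, as above.
# Usage: gc_bdm_df [gcache_home]
gc_bdm_df() {
    local home="${1:-/opt/gcache}"
    if [ ! -x "$home/bin/bdm_df" ]; then
        echo "gc_bdm_df: $home/bin/bdm_df not found or not executable" >&2
        return 1
    fi
    # The assignment applies only to this one command, inside a subshell.
    (cd "$home/bin" && LD_LIBRARY_PATH="$home/lib" ./bdm_df)
}
```

To see how pool usage changes, save the output before and after a test, for example gc_bdm_df > before.txt, then later gc_bdm_df > after.txt and diff before.txt after.txt.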

Verify GlobalCache

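- On the client, issue I/O with rbd bench and fio to check that the server and the client can read and write normally, and to observe performance.

- Apply the environment variables.

source /etc/profile

- Create a pool from the Ceph client.

ceph osd pool create rbd 128

- Run ceph df to find the pool ID.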

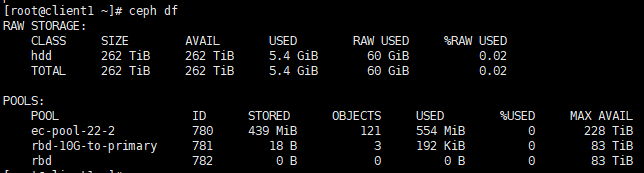

The ceph df output is shown below. In this example, the ID of the rbd pool is 782.

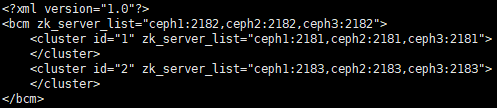

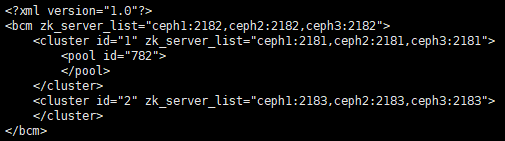

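- Update bcm.xml with the ID of the rbd pool, then re-run the import with bcm_tool.

The figure below shows bcm.xml after the update. It only illustrates an incremental pool update; for details, refer to the bcmtool usage documentation.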

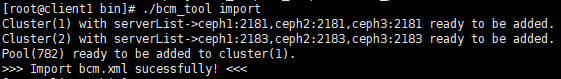

Run the import:


cd /opt/gcache_adaptor_compile/third_part/bin/
./bcmtool_c import

The figure below shows the messages printed on a successful import.
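The pool ID written into bcm.xml above can also be looked up by name rather than read off the ceph df table. This is a minimal sketch that assumes the output format of ceph osd pool ls detail, where each pool line begins with pool, the numeric ID, and the quoted pool name; check this against your Ceph release. pool_id_by_name is a hypothetical helper.

```shell
# Minimal sketch: print the numeric ID of a pool, given its name.
# Assumption: input is "ceph osd pool ls detail" output, whose pool lines look like
#   pool 782 'rbd' replicated size 3 ...
# Usage: ceph osd pool ls detail | pool_id_by_name rbd
pool_id_by_name() {
    awk -v name="'$1'" '$1 == "pool" && $3 == name { print $2; found = 1 } END { exit !found }'
}
```

Comparing this value with the one entered in bcm.xml before running ./bcmtool_c import helps catch a mistyped pool ID.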

- Create an image from the Ceph client.

rbd create foo --size 1G --pool rbd --image-format 2 --image-feature layering

- Run an I/O test.

rbd bench --io-type rw --io-pattern rand --io-total 4K --io-size 4K rbd/foo

- Use fio to perform read/write operations.


fio -name=test -ioengine=rbd -clientname=admin -pool=rbd -rbdname=foo -direct=1 -size=8K -bs=4K -rw=write --verify_pattern=0x12345678
fio -name=test -ioengine=rbd -clientname=admin -pool=rbd -rbdname=foo -direct=1 -size=4K -bs=4K -rw=write --verify_pattern=0x8888888 -offset=4K

- Check the Ceph pool status and the image information.


ceph df
rbd -p rbd --image foo info

- Apply the environment variables.

- On the server, check that the data has been written to Global Cache.


cd /opt/gcache/bin
./bdm_df
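The two fio commands in the steps above differ only in size, offset, and verification pattern. As a minimal sketch, the same parameters can be kept in fio job files, where each command-line option becomes a key=value line under a [jobname] section. write_fio_job is a hypothetical helper, and it writes only options already used in those commands.

```shell
# Minimal sketch: write one of the fio commands above as a job file.
# write_fio_job is a hypothetical helper; the keys mirror the command-line options used above.
# Usage: write_fio_job <file> <size> <offset> <verify_pattern>
write_fio_job() {
    cat > "$1" <<EOF
[test]
ioengine=rbd
clientname=admin
pool=rbd
rbdname=foo
direct=1
size=$2
offset=$3
bs=4K
rw=write
verify_pattern=$4
EOF
}
```

For example, write_fio_job job1.fio 8K 0 0x12345678 and write_fio_job job2.fio 4K 4K 0x8888888 reproduce the two commands; run fio job1.fio, then fio job2.fio.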