Purpose

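Letting the irqbalance daemon distribute NIC interrupts across all cores is one option. Pinning the interrupts to fixed cores manually instead can noticeably improve how fast services send and receive network packets.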

Procedure

- Disable irqbalance.

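Disable irqbalance before binding NIC interrupts to cores manually. If it keeps running, it may overwrite the manually configured affinity.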

- Stop the irqbalance service. This lasts only until the next reboot.

```shell
systemctl stop irqbalance.service
```

- Disable the irqbalance service so that it does not start at boot. This setting persists across reboots.

```shell
systemctl disable irqbalance.service
```

- Check that the irqbalance service is stopped.

```shell
systemctl status irqbalance.service
```


- Create the interrupt binding script.

- Create a script file named bind_irq.sh.

```shell
vi bind_irq.sh
```

- Press "i" to enter insert mode and add the following shell script to the file.

```shell
#!/bin/bash
# Usage: bash bind_irq.sh <interface> [cpu_list] [queue_count]
# Must be run as root, because it changes NIC channels and writes to /proc/irq.
# set -x
net_name=$1
irq_cores=$2
irq_cnt=$3
cpu_cores=""
numa_nodes=""
bus_info=""
core_num_per_node=""
net_numa=""
irq_id_array=()
irq_core_list=()
irq_core_list_sz=""

function get_info() {
    if [[ -z ${net_name} ]]; then
        echo "please specify the network interface parameter"
        exit 1
    fi
    # The first "Combined:" line of ethtool -l is the pre-set maximum
    max_irq_cnt=$(ethtool -l "${net_name}" | grep "^Combined:" | head -n1 | awk '{print $2}')
    if [[ -z ${max_irq_cnt} ]]; then
        echo "get combined number failed"
        exit 1
    fi
    if [[ -z ${irq_cnt} ]]; then
        irq_cnt=${max_irq_cnt}
    elif [[ ${irq_cnt} -gt ${max_irq_cnt} ]]; then
        echo "the max combined is ${max_irq_cnt}, ${irq_cnt} is too large"
        exit 1
    fi
    cpu_cores=$(nproc)
    # Matches both English and Chinese lscpu output
    numa_nodes=$(lscpu | grep "NUMA node(s):\|NUMA 节点:" | awk '{print $3}')
    bus_info=$(ethtool -i "${net_name}" | grep "^bus-info:" | awk '{print $2}')
    ((core_num_per_node = cpu_cores / numa_nodes))
    net_numa=$(lspci -vvvs "${bus_info}" | grep "NUMA node:" | awk '{print $3}')
    # Assumption: fall back to node 0 if the NIC reports no NUMA node
    if [[ -z ${net_numa} || ${net_numa} -lt 0 ]]; then
        net_numa=0
    fi
    echo "cpu cores: ${cpu_cores}"
    echo "cpu numa nodes: ${numa_nodes}"
    echo "cpu cores per numa: ${core_num_per_node}"
    echo "network interface: ${net_name}"
    echo "combined: ${irq_cnt}"
    echo "businfo: ${bus_info}"
    echo "network numa: ${net_numa}"
}

# Expand a list such as "18-31,60-63" into irq_core_list
function parse_cpu_list() {
    IFS_bak=$IFS
    IFS=','
    cpurange=($1)
    IFS=${IFS_bak}
    irq_core_list=()
    n=0
    for i in "${cpurange[@]}"; do
        start=$(echo "$i" | awk -F'-' '{print $1}')
        stop=$(echo "$i" | awk -F'-' '{print $NF}')
        for x in $(seq "$start" "$stop"); do
            irq_core_list[$n]=$x
            n=$((n + 1))
        done
    done
}

function get_irq_cores_list() {
    if [[ -n ${irq_cores} ]]; then
        parse_cpu_list "${irq_cores}"
    elif [[ ${irq_cnt} -ge ${core_num_per_node} ]]; then
        # Enough queues for the whole node: use every core of the NIC's NUMA node
        ((cpu_start = core_num_per_node * net_numa))
        ((cpu_end = cpu_start + core_num_per_node - 1))
        irq_cores="${cpu_start}-${cpu_end}"
        parse_cpu_list "${irq_cores}"
    else
        # Fewer queues than cores: use the last irq_cnt cores of the NIC's NUMA node
        ((cpu_end = core_num_per_node * net_numa + core_num_per_node - 1))
        ((cpu_start = cpu_end - irq_cnt + 1))
        irq_cores="${cpu_start}-${cpu_end}"
        parse_cpu_list "${irq_cores}"
    fi
    echo "irq cpu cores: ${irq_core_list[*]}"
    irq_core_list_sz=${#irq_core_list[@]}
}

function set_irq() {
    # Set the number of NIC queues (combined channels) to the requested value;
    # if none was given, irq_cnt already holds the maximum
    ethtool -L "${net_name}" combined "${irq_cnt}"
    # Get the IRQ numbers of the NIC. If the driver names its IRQs after the
    # PCI address rather than the interface, use the bus_info match instead:
    # irq_ids=`cat /proc/interrupts| grep -E ${bus_info} | head -n$irq_cnt | awk -F ':' '{print $1}'`
    irq_ids=$(grep "${net_name}" /proc/interrupts | awk -F ':' '{print $1}')
    irq_ids=$(echo ${irq_ids})
    echo "irq ids: ${irq_ids}"
    # Convert the irq_ids string into the irq_id_array array
    IFS=' ' read -r -a irq_id_array <<< "${irq_ids}"
}

function bind_irq() {
    for ((i = 0; i < irq_cnt; i++)); do
        irq=${irq_id_array[i]}
        # Stop if the NIC exposes fewer IRQs than queues
        [[ -z ${irq} ]] && break
        # Assign queues to cores round-robin
        core=${irq_core_list[$((i % irq_core_list_sz))]}
        echo "${i}: ${irq} -> ${core}"
        echo "${core}" > "/proc/irq/${irq}/smp_affinity_list"
    done
}

get_info
get_irq_cores_list
set_irq
bind_irq
```

- Press "Esc", type :wq!, and press "Enter" to save and exit.

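The sketch below reproduces the arithmetic that get_info and get_irq_cores_list use to choose default cores. It has no hardware access, so the logic can be checked anywhere. The function names max_combined and default_irq_cores are hypothetical. Like the original script, the sketch assumes two things. First, CPUs are numbered contiguously per NUMA node, so node n owns cores n*per_node to n*per_node+per_node-1. Second, ethtool -l prints its "Pre-set maximums" section before "Current hardware settings".

```shell
#!/bin/bash
# Sketch only: mirrors the default-range logic of bind_irq.sh without touching hardware.

# Read `ethtool -l <nic>` output on stdin and print the maximum Combined value.
# The first "Combined:" line belongs to the "Pre-set maximums" section.
max_combined() {
    grep "^Combined:" | head -n1 | awk '{print $2}'
}

# default_irq_cores TOTAL_CORES NUMA_NODES NIC_NUMA IRQ_CNT
# Prints the CPU range bind_irq.sh picks when no core list is given.
default_irq_cores() {
    local total=$1 nodes=$2 nic_numa=$3 irq_cnt=$4
    local per_node=$(( total / nodes ))
    local start end
    if (( irq_cnt >= per_node )); then
        # Enough queues to cover the whole node: use every core on it.
        start=$(( per_node * nic_numa ))
        end=$(( start + per_node - 1 ))
    else
        # Fewer queues than cores: use the last IRQ_CNT cores of the node.
        end=$(( per_node * nic_numa + per_node - 1 ))
        start=$(( end - irq_cnt + 1 ))
    fi
    echo "${start}-${end}"
}
```

Take a hypothetical server with 128 cores in 4 NUMA nodes (32 cores per node) and the NIC on node 2. On that server, default_irq_cores 128 4 2 16 prints 80-95 and default_irq_cores 128 4 2 48 prints 64-95.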

- Run bind_irq.sh to bind the interrupts of the specified NIC to CPU cores.

The script supports the following three usages:

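- (Recommended) Method 1: Bind the NIC's interrupts to the NUMA node where the NIC resides, and set the number of NIC queues to the maximum. In this example, the NIC is named enp131s0.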

You can find the NIC name with the ifconfig command.

```shell
bash bind_irq.sh enp131s0
```

- Method 2: Bind the NIC's interrupts to a specified range of CPU cores, and set the number of NIC queues to the maximum.

For example, bind the interrupts of NIC enp131s0 to the CPUs in the range "18-31,60-63,92-95,124-127".

You can check the range of available CPU numbers with the lscpu command.

```shell
bash bind_irq.sh enp131s0 "18-31,60-63,92-95,124-127"
```

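- Method 3: Bind the NIC's interrupts to a specified range of CPU cores, and set the number of NIC queues to a specified value. The value must be less than or equal to the NIC's maximum number of queues.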

Run ethtool -l enp131s0 to view the maximum number of queues of enp131s0 (the Combined value under "Pre-set maximums").

```shell
bash bind_irq.sh enp131s0 "18-31,60-63,92-95,124-127" 32
```

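The sketch below mirrors parse_cpu_list and the round-robin loop in bind_irq. It lets you preview the queue-to-core mapping of Method 2 or Method 3 before anything is written to /proc/irq. The helper names expand_cpu_list and plan_bindings are hypothetical. The code needs bash 4 or later for mapfile.

```shell
#!/bin/bash
# Sketch only: previews the queue-to-core mapping that bind_irq.sh would apply.

# expand_cpu_list LIST -> prints one CPU number per line.
# Accepts comma-separated single CPUs ("5") and ranges ("18-31").
expand_cpu_list() {
    local item start stop
    local IFS=','
    for item in $1; do
        start=${item%%-*}   # text before the first '-'
        stop=${item##*-}    # text after the last '-' (equals start for a single CPU)
        seq "$start" "$stop"
    done
}

# plan_bindings LIST QUEUES -> prints "queue: core" pairs.
# Queue i goes to core[i % number_of_cores], so queues wrap around
# when there are more queues than listed cores.
plan_bindings() {
    local -a cores
    mapfile -t cores < <(expand_cpu_list "$1")
    local n=${#cores[@]} i
    (( n > 0 )) || return 1
    for (( i = 0; i < $2; i++ )); do
        echo "${i}: ${cores[i % n]}"
    done
}
```

For example, plan_bindings "18-31,60-63,92-95,124-127" 32 prints 32 lines. That range contains only 26 cores, so queues 26 to 31 wrap around to cores 18 to 23, which already serve queues 0 to 5. To give every queue its own core, choose a queue count no larger than the number of listed cores.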

- Create the irqCheck.sh script.

- Create a script file named irqCheck.sh.

```shell
vi irqCheck.sh
```

- Press "i" to enter insert mode and add the following content to the file.


```shell
#!/bin/bash
# Usage: sh irqCheck.sh <interface>
# NIC name
intf=$1
if [ -z "${intf}" ]; then
    echo "usage: $0 <interface>"
    exit 1
fi
# IRQ numbers of the NIC. With combined queues, RX and TX share one IRQ per
# queue, so a single list covers both directions.
irqList=$(grep "${intf}" /proc/interrupts | awk -F':' '{print $1}')
# Print the CPU(s) each IRQ is currently bound to (read-only check)
for irq in ${irqList}
do
    echo "${irq}: $(cat /proc/irq/${irq}/smp_affinity_list)"
done
```

- Press "Esc", type :wq!, and press "Enter" to save and exit.


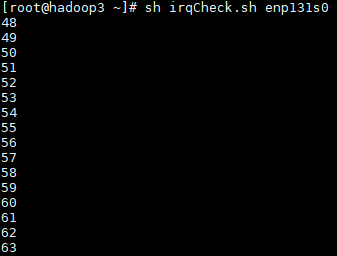
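bind_irq.sh writes a single core number into each IRQ's smp_affinity_list. A correctly bound IRQ should therefore report exactly one CPU, and that CPU should fall inside the core list that was used. The sketch below automates that judgement. The names in_cpu_list and affinity_ok are hypothetical, and the interface name and core list in the usage comment are only the examples from this guide.

```shell
#!/bin/bash
# Sketch only: decide whether one IRQ's affinity looks correctly bound.

# in_cpu_list CPU LIST -> exit status 0 if CPU is covered by LIST ("18-31,60-63").
in_cpu_list() {
    local cpu=$1 item start stop
    local IFS=','
    for item in $2; do
        start=${item%%-*}
        stop=${item##*-}
        if (( cpu >= start && cpu <= stop )); then
            return 0
        fi
    done
    return 1
}

# affinity_ok EXPECTED_LIST AFFINITY
# AFFINITY is the content of /proc/irq/<N>/smp_affinity_list.
# Succeeds only if the IRQ is pinned to exactly one CPU inside EXPECTED_LIST.
affinity_ok() {
    local expected=$1 affinity=$2
    # A single CPU is all digits; "0-127" or "1,3" means the IRQ is not pinned.
    [[ $affinity =~ ^[0-9]+$ ]] || return 1
    in_cpu_list "$affinity" "$expected"
}

# Example use on the target host (interface name and core list are examples):
# for irq in $(grep enp131s0 /proc/interrupts | awk -F':' '{print $1}'); do
#     aff=$(cat "/proc/irq/${irq}/smp_affinity_list")
#     if affinity_ok "18-31,60-63,92-95,124-127" "${aff}"; then
#         echo "${irq}: ${aff} OK"
#     else
#         echo "${irq}: ${aff} NOT BOUND"
#     fi
# done
```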

- Run the script to check whether the interrupts are bound successfully.

```shell
sh irqCheck.sh enp131s0
```

Example of expected output: