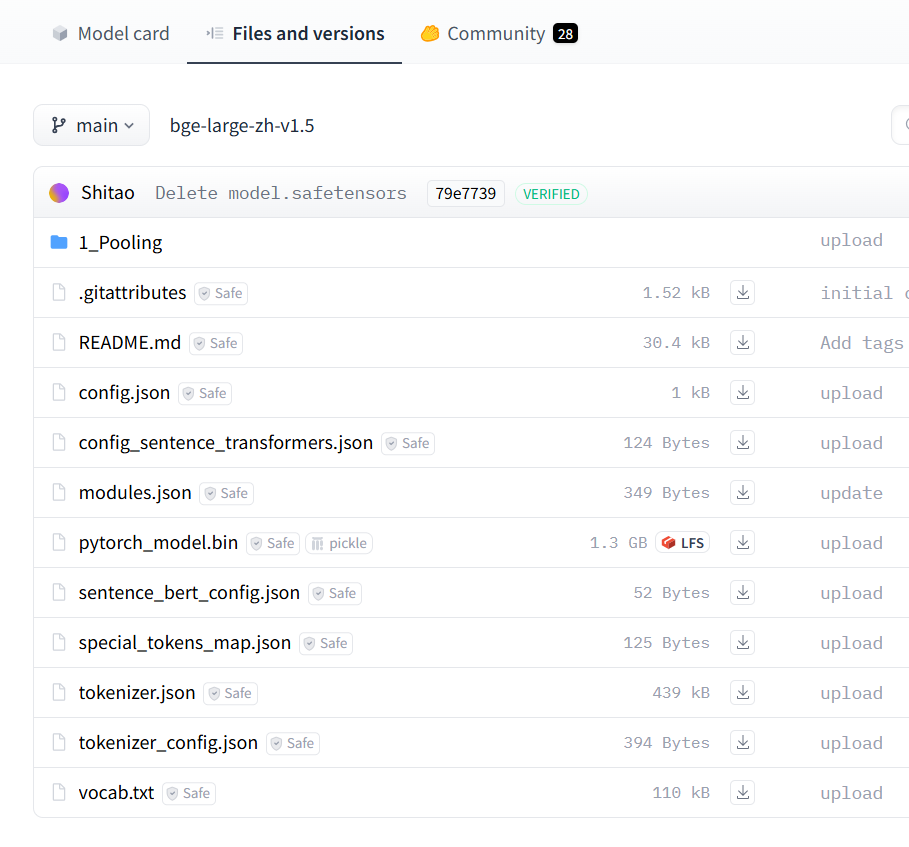

- Download the Embedding model.

Get the required model from the HuggingFace website: https://huggingface.co/BAAI/bge-large-zh-v1.5/tree/main.

Taking BAAI/bge-large-zh-v1.5 as an example, download all files listed under "Files and versions".
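Before mounting the downloaded directory into the container, it can help to confirm that the download is complete. The sketch below is a minimal check, assuming the model was saved to /root/bge-large-zh-v1.5 (the host path used in the docker run command in this guide). The function name check_model_dir and the file list in REQUIRED_FILES are illustrative assumptions, not an official manifest; adjust the list to what "Files and versions" actually shows.

```shell
# Illustrative list of files expected in the model directory.
# This is an assumption for the sketch, not an official manifest.
REQUIRED_FILES="config.json tokenizer.json vocab.txt"

# Return 0 if every expected file exists and is non-empty, 1 otherwise.
check_model_dir() {
  dir="$1"
  missing=0
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  for f in $REQUIRED_FILES; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A possible call is `check_model_dir /root/bge-large-zh-v1.5 && echo "model directory looks complete"`.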

- Download the mis-tei image.

Go to https://www.hiascend.com/developer/ascendhub/detail/07a016975cc341f3a5ae131f2b52399d and download the image that matches your NPU type.

- Run the container.

docker run -u root -e ASCEND_VISIBLE_DEVICES=0 -itd --name=tei --net=host -e HOME=/home/HwHiAiUser -e TEI_NPU_DEVICE=0 --privileged=true -v /root/bge-large-zh-v1.5:/home/HwHiAiUser/model/bge-large-zh-v1.5 -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi -v /usr/local/Ascend/driver:/usr/local/Ascend/driver --entrypoint /home/HwHiAiUser/start.sh swr.cn-south-1.myhuaweicloud.com/ascendhub/mis-tei:6.0.0-300I-Duo-aarch64 BAAI/bge-large-zh-v1.5 ip port

In this command, -e TEI_NPU_DEVICE=0 is optional, and --entrypoint /home/HwHiAiUser/start.sh is optional and usually omitted.

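- --name=tei: specifies the container name.

The following parameters can be configured to suit your environment:

- -e ASCEND_VISIBLE_DEVICES=0: selects the NPU the container runs on; here, the NPU with npu_id = 0.

- -e TEI_NPU_DEVICE=0: selects the NPU the service runs on; here, the NPU with npu_id = 0.

- -v /root/bge-large-zh-v1.5:/home/HwHiAiUser/model/bge-large-zh-v1.5: maps the model directory on the host to the model location in the container (host path:container path). Note that the bge-large-zh-v1.5 folder must exist inside the container. Otherwise the model is downloaded online, which may cause the service in the container to fail to start.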

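- swr.cn-south-1.myhuaweicloud.com/ascendhub/mis-tei:6.0.0-300I-Duo-aarch64 BAAI/bge-large-zh-v1.5 ip port: the image, followed by the arguments passed to it.

  - swr.cn-south-1.myhuaweicloud.com/ascendhub/mis-tei:6.0.0-300I-Duo-aarch64: the mis-tei image identifier.

  - BAAI/bge-large-zh-v1.5: the model ID obtained from Hugging Face.

  - ip: the IP address the service listens on.

  - port: the port the service listens on. It must not conflict with ports used by other containers.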
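Because several of these values change per machine, one option is to keep them in variables and print the resulting command for review before running it. The following is a minimal sketch, not an official launcher: the variable names, the function tei_run_cmd, and the default listen address 127.0.0.1:8080 are assumptions made for illustration. The defaults otherwise mirror the example command, and --entrypoint is left out since it is usually omitted.

```shell
# Values to adapt. Names and the ip/port defaults are illustrative assumptions.
VISIBLE_DEVICES="${VISIBLE_DEVICES:-0}"   # NPU exposed to the container
TEI_DEVICE="${TEI_DEVICE:-0}"             # NPU used by the service
MODEL_DIR="${MODEL_DIR:-/root/bge-large-zh-v1.5}"
IMAGE="${IMAGE:-swr.cn-south-1.myhuaweicloud.com/ascendhub/mis-tei:6.0.0-300I-Duo-aarch64}"
MODEL_ID="${MODEL_ID:-BAAI/bge-large-zh-v1.5}"
LISTEN_IP="${LISTEN_IP:-127.0.0.1}"       # placeholder, replace with your IP
LISTEN_PORT="${LISTEN_PORT:-8080}"        # placeholder, replace with a free port

# Print the docker run command on one line (dry run; nothing is started).
# Values containing spaces are not quoted in the printed output.
tei_run_cmd() {
  model_name="$(basename "$MODEL_DIR")"
  printf '%s ' docker run -u root -itd --name=tei --net=host \
    -e "ASCEND_VISIBLE_DEVICES=$VISIBLE_DEVICES" \
    -e HOME=/home/HwHiAiUser \
    -e "TEI_NPU_DEVICE=$TEI_DEVICE" \
    --privileged=true \
    -v "$MODEL_DIR:/home/HwHiAiUser/model/$model_name" \
    -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
    -v /usr/local/Ascend/driver:/usr/local/Ascend/driver \
    "$IMAGE" "$MODEL_ID" "$LISTEN_IP" "$LISTEN_PORT"
  echo
}
```

Calling `tei_run_cmd` prints the full command, which can then be checked and pasted into a shell. Deriving the container path from the host directory name keeps the folder name inside the container identical to the one on the host, as the mount note above requires.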

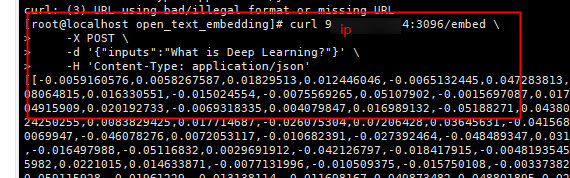

- Test the Embedding model interface from a new terminal window. Replace ip and port with your actual values.

curl ip:port/embed -X POST -d '{"inputs":"What is Deep Learning?"}' -H 'Content-Type: application/json'
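The service may need some time after the container starts before it accepts requests, so a single curl call issued too early can fail even when the deployment is fine. As a minimal sketch, the helper below (wait_for_embed is a hypothetical name, not part of mis-tei) repeats the same POST to /embed once per second until it succeeds or a timeout expires. It assumes the endpoint returns a success status once the model is ready.

```shell
# Poll POST /embed until it answers successfully or roughly `timeout`
# seconds have passed. Returns 0 when the service responds, 1 otherwise.
wait_for_embed() {
  host="$1"
  port="$2"
  timeout="${3:-60}"
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # -s: quiet, -f: treat HTTP errors as failure, --max-time: cap each try
    if curl -sf --max-time 5 -o /dev/null -X POST "http://$host:$port/embed" \
        -H 'Content-Type: application/json' \
        -d '{"inputs":"What is Deep Learning?"}'; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 1
}
```

For example, `wait_for_embed 127.0.0.1 8080 120 && echo "embedding service is up"`, with the address and port replaced by the values passed to the container.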