On ceph1, create the cluster configuration, bootstrap the containerized Ceph cluster, and manage all nodes from it.

- Create the default configuration file, ceph.conf.

- Go to the /home directory and open ceph.conf:

```shell
cd /home
vim ceph.conf
```

- Press "i" to enter insert mode, then add the following content to the file.

```ini
[global]
mon_allow_pool_delete = true
osd_pool_default_size = 3
osd_pool_default_min_size = 2
osd_pg_object_context_cache_count = 256
bluestore_kv_sync_thread_polling = true
bluestore_kv_finalize_thread_polling = true
osd_min_pg_log_entries = 10
osd_max_pg_log_entries = 10
osd_pool_default_pg_autoscale_mode = off
bluestore_cache_size_ssd = 18G
osd_memory_target = 20G # limits OSD memory usage
bluestore_block_db_path = ""
bluestore_block_db_size = 0
bluestore_block_wal_path = ""
bluestore_block_wal_size = 0
bluestore_rocksdb_options = use_direct_reads=true,use_direct_io_for_flush_and_compaction=true,compression=kNoCompression,max_write_buffer_number=128,min_write_buffer_number_to_merge=32,recycle_log_file_num=64,compaction_style=kCompactionStyleLevel,write_buffer_size=4M,target_file_size_base=4M,max_background_compactions=2,level0_file_num_compaction_trigger=64,level0_slowdown_writes_trigger=128,level0_stop_writes_trigger=256,max_bytes_for_level_base=6GB,compaction_threads=2,max_bytes_for_level_multiplier=8,flusher_threads=2
```

- Press Esc to exit insert mode, type :wq! and press Enter to save the file and quit.


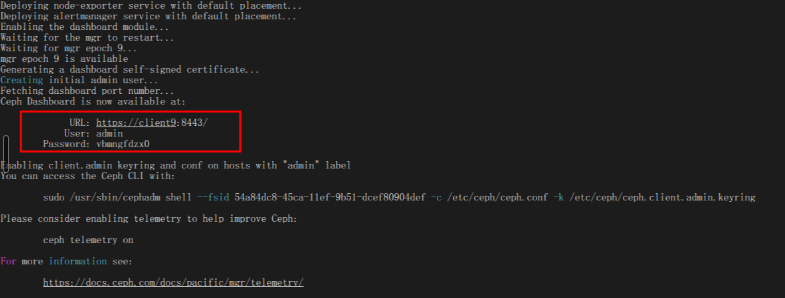
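If you would rather not edit the file interactively, for example when scripting the deployment, the same ceph.conf can be written with a heredoc. This is a minimal sketch that assumes bash. The function name write_ceph_conf is hypothetical, and the settings are copied unchanged from the step above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the ceph.conf from the step above without opening vim.
# Usage: write_ceph_conf [path]   (defaults to /home/ceph.conf)
write_ceph_conf() {
  local path="${1:-/home/ceph.conf}"
  # The quoted 'EOF' delimiter stops the shell from expanding anything in the body.
  cat > "$path" <<'EOF'
[global]
mon_allow_pool_delete = true
osd_pool_default_size = 3
osd_pool_default_min_size = 2
osd_pg_object_context_cache_count = 256
bluestore_kv_sync_thread_polling = true
bluestore_kv_finalize_thread_polling = true
osd_min_pg_log_entries = 10
osd_max_pg_log_entries = 10
osd_pool_default_pg_autoscale_mode = off
bluestore_cache_size_ssd = 18G
osd_memory_target = 20G # limits OSD memory usage
bluestore_block_db_path = ""
bluestore_block_db_size = 0
bluestore_block_wal_path = ""
bluestore_block_wal_size = 0
bluestore_rocksdb_options = use_direct_reads=true,use_direct_io_for_flush_and_compaction=true,compression=kNoCompression,max_write_buffer_number=128,min_write_buffer_number_to_merge=32,recycle_log_file_num=64,compaction_style=kCompactionStyleLevel,write_buffer_size=4M,target_file_size_base=4M,max_background_compactions=2,level0_file_num_compaction_trigger=64,level0_slowdown_writes_trigger=128,level0_stop_writes_trigger=256,max_bytes_for_level_base=6GB,compaction_threads=2,max_bytes_for_level_multiplier=8,flusher_threads=2
EOF
}

# Example: write_ceph_conf /home/ceph.conf
```

Running write_ceph_conf /home/ceph.conf produces the file that the bootstrap step below passes with -c ceph.conf.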

- Bootstrap the Ceph cluster.

```shell
cephadm bootstrap -c ceph.conf --mon-ip 192.168.3.166 --cluster-network 192.168.4.0/24 --skip-monitoring-stack
```

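- --mon-ip: the IP address of this node (ceph1) on the front-end public network. The first monitor is created on this address.

- --cluster-network: the subnet (in CIDR notation) of the back-end cluster network.

- -c ceph.conf: optional. Use it only when you need to override Ceph's default settings.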
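Because --mon-ip belongs to the public network and --cluster-network describes the separate back-end network, a mix-up between the two is easy to make and awkward to undo after bootstrap. The following sketch checks that the monitor IP does not fall inside the cluster subnet before you run cephadm bootstrap. It assumes bash, and ip_to_int, ip_in_cidr, and check_bootstrap_networks are hypothetical helpers, not part of cephadm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
# Returns 1 if the input is not four octets in the range 0-255.
ip_to_int() {
  local IFS=. x
  local -a o
  read -r -a o <<<"$1"
  (( ${#o[@]} == 4 )) || return 1
  for x in "${o[@]}"; do
    [[ $x =~ ^[0-9]{1,3}$ ]] && (( 10#$x <= 255 )) || return 1
  done
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Hypothetical helper: succeed (0) if IP $1 lies inside CIDR $2, 1 if not, 2 on bad input.
ip_in_cidr() {
  local ip_i net_i bits=${2#*/} mask
  [[ $2 == */* && $bits =~ ^[0-9]{1,2}$ ]] && (( 10#$bits <= 32 )) || return 2
  ip_i=$(ip_to_int "$1") || return 2
  net_i=$(ip_to_int "${2%/*}") || return 2
  # Build the netmask, e.g. /24 -> 0xFFFFFF00.
  mask=$(( (0xFFFFFFFF << (32 - 10#$bits)) & 0xFFFFFFFF ))
  (( (ip_i & mask) == (net_i & mask) ))
}

# Hypothetical pre-flight check for the two bootstrap options.
check_bootstrap_networks() {
  local mon_ip=$1 cluster_net=$2
  ip_in_cidr "$mon_ip" "$cluster_net"
  case $? in
    0) echo "error: --mon-ip $mon_ip lies inside --cluster-network $cluster_net" >&2
       return 1 ;;
    1) echo "ok: $mon_ip is outside $cluster_net" ;;
    *) echo "error: invalid address or subnet: $mon_ip, $cluster_net" >&2
       return 2 ;;
  esac
}

# Example: check_bootstrap_networks 192.168.3.166 192.168.4.0/24
```

With the values used in this guide, the check passes because 192.168.3.166 is outside 192.168.4.0/24.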

- Copy the cluster's public SSH key to the other nodes so that cephadm can manage them.

```shell
ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph2
ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph3
```

- Sync the local container registry configuration to the other nodes.

```shell
scp /etc/containers/registries.conf ceph2:/etc/containers/
scp /etc/containers/registries.conf ceph3:/etc/containers/
```
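When there are more than two additional nodes, the key copy and the registry sync can be run together in a loop. This is a minimal sketch that assumes bash and the same root SSH access used by the commands above. The function prepare_hosts and the DRY_RUN variable are hypothetical names introduced here. With DRY_RUN=1 the commands are only printed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each host, copy the cluster SSH key and the local
# registry configuration, stopping at the first failure.
# Set DRY_RUN=1 to print the commands instead of running them.
prepare_hosts() {
  local host run=()
  [[ ${DRY_RUN:-0} == 1 ]] && run=(echo)
  for host in "$@"; do
    "${run[@]}" ssh-copy-id -f -i /etc/ceph/ceph.pub "root@${host}" || return 1
    "${run[@]}" scp /etc/containers/registries.conf "${host}:/etc/containers/" || return 1
  done
}

# Example: DRY_RUN=1 prepare_hosts ceph2 ceph3   # review the commands first
#          prepare_hosts ceph2 ceph3             # then run them
```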

- Enter the Ceph cluster container.

```shell
cephadm shell
```

- Add the two hosts other than ceph1 to the cluster.

```shell
ceph orch host add ceph2 --labels _admin
ceph orch host add ceph3 --labels _admin
```

After the commands complete, the Ceph services on ceph2 and ceph3 take about 3 to 5 minutes to start. Please wait.

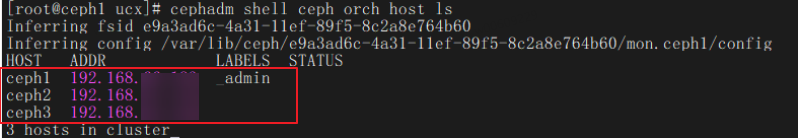
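In a scripted deployment, it may be better to poll until a condition holds than to wait a fixed 3 to 5 minutes. This is a minimal sketch that assumes bash. wait_until is a hypothetical helper, not part of cephadm. The example condition in the final comment also rests on assumptions: it assumes that cephadm places a monitor on ceph2 and that ceph orch ps lists it as mon.ceph2 with a "running" status.

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-run a command every <interval> seconds until it
# succeeds, or give up after <timeout> seconds.
# Usage: wait_until <timeout_s> <interval_s> <command> [args...]
wait_until() {
  local timeout=$1 interval=$2
  shift 2
  local deadline=$(( SECONDS + timeout ))   # SECONDS is bash's built-in elapsed-time counter
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Example (assumed daemon name and status text, inside cephadm shell):
# wait_until 300 15 sh -c 'ceph orch ps | grep -q "^mon\.ceph2 .*running"'
```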

- Check whether the hosts were added successfully.

```shell
ceph orch host ls
```

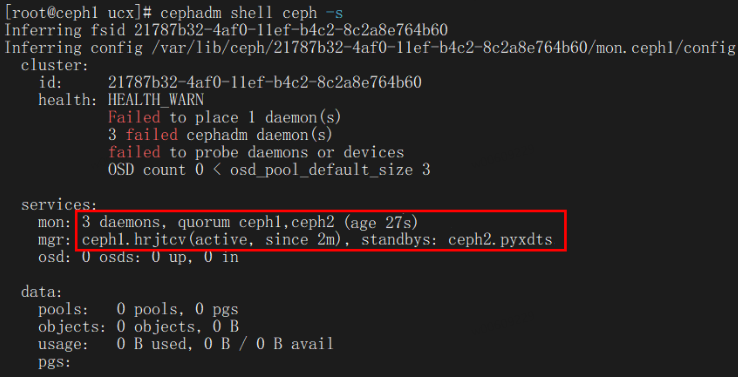
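To check this from a script instead of reading the table, the host column can be compared against the expected host names. This is a sketch that assumes the plain-text output of ceph orch host ls has one header row and the host name in the first column. missing_hosts is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph orch host ls` output on stdin and print every
# expected host that is missing from the first (HOST) column.
# Returns 0 if all hosts are present, 1 otherwise.
missing_hosts() {
  local table host rc=0
  table=$(awk 'NR > 1 { print $1 }')   # skip the header row, keep the first column
  for host in "$@"; do
    if ! grep -qxF -- "$host" <<<"$table"; then
      echo "missing: $host"
      rc=1
    fi
  done
  return "$rc"
}

# Example: ceph orch host ls | missing_hosts ceph1 ceph2 ceph3 && echo "all hosts listed"
```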

- Check the cluster status to confirm that the other two nodes have joined the cluster.

```shell
ceph -s
```
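The monitor line of ceph -s shows whether the new nodes have joined the monitor quorum. The following sketch parses that line. It assumes the services section contains a line of the form "mon: N daemons, quorum host1,host2,...", so check the layout against your Ceph release. quorum_members and check_quorum are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the quorum list from the "mon:" line of `ceph -s`
# (read on stdin) and print one host name per line.
quorum_members() {
  sed -n 's/^[[:space:]]*mon:.*quorum \([^ ]*\).*/\1/p' | tr ',' '\n'
}

# Hypothetical helper: report every expected host that is not in the quorum list.
# Returns 0 if all are present, 1 otherwise.
check_quorum() {
  local members host rc=0
  members=$(quorum_members)
  for host in "$@"; do
    grep -qxF -- "$host" <<<"$members" || { echo "not in quorum: $host"; rc=1; }
  done
  return "$rc"
}

# Example: ceph -s | check_quorum ceph1 ceph2 ceph3
```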