Symptom

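When a Ceph 17.2.8 cluster runs continuously for a long time, OSDs may become unstable: they go down unexpectedly and then recover. A crash report similar to the following is observed.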

    ceph crash info 2025-02-03T09:19:08.749233Z_9e2800fb-77f6-46cb-8087-203ea15a2039
    {
        "assert_condition": "log.t.seq == log.seq_live",
        "assert_file": "/home/jenkins-build/build/workspace/ceph-build/ARCH/x86_64/AVAILABLE_ARCH/x86_64/AVAILABLE_DIST/centos9/DIST/centos9/MACHINE_SIZE/gigantic/release/17.2.8/rpm/el9/BUILD/ceph-17.2.8/src/os/bluestore/BlueFS.cc",
        "assert_func": "uint64_t BlueFS::_log_advance_seq()",
        "assert_line": 3029,
        "assert_msg": "/home/jenkins-build/build/workspace/ceph-build/ARCH/x86_64/AVAILABLE_ARCH/x86_64/AVAILABLE_DIST/centos9/DIST/centos9/MACHINE_SIZE/gigantic/release/17.2.8/rpm/el9/BUILD/ceph-17.2.8/src/os/bluestore/BlueFS.cc: In function 'uint64_t BlueFS::_log_advance_seq()' thread 7ff983564640 time 2025-02-03T09:19:08.738781+0000\n/home/jenkins-build/build/workspace/ceph-build/ARCH/x86_64/AVAILABLE_ARCH/x86_64/AVAILABLE_DIST/centos9/DIST/centos9/MACHINE_SIZE/gigantic/release/17.2.8/rpm/el9/BUILD/ceph-17.2.8/src/os/bluestore/BlueFS.cc: 3029: FAILED ceph_assert(log.t.seq == log.seq_live)\n",
        "assert_thread_name": "bstore_kv_sync",
        "backtrace": [
            "/lib64/libc.so.6(+0x3e730) [0x7ff9930f5730]",
            "/lib64/libc.so.6(+0x8bbdc) [0x7ff993142bdc]",
            "raise()",
            "abort()",
            "(ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x179) [0x55882dfb7fdd]",
            "/usr/bin/ceph-osd(+0x36b13e) [0x55882dfb813e]",
            "/usr/bin/ceph-osd(+0x9cff3b) [0x55882e61cf3b]",
            "(BlueFS::_flush_and_sync_log_jump_D(unsigned long)+0x4e) [0x55882e6291ee]",
            "(BlueFS::_compact_log_async_LD_LNF_D()+0x59b) [0x55882e62e8fb]",
            "/usr/bin/ceph-osd(+0x9f2b15) [0x55882e63fb15]",
            "(BlueFS::fsync(BlueFS::FileWriter*)+0x1b9) [0x55882e631989]",
            "/usr/bin/ceph-osd(+0x9f4889) [0x55882e641889]",
            "/usr/bin/ceph-osd(+0xd74cd5) [0x55882e9c1cd5]",
            "(rocksdb::WritableFileWriter::SyncInternal(bool)+0x483) [0x55882eade393]",
            "(rocksdb::WritableFileWriter::Sync(bool)+0x120) [0x55882eae0b60]",
            "(rocksdb::DBImpl::WriteToWAL(rocksdb::WriteThread::WriteGroup const&, rocksdb::log::Writer*, unsigned long*, bool, bool, unsigned long)+0x337) [0x55882ea00ab7]",
            "(rocksdb::DBImpl::WriteImpl(rocksdb::WriteOptions const&, rocksdb::WriteBatch*, rocksdb::WriteCallback*, unsigned long*, unsigned long, bool, unsigned long*, unsigned long, rocksdb::PreReleaseCallback*)+0x1935) [0x55882ea07675]",
            "(rocksdb::DBImpl::Write(rocksdb::WriteOptions const&, rocksdb::WriteBatch*)+0x35) [0x55882ea077c5]",
            "(RocksDBStore::submit_common(rocksdb::WriteOptions&, std::shared_ptr<KeyValueDB::TransactionImpl>)+0x83) [0x55882e992593]",
            "(RocksDBStore::submit_transaction_sync(std::shared_ptr<KeyValueDB::TransactionImpl>)+0x99) [0x55882e992ee9]",
            "(BlueStore::_kv_sync_thread()+0xf64) [0x55882e578e24]",
            "/usr/bin/ceph-osd(+0x8afb81) [0x55882e4fcb81]",
            "/lib64/libc.so.6(+0x89e92) [0x7ff993140e92]",
            "/lib64/libc.so.6(+0x10ef20) [0x7ff9931c5f20]"
        ],
        "ceph_version": "17.2.8",
        "crash_id": "2025-02-03T09:19:08.749233Z_9e2800fb-77f6-46cb-8087-203ea15a2039",
        "entity_name": "osd.211",
        "os_id": "centos",
        "os_name": "CentOS Stream",
        "os_version": "9",
        "os_version_id": "9",
        "process_name": "ceph-osd",
        "stack_sig": "ba90de24e2beba9c6a75249a4cce7c533987ca5127cfba5b835a3456174d6080",
        "timestamp": "2025-02-03T09:19:08.749233Z",
        "utsname_hostname": "afra-osd18",
        "utsname_machine": "x86_64",
        "utsname_release": "5.15.0-119-generic",
        "utsname_sysname": "Linux",
        "utsname_version": "#129-Ubuntu SMP Fri Aug 2 19:25:20 UTC 2024"
    }

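To check whether a given crash report is the same problem, the two identifying fields of the report above (`assert_condition` and `assert_func`) can be compared programmatically. The following is a minimal sketch, not an official tool: the function name `matches_bluefs_seq_assert` and the trimmed sample report are assumptions introduced here for illustration, and only fields that appear in the report above are used.

```python
import json

# Signature taken from the crash report shown above.
EXPECTED_CONDITION = "log.t.seq == log.seq_live"
EXPECTED_FUNC = "BlueFS::_log_advance_seq"


def matches_bluefs_seq_assert(report: dict) -> bool:
    """Return True if a crash report dict carries the assertion shown above.

    Hypothetical helper: it only compares two fields of the JSON report.
    """
    return (
        report.get("assert_condition") == EXPECTED_CONDITION
        and EXPECTED_FUNC in report.get("assert_func", "")
    )


# Trimmed sample built from fields of the report above (not a full report).
sample = json.loads("""
{
  "assert_condition": "log.t.seq == log.seq_live",
  "assert_func": "uint64_t BlueFS::_log_advance_seq()",
  "ceph_version": "17.2.8",
  "entity_name": "osd.211"
}
""")

if matches_bluefs_seq_assert(sample):
    print(f"{sample['entity_name']} ({sample['ceph_version']}): "
          "matches the BlueFS seq assertion")
```

In practice the dict would come from the JSON printed by `ceph crash info <crash_id>`, for example by saving that output to a file and loading it with `json.load`.
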
Cause

This is a known issue in Ceph 17.2.8. For details, see the Ceph community PR.

Solution

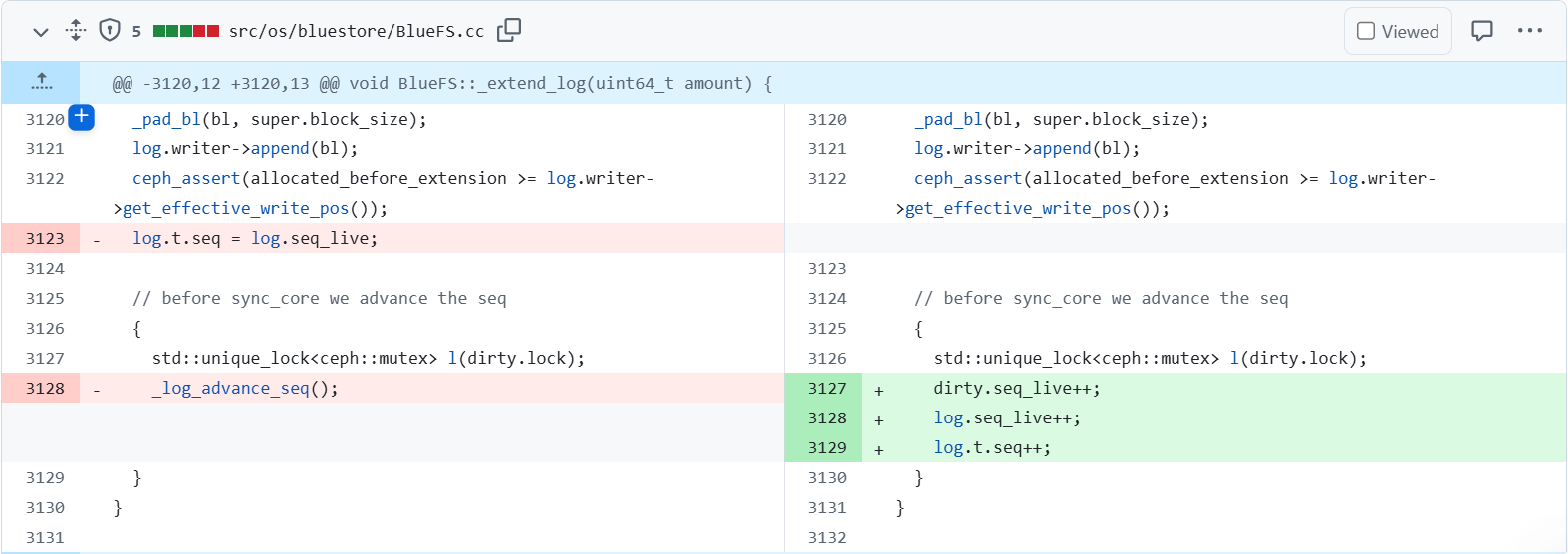

- Manually change lines 3120 to 3132 of the source file src/os/bluestore/BlueFS.cc to the code shown below. Figure 1 compares the modified source with the original source.

        _pad_bl(bl, super.block_size);
        log.writer->append(bl);
        ceph_assert(allocated_before_extension >= log.writer->get_effective_write_pos());
        // before sync_core we advance the seq
        {
          std::unique_lock<ceph::mutex> l(dirty.lock);
          dirty.seq_live++;
          log.seq_live++;
          log.t.seq++;
        }
      }

- Recompile and redeploy Ceph.
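
The patched block in the first step advances `dirty.seq_live`, `log.seq_live`, and `log.t.seq` together while holding `dirty.lock`, and the failed assertion in the crash report checks `log.t.seq == log.seq_live`. The toy model below only illustrates that relationship: counters that always move together under one lock stay equal. It is not Ceph code and does not reproduce the bug; the class `ToyLogState`, its field names, and the starting value of 1 are all assumptions made for this sketch.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class ToyLogState:
    """Hypothetical stand-in for the three counters touched by the patch."""
    dirty_seq_live: int = 1   # models dirty.seq_live (start value is arbitrary)
    log_seq_live: int = 1     # models log.seq_live
    log_t_seq: int = 1        # models log.t.seq
    lock: threading.Lock = field(default_factory=threading.Lock)

    def advance_all(self) -> None:
        # Mirrors the shape of the patched block: one lock, three increments.
        with self.lock:
            self.dirty_seq_live += 1
            self.log_seq_live += 1
            self.log_t_seq += 1

    def invariant_holds(self) -> bool:
        # The condition from the failed ceph_assert in the crash report.
        return self.log_t_seq == self.log_seq_live


def run_workers(state: ToyLogState, workers: int, steps: int) -> None:
    """Advance the counters from several threads at once."""
    def work() -> None:
        for _ in range(steps):
            state.advance_all()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


state = ToyLogState()
run_workers(state, workers=4, steps=1000)
print("invariant holds:", state.invariant_holds())
```
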