Environment Overview

Physical Networking

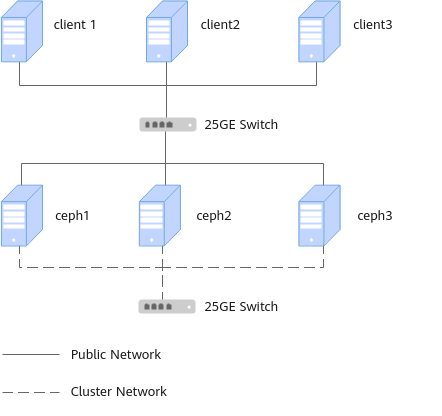

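The physical environment for Ceph block devices uses a "two-layer network + three-node" deployment, in which the MON, MGR, and MDS nodes are co-located with the OSD storage nodes. At the network level, the public network and the cluster network are separated, and both use 25GE optical ports for communication.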
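The separation of the public and cluster networks can be sanity-checked before deployment. The following is a minimal sketch, not part of the original guide: the two subnets are hypothetical placeholders (the actual ranges belong to the IP plan in Table 3), and the function name `networks_are_separate` is made up for illustration. In Ceph these ranges are normally supplied through the `public_network` and `cluster_network` configuration options.

```python
import ipaddress


def networks_are_separate(public_cidr: str, cluster_cidr: str) -> bool:
    """Return True when the two CIDR ranges share no addresses."""
    public = ipaddress.ip_network(public_cidr)
    cluster = ipaddress.ip_network(cluster_cidr)
    return not public.overlaps(cluster)


# Hypothetical subnets used only for illustration; replace them with
# the ranges from the actual IP plan.
EXAMPLE_PUBLIC_NETWORK = "192.168.3.0/24"
EXAMPLE_CLUSTER_NETWORK = "192.168.4.0/24"

separate = networks_are_separate(EXAMPLE_PUBLIC_NETWORK, EXAMPLE_CLUSTER_NETWORK)
```

If the check returns `False`, the two ranges overlap and client traffic would not be isolated from replication traffic in the way this deployment assumes.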

The Ceph cluster consists of

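Hardware Configuration

Table 1 lists the environment used by Ceph.

Software Versions

Table 2 lists the versions of the software used.

Node Information

Table 3 lists the IP network segment plan for the hosts.

Component Deployment

Table 4 shows how the service components of the Ceph block device cluster are deployed.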

Cluster Check

Run ceph health to check the health status of the cluster. If HEALTH_OK is displayed, the cluster is running properly.
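For scripted checks, the same command can be wrapped in a small helper. This is a minimal sketch under stated assumptions: the `ceph` CLI is on the `PATH` of a node with admin credentials, and the function names `is_healthy` and `check_cluster_health` are illustrative, not part of Ceph.

```python
import subprocess


def is_healthy(health_output: str) -> bool:
    """Return True when the first token of `ceph health` output is HEALTH_OK."""
    tokens = health_output.split()
    return bool(tokens) and tokens[0] == "HEALTH_OK"


def check_cluster_health(timeout_s: float = 30.0) -> bool:
    """Run `ceph health` and report whether the cluster is healthy.

    Assumes the `ceph` CLI is installed and can reach the cluster;
    raises an exception if the command fails or times out.
    """
    result = subprocess.run(
        ["ceph", "health"],
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=True,
    )
    return is_healthy(result.stdout)
```

Calling `check_cluster_health()` on an admin node returns `True` only for `HEALTH_OK`; any other status, such as `HEALTH_WARN` or `HEALTH_ERR`, returns `False` and should be investigated before tuning begins.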
