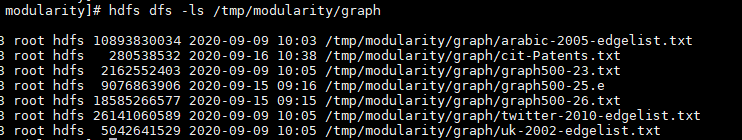

- Create directories in HDFS to store the graph dataset and the community dataset.

```shell
hdfs dfs -mkdir /tmp/modularity
hdfs dfs -mkdir /tmp/modularity/graph
hdfs dfs -mkdir /tmp/modularity/lpa_community
```
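The three directories can also be created with one idempotent call. `hdfs dfs -mkdir -p` creates any missing parent directories and does not fail if a directory already exists, so the step can safely be rerun. The sketch below is one way to do it. The `run` helper, the `DRY_RUN` variable, and `make_modularity_dirs` are hypothetical names. With `DRY_RUN` set, the helper only prints the command, which lets you check the paths on a machine without HDFS.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$@"
  else
    "$@"
  fi
}

# Base HDFS directory used throughout this section.
MODULARITY_DIR=/tmp/modularity

# Create the graph and community directories (and their parent) in one call.
# -p also makes the command succeed if the directories already exist.
make_modularity_dirs() {
  run hdfs dfs -mkdir -p "$MODULARITY_DIR/graph" "$MODULARITY_DIR/lpa_community"
}
```

Call `make_modularity_dirs` to create the directories, or `DRY_RUN=1 make_modularity_dirs` to preview the command.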

- Upload the graph dataset to the `/tmp/modularity/graph` directory in HDFS.

```shell
hdfs dfs -put graph500-25.e /tmp/modularity/graph
```

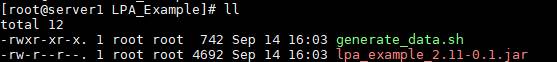
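The launch script below passes a single space (`' '`) as an argument alongside the input file, most likely as the field separator. It can therefore be worth checking the local edge file's format before (re)uploading it. The sketch below makes two assumptions: each line holds one `src dst` pair of numeric vertex IDs, and the two IDs are separated by exactly one space. `check_edge_file` is a hypothetical name.

```shell
# Hypothetical pre-upload check. Assumes one "src dst" pair of numeric
# vertex IDs per line, separated by a single space.
# On success it prints the number of edges; otherwise it reports the first
# malformed line on stderr and returns 1.
check_edge_file() {
  # -F'[ ]' splits on every single space, so tabs or doubled spaces are
  # reported instead of being silently accepted.
  awk -F'[ ]' '
    NF != 2 || $1 !~ /^[0-9]+$/ || $2 !~ /^[0-9]+$/ {
      printf "bad line %d: %s\n", NR, $0 > "/dev/stderr"
      bad = 1
      exit 1
    }
    END { if (!bad) print NR }
  ' "$1"
}
```

For example, run `check_edge_file graph500-25.e`. The check reads the whole file, so expect it to take a while on a graph of this size.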

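- Create the LPA_Example directory and upload the lpa_examples_2.11-0.1.jar package generated in step 10 to it. Then copy the script content below into generate_data.sh in the same directory, because the script refers to the JAR by a relative path. Make sure `test_jar_path` in the script matches the file name of the uploaded JAR.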

```shell
mkdir LPA_Example
cd LPA_Example
vim generate_data.sh
ll    # list the directory (ll is an alias of ls -l on many systems)
```

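generate_data.sh

```shell
#!/bin/bash
# Submit the LPA example to YARN to generate the community dataset.

class="LPA"                                # main class in the JAR
test_jar_path="lpa_example_2.11-0.1.jar"   # must match the uploaded JAR file name

executor_cores=8
num_executors=35
executor_memory=25                         # executor memory in GB ("g" is appended below)
partition=500                              # partition count, passed as the last job argument
java_xms="-Xms25g"                         # initial JVM heap size of each executor

# Print the chosen parameters.
echo $num_executors $executor_cores $executor_memory $partition $java_xms

# Optional preprocessing hook; preprocess is not set in this script, so this does nothing.
eval $preprocess

# Job arguments: input edge file, ' ' (presumably the edge-file field separator),
# output path of the community dataset, partition count.
cmd="spark-submit --class ${class} --master yarn --num-executors ${num_executors} --executor-memory ${executor_memory}g --executor-cores ${executor_cores} --driver-memory 80g --conf spark.executor.extraJavaOptions='${java_xms}' ${test_jar_path} /tmp/modularity/graph/graph500-25.e ' ' /tmp/modularity/lpa_community/graph500-25.txt '${partition}' "

# Uncomment to print the full command before it runs.
#echo ${cmd}

eval ${cmd}
```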
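The `eval` in generate_data.sh works, but it depends on nested quoting to keep `' '` as a single argument. The sketch below builds the same spark-submit call without `eval`. It stores the arguments as positional parameters (`set --`), so every argument, including the single-space one, is passed exactly as written. It also checks that the JAR exists, which catches a name mismatch such as `lpa_example` vs `lpa_examples` before YARN is contacted. `launch_lpa`, `DRY_RUN`, and the upper-case variable names are hypothetical; the values mirror the original script.

```shell
# Hypothetical eval-free launcher; values mirror generate_data.sh.
CLASS=LPA
JAR_PATH=${JAR_PATH:-lpa_example_2.11-0.1.jar}   # must match the real JAR name
NUM_EXECUTORS=35
EXECUTOR_CORES=8
EXECUTOR_MEMORY=25                               # GB
JAVA_XMS=-Xms25g
PARTITION=500
INPUT=/tmp/modularity/graph/graph500-25.e
OUTPUT=/tmp/modularity/lpa_community/graph500-25.txt

launch_lpa() {
  # Fail early if the JAR is not found at JAR_PATH.
  if [ ! -f "$JAR_PATH" ]; then
    echo "JAR not found: $JAR_PATH" >&2
    return 1
  fi
  # Positional parameters keep each argument intact without eval or nested quotes.
  set -- spark-submit --class "$CLASS" --master yarn \
    --num-executors "$NUM_EXECUTORS" \
    --executor-memory "${EXECUTOR_MEMORY}g" \
    --executor-cores "$EXECUTOR_CORES" \
    --driver-memory 80g \
    --conf "spark.executor.extraJavaOptions=$JAVA_XMS" \
    "$JAR_PATH" "$INPUT" " " "$OUTPUT" "$PARTITION"
  if [ -n "${DRY_RUN:-}" ]; then
    printf '[%s]\n' "$@"   # preview: one bracketed argument per line
  else
    "$@"
  fi
}
```

Appending a final `launch_lpa` line turns the sketch into a replacement for generate_data.sh. `DRY_RUN=1 launch_lpa` only prints the arguments.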

- Run the script.

```shell
sh generate_data.sh
```

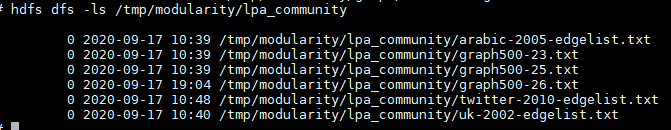
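When the script is run again, for example to try other executor settings, the job may fail because its output path already exists. Spark's `saveAsTextFile` refuses to write to an existing output directory, and it is only an assumption here that the LPA example writes its result that way. The sketch below removes the previous result first. `run`, `DRY_RUN`, and `clean_lpa_output` are hypothetical names. Unless HDFS trash is enabled, the deletion cannot be undone.

```shell
# Hypothetical helper: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$@"
  else
    "$@"
  fi
}

LPA_OUTPUT=/tmp/modularity/lpa_community/graph500-25.txt

# Remove a previous LPA result before a rerun.
# -r deletes directories recursively; -f suppresses the error if the path is absent.
clean_lpa_output() {
  run hdfs dfs -rm -r -f "$LPA_OUTPUT"
}
```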

- Check the generated community dataset.

```shell
hdfs dfs -ls /tmp/modularity/lpa_community/
```
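For a quick sanity check of the result, the community file can be streamed out of HDFS and summarized. This sketch assumes each line holds a vertex ID followed by its community label, separated by whitespace. The LPA example's actual output format is not documented here, so look at a few lines first, for example with `hdfs dfs -cat <path> | head`. `community_stats` is a hypothetical name.

```shell
# Hypothetical summary of an LPA result read from stdin, assuming each line is
# "<vertexId> <communityLabel>" separated by whitespace.
# Prints the number of vertices, distinct communities, and the largest community size.
community_stats() {
  awk '
    NF >= 2 {
      vertices++
      if (!($2 in size)) communities++
      size[$2]++
      if (size[$2] > largest) largest = size[$2]
    }
    END { printf "vertices=%d communities=%d largest=%d\n", vertices, communities, largest }
  '
}
```

If the listing above shows graph500-25.txt as a directory of part files, as Spark's text output usually is, pipe them in with `hdfs dfs -cat '/tmp/modularity/lpa_community/graph500-25.txt/part-*' | community_stats`.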