Creating Storage Pools and a File System

CephFS requires two pools, one for data and one for metadata. This section describes how to create the two pools, fs_data and fs_metadata.

- Run the following commands on ceph1 to create the storage pools.

    cd /root/ceph-mycluster/
    ceph osd pool create fs_data 32 32
    ceph osd pool create fs_metadata 8 8

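- The two trailing numbers in the pool creation command, for example the two 32s in ceph osd pool create fs_data 32 32, are the pool's pg_num and pgp_num, that is, the number of placement groups (PGs) in the pool and the number of PGs used for placement (PGPs).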

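- The official Ceph documentation recommends that the total number of PGs across all pools in the cluster be approximately (number of OSDs × 100) / data redundancy factor. The data redundancy factor is the number of replicas in replication mode, and the sum of data chunks and coding chunks in erasure coding (EC) mode. For example, the factor is 3 in three-replica mode and 6 in EC 4+2 mode.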

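- For example, suppose the cluster has 3 servers with 12 OSDs each, 36 OSDs in total, and uses three replicas, so the data redundancy factor is 3. The formula gives (36 × 100) / 3 = 1200. The PG count is generally set to a power of 2. Because fs_data holds far more data than the other pools, it is allocated proportionally more PGs. You can therefore set the PG count of fs_data to 1024 and that of fs_metadata to 128 or 256.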

- For more pool operations, see the description of the pool commands in the Ceph open source community documentation.

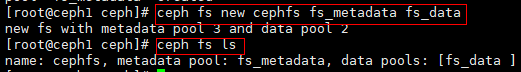
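The sizing rule in the notes above can be worked through as a small shell sketch. It assumes the 36-OSD, three-replica example cluster. The function names pg_target and floor_pow2 are hypothetical helpers, not Ceph commands, and rounding down to a power of two is one reasonable reading of the recommendation rather than a rule stated by Ceph.

```shell
#!/bin/sh
# Sketch of the PG sizing rule: total PGs ~ (OSD count * 100) / redundancy factor.
# pg_target and floor_pow2 are illustrative helpers, not part of the ceph CLI.

# pg_target OSDS FACTOR: approximate total PG count for the whole cluster.
pg_target() {
    echo $(( $1 * 100 / $2 ))
}

# floor_pow2 N: largest power of two that does not exceed N (N >= 1).
floor_pow2() {
    p=1
    while [ $(( p * 2 )) -le "$1" ]; do
        p=$(( p * 2 ))
    done
    echo "$p"
}

total=$(pg_target 36 3)   # 3 servers x 12 OSDs, three replicas
echo "target total PGs: $total"
echo "power-of-two budget: $(floor_pow2 "$total")"
```

For the example cluster this prints 1200 and 1024, which matches the 1024 PGs suggested for fs_data.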

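- Create a file system on top of these pools. Here cephfs is the file system name, and fs_metadata and fs_data are the pool names. Note the order: the metadata pool comes before the data pool.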

    ceph fs new cephfs fs_metadata fs_data

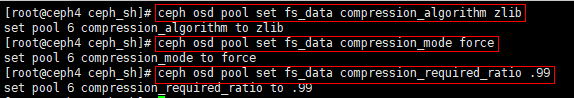

- (Optional) Enable zlib compression on the storage pool.

    ceph osd pool set fs_data compression_algorithm zlib
    ceph osd pool set fs_data compression_mode force
    ceph osd pool set fs_data compression_required_ratio .99
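To confirm that the compression settings took effect, the values can be read back with ceph osd pool get. The following is a minimal sketch, assuming it runs on a node with cluster admin access such as ceph1; show_compression is a hypothetical helper name. With compression_mode set to force, Ceph tries to compress all writes, and a compression_required_ratio of .99 means the compressed form is stored only when it is no larger than 99% of the original size.

```shell
#!/bin/sh
# Read back the compression settings of a pool (sketch; needs cluster access).
# show_compression is an illustrative helper, not a ceph subcommand.
show_compression() {
    pool=$1
    for key in compression_algorithm compression_mode compression_required_ratio; do
        ceph osd pool get "$pool" "$key"
    done
}

# Only query the cluster when the ceph CLI is available on this host.
if command -v ceph >/dev/null 2>&1; then
    show_compression fs_data
fi
```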

- View the created CephFS file system.

    ceph fs ls
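Because the argument order of ceph fs new is easy to get wrong, the listing can also be checked in a script. This is a sketch only: it assumes ceph fs ls prints one line per file system in the form "name: cephfs, metadata pool: fs_metadata, data pools: [fs_data ]", which may differ between Ceph releases, and fs_uses_pools is a hypothetical helper name.

```shell
#!/bin/sh
# fs_uses_pools FS META DATA: succeed if `ceph fs ls` lists FS with META as its
# metadata pool and DATA among its data pools.
# Assumes the plain-text output format described above; illustrative helper only.
fs_uses_pools() {
    ceph fs ls \
        | grep "name: $1," \
        | grep "metadata pool: $2," \
        | grep -q "data pools: \[.*$3"
}

# Only query the cluster when the ceph CLI is available on this host.
if command -v ceph >/dev/null 2>&1; then
    if fs_uses_pools cephfs fs_metadata fs_data; then
        echo "cephfs is backed by fs_metadata (metadata) and fs_data (data)"
    else
        echo "cephfs not found, or its pools differ from the expected ones" >&2
    fi
fi
```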
